Figure 1. Deep learning algorithms comprise a spectrum of operations. Although matrix multiplication is dominant, optimizing performance efficiency while maintaining accuracy requires the core architecture to efficiently support all of the auxiliary functions. Credit: IBM

Recent advances in deep learning and exponential growth in the use of machine learning across application domains have made AI acceleration critically important. IBM Research has been building a pipeline of AI hardware accelerators to meet this need. At the 2018 VLSI Circuits Symposium, we presented a multi-TeraOPS accelerator core building block that can be scaled across a broad range of AI hardware systems. This digital AI core features a parallel architecture that ensures very high utilization and efficient compute engines that carefully leverage reduced precision.

Approximate computing is a central tenet of our approach to harnessing "the physics of AI", in which highly energy-efficient computing gains are achieved by purpose-built architectures, initially using digital computations and later including analog and in-memory computing.

Historically, computation has relied on high-precision 64- and 32-bit floating point arithmetic. This approach delivers calculations accurate to the nth decimal place, a level of accuracy critical for scientific computing tasks like simulating the human heart or calculating space shuttle trajectories. But do we need this level of accuracy for common deep learning tasks? Does our brain require a high-resolution image to recognize a family member, or a cat? When we enter a text string for search, do we require precision in the relative ranking of the 50,002nd most useful reply vs. the 50,003rd? The answer is that many tasks, including these examples, can be accomplished with approximate computing.

Since full precision is rarely required for common deep learning workloads, reduced precision is a natural direction. Computational building blocks with 16-bit precision engines are 4x smaller than comparable blocks with 32-bit precision; this gain in area efficiency becomes a boost in performance and power efficiency for both AI training and inferencing workloads. Simply stated, in approximate computing, we can trade numerical precision for computational efficiency, provided we also develop algorithmic improvements to retain model accuracy. This approach also complements other approximate computing techniques—including recent work that described novel training compression approaches to cut communications overhead, leading to 40-200x speedup over existing methods.
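The trade-off between precision and accuracy can be seen in a few lines. The following is a minimal sketch, not the core's actual datapath: it emulates 16-bit and 32-bit rounding with Python's `struct` module and compares a dot product accumulated entirely in FP16 against one that multiplies FP16 inputs but accumulates in FP32. The helper names (`to_fp16`, `to_fp32`, `dot`) are hypothetical, and the choice of an FP32 accumulator is an assumption used only to illustrate the kind of algorithmic care that reduced precision calls for.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def dot(a, b, round_input, round_acc):
    """Dot product with inputs rounded by round_input and the running
    sum rounded by round_acc after every addition."""
    acc = 0.0
    for x, y in zip(a, b):
        product = round_input(x) * round_input(y)
        acc = round_acc(acc + product)
    return acc


# Rounding a single value: 0.1 is not exactly representable in FP16.
tenth_fp16 = to_fp16(0.1)

# Accumulating 4096 ones. FP16 has an 11-bit significand, so once the
# running sum reaches 2048 the next representable value is 2050 and
# adding 1.0 no longer changes the sum.
n = 4096
ones = [1.0] * n
fp16_acc = dot(ones, ones, to_fp16, to_fp16)  # FP16 inputs, FP16 accumulator
fp32_acc = dot(ones, ones, to_fp16, to_fp32)  # FP16 inputs, FP32 accumulator

print(tenth_fp16, fp16_acc, fp32_acc)
```

In this toy case the all-FP16 sum stalls at half the true value, while the same FP16 inputs with a wider accumulator give the exact answer. Smaller engines therefore need to be paired with numerically aware algorithms if model accuracy is to be retained.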

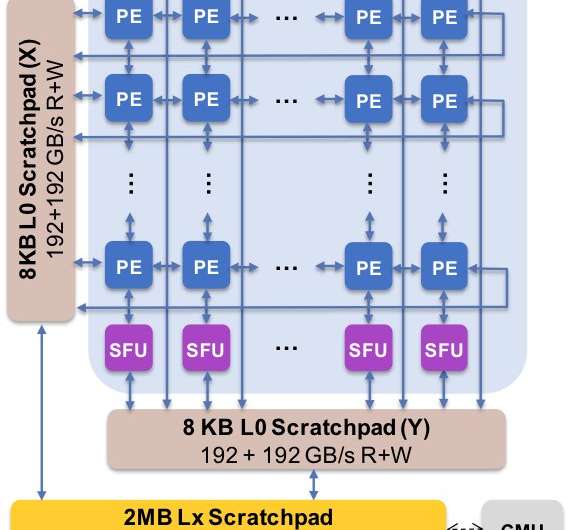

Figure 2. The core architecture captures the customized dataflow with scratchpad hierarchy. The processing element (PE) exploits reduced precision for matrix multiplication operations and some activation functions whereas the special function units (SFU) retain 32-bit floating point precision for the remaining vector operations. Credit: IBM

We presented experimental results of our digital AI core at the 2018 Symposium on VLSI Circuits. The design of our new core was governed by four objectives:

- End-to-end performance: Parallel computation, high utilization, high data bandwidth

- Deep learning model accuracy: As accurate as high-precision implementations

- Power efficiency: Application power should be dominated by compute elements

- Flexibility and programmability: Allow tuning of current algorithms as well as the development of future deep learning algorithms and models

Our new architecture has been optimized for not just matrix multiplication and convolutional kernels, which tend to dominate deep learning computations, but also a spectrum of activation functions that are a part of the deep learning computational workload. Furthermore, our architecture offers support for native convolutional operations, allowing deep learning training and inference tasks on images and speech data to run with exceptional efficiency on the core.

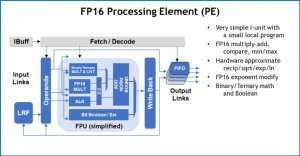

Figure 3. Processing Element (PE) with 16-bit floating point (FP16) capabilities for matrix multiplication operations, binary and ternary math, activation functions and Boolean operations. Credit: IBM

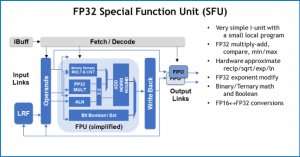

To illustrate how the core architecture has been optimized for a variety of deep learning functions, Figure 1 shows the breakdown of operation types within deep learning algorithms across a spectrum of application domains. The dominant matrix multiplication components are computed using a customized dataflow organization of the Processing Elements shown in Figures 2 and 3, where reduced-precision computations can be exploited efficiently. The remaining vector functions (all of the non-red bars in Figure 1) are executed in either the Processing Elements or the Special Function Units shown in Figures 3 and 4, depending on the precision needs of the specific function.
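The division of labor between the two unit types can be sketched in software. This is an illustrative model only, under several assumptions: the function names (`pe_matvec`, `pe_relu`, `sfu_softmax`) are hypothetical, ReLU stands in for an activation that tolerates FP16, and softmax stands in for a vector function that is kept in FP32. The source does not say which specific functions run on which unit.

```python
import math
import struct


def to_fp16(x: float) -> float:
    """Round to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def pe_matvec(weights, x):
    """Hypothetical PE stage: matrix-vector product with FP16 operands
    and an FP16 result (the sum is kept in double here for simplicity)."""
    out = []
    for row in weights:
        acc = 0.0
        for w, v in zip(row, x):
            acc += to_fp16(w) * to_fp16(v)
        out.append(to_fp16(acc))
    return out


def pe_relu(v):
    """Hypothetical PE stage: an activation that tolerates FP16."""
    return [to_fp16(max(0.0, e)) for e in v]


def sfu_softmax(v):
    """Hypothetical SFU stage: a vector function evaluated in FP32."""
    m = max(v)  # subtract the max for numerical stability
    exps = [to_fp32(math.exp(e - m)) for e in v]
    total = to_fp32(sum(exps))
    return [to_fp32(e / total) for e in exps]


weights = [
    [0.5, -0.25, 0.125],
    [1.0, 0.75, -0.5],
    [-0.3, 0.2, 0.1],
]
x = [1.0, 2.0, 3.0]

hidden = pe_relu(pe_matvec(weights, x))  # reduced-precision path
probs = sfu_softmax(hidden)              # FP32 path

print(hidden)
print(probs)
```

The bulk of the arithmetic (the multiply-accumulates) runs on the cheap reduced-precision path, while the small number of precision-sensitive vector operations are routed to a wider unit.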

At the Symposium, we showed hardware results confirming that this single-architecture approach is capable of both training and inference and supports models in multiple domains (e.g., speech, vision, natural language processing). Other groups point to the "peak performance" of their specialized AI chips while sustaining only a small fraction of that peak. We have instead focused on maximizing sustained performance and utilization, since sustained performance translates directly into user experience and response times.
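The distinction between peak and sustained performance comes down to simple arithmetic. The sketch below uses two hypothetical chips with invented numbers, not measurements of any real hardware, to show why a chip with a lower peak but higher utilization can finish the same workload sooner. The names `utilization` and `runtime_seconds` are illustrative.

```python
def utilization(sustained_tops: float, peak_tops: float) -> float:
    """Fraction of peak throughput actually delivered on a workload."""
    if peak_tops <= 0:
        raise ValueError("peak_tops must be positive")
    return sustained_tops / peak_tops


def runtime_seconds(total_tera_ops: float, sustained_tops: float) -> float:
    """Time to finish a workload at a given sustained throughput."""
    if sustained_tops <= 0:
        raise ValueError("sustained_tops must be positive")
    return total_tera_ops / sustained_tops


# Hypothetical chips: all numbers are invented for illustration only.
chip_a = {"peak": 100.0, "sustained": 20.0}  # high peak, low utilization
chip_b = {"peak": 40.0, "sustained": 32.0}   # lower peak, high utilization
workload = 640.0  # total work in tera-operations (assumed)

util_a = utilization(chip_a["sustained"], chip_a["peak"])
util_b = utilization(chip_b["sustained"], chip_b["peak"])
time_a = runtime_seconds(workload, chip_a["sustained"])
time_b = runtime_seconds(workload, chip_b["sustained"])

print(f"chip A: {util_a:.0%} utilized, {time_a:.1f} s")
print(f"chip B: {util_b:.0%} utilized, {time_b:.1f} s")
```

Only the sustained figure enters the runtime calculation, which is why it is the number that determines response time.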

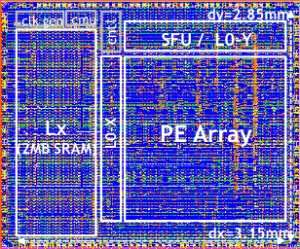

Our testchip is shown in Figure 5. Using this testchip, built in 14LPP technology, we've successfully demonstrated both training and inferencing, across a broad deep learning library, exercising all operations commonly used in deep learning tasks, including matrix multiplications, convolutions and various non-linear activation functions.

Figure 4. Special Function Unit (SFU) with 32-bit floating point (FP32) for certain vector computations. Credit: IBM

In the VLSI paper, we highlighted the flexibility and multi-purpose capability of the digital AI core and its native support for multiple dataflows. The approach is also fully modular: this AI core can be integrated into SoCs, CPUs, or microcontrollers and used for training, inference, or both. Chips using the core can be deployed in the data center or at the edge.

Driven by a fundamental understanding of deep learning algorithms at IBM Research, we expect the precision requirements for training and inference to continue to scale down, which will drive major efficiency improvements in the hardware architectures needed for AI. Stay tuned for more research from our team.

Figure 5. Digital AI Core testchip, based on 14LPP technology, including 5.75M gates, 1.00M flip-flops, 16KB L0 and 16KB of PE local registers. This chip was used to demonstrate both training and inferencing across a wide range of AI workloads. Credit: IBM

Provided by IBM