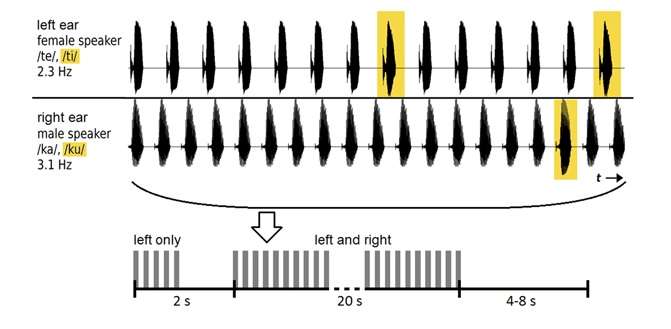

An auditory brain-computer interface can tell which speaker a listener is paying attention to with an accuracy of up to 80%, depending on the analysis time. Credit: Souto et al. ©2016 IOP Publishing

(Phys.org)—Researchers are working on the early stages of a brain-computer interface (BCI) that can tell who you're listening to in a room full of noise and other people talking. In the future, the technology could be incorporated into hearing aids as tiny beam-formers that point in the direction of interest so that they tune in to certain conversations, sounds, or voices that an individual is paying attention to, while tuning out unwanted background noise.

The researchers, Carlos da Silva Souto and coauthors at the University of Oldenburg's Department of Medical Physics and Cluster of Excellence Hearing4all, have published a paper on a proof-of-concept auditory BCI in a recent issue of Biomedical Physics and Engineering Express.

So far, most BCI research has focused on systems that use visual stimuli, which currently outperform systems that use auditory stimuli, possibly because the visual system occupies a larger cortical surface than the auditory cortex. However, for individuals who are visually impaired or completely paralyzed, an auditory BCI could offer an alternative.

In the new study, 12 volunteers listened to two recorded voices (one male, one female) speaking repeated syllables, and were asked to pay attention to just one of the voices. In early sessions, electroencephalogram (EEG) data on the electrical activity of the brain was used to train the participants. In later sessions, the system was tested on how accurately it classified the EEG data to determine which voice the volunteer was paying attention to.

At first, the system classified the data with about 60% accuracy, which is above chance level but not good enough for practical use. By increasing the analysis time from 20 seconds to 2 minutes, the researchers could improve the accuracy to nearly 80%. Although this analysis window is too long for real-life situations, and the accuracy can be further improved, the results are among the first to show that it is possible to classify EEG data evoked by spoken syllables.
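One intuition for why a longer analysis window can help is that averaging more noisy measurements makes a weak signal easier to detect. The toy simulation below is only a sketch of that general idea, not the researchers' method: the per-second "feature", the effect size of 0.07, and the function name `simulate_accuracy` are all assumptions made for illustration, and the numbers it prints are not the study's results.

```python
import random


def simulate_accuracy(window_s, effect=0.07, trials=2000, seed=0):
    """Toy model (hypothetical): each second yields one noisy feature whose
    mean is +effect or -effect depending on the attended voice. The
    'classifier' guesses the voice from the sign of the window average."""
    rng = random.Random(seed)
    correct = 0
    for _ in range(trials):
        label = rng.choice((-1, 1))  # which voice is attended
        samples = [rng.gauss(effect * label, 1.0) for _ in range(window_s)]
        mean = sum(samples) / len(samples)
        predicted = 1 if mean > 0 else -1
        correct += predicted == label
    return correct / trials


if __name__ == "__main__":
    # Compare a 20-second window with a 2-minute window.
    for window_s in (20, 120):
        acc = simulate_accuracy(window_s)
        print(f"{window_s:>3} s window: simulated accuracy {acc:.2f}")
```

In this simplified model, the noise in the average shrinks as the window grows, so the longer window is classified correctly more often, at the cost of a slower decision.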

In the future, the researchers plan to optimize the classification methods to further improve the system's performance.

More information: Carlos da Silva Souto et al. "Influence of attention on speech-rhythm evoked potentials: first steps towards an auditory brain-computer interface driven by speech." Biomedical Physics & Engineering Express. DOI: 10.1088/2057-1976/2/6/065009

© 2017 Phys.org