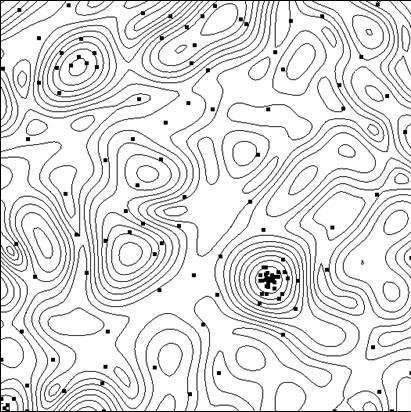

Level lines of the objective function and the trial points evaluated by the algorithm while searching for the minimum. Credit: Lobachevsky University

To create highly effective technical systems and technological processes, researchers need more than new principles, new materials, new physical effects and other solutions that determine the overall structure of the object being created. They also have to choose the best combination of the object's parameters (geometric dimensions, electrical characteristics, etc.), since even with a fixed overall structure, changes in the parameters can significantly affect the effectiveness indicators.

In computer-aided design, options can be tested by analyzing the object's mathematical model for different parameter values. As the models become increasingly complex, the need arises for a targeted choice of options in the search for the optimal (most effective) solution.

A team of scientists from the Lobachevsky State University of Nizhny Novgorod (UNN) led by Professor Roman Strongin has been studying targeted choice in the search for the optimal solution. This approach analyzes a subset of the possible options with the aim of excluding obviously unpromising cases and concentrating the search on the subset that contains the best option.

"When mathematical models of the processes that occur in an object become more complicated, the efficiency characteristics will not possess the property of monotonicity, that is why local search methods are insufficient to evaluate the best option," says Professor Roman Strongin.

The global search procedures used in such problems (also called multi-extremal optimization problems) keep the search targeted by exploiting the fact that limited changes in the object's parameters produce only limited changes in its characteristics. Mathematically, this assumption can be formulated as the Lipschitz condition, the uniform Hölder condition, etc.
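For readers who want the two conditions spelled out, they can be written in their standard textbook form as follows. The symbols here (objective f, search domain D, constants L and H, exponent α) are notation chosen for this illustration and are not taken from the UNN paper.

```latex
% Lipschitz condition with constant L > 0 on the search domain D
|f(x') - f(x'')| \le L \,\|x' - x''\|, \qquad x', x'' \in D

% Uniform Hölder condition with constant H > 0 and exponent 0 < \alpha \le 1
|f(x') - f(x'')| \le H \,\|x' - x''\|^{\alpha}, \qquad x', x'' \in D
```

In both cases, a known value at one point bounds how low the function can fall nearby, which is what allows a method to rule out whole regions without evaluating them.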

Multi-extremal optimization problems offer little scope for analytical study, and solving them numerically is computationally expensive, since computational costs grow exponentially as the dimension of the problem increases.
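A rough feel for this growth comes from the simplest exhaustive scheme, a uniform grid, which is used here only as an illustration and is not one of the methods discussed in the article. The function name and the choice of 100 points per axis are assumptions made for the example.

```python
def grid_trials(points_per_axis: int, dimension: int) -> int:
    """Number of objective evaluations needed to cover a uniform grid."""
    return points_per_axis ** dimension


# Same resolution per axis, increasing number of parameters.
for dimension in (1, 2, 5, 10):
    print(dimension, grid_trials(100, dimension))
```

Each additional parameter multiplies the number of evaluations by the number of points per axis, so a resolution that is trivial in one dimension becomes unreachable in ten.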

According to Konstantin Barkalov, Associate Professor of the UNN Department of Software and Supercomputer Technologies, the use of modern parallel computing systems expands the scope of global optimization methods. However, at the same time, it poses the challenge of effectively parallelizing the search process.

"That is why the main efforts in this area should be concentrated on developing efficient parallel methods for numerically solving multi-extremal optimization problems and creating appropriate software for modern computing systems on the basis of such methods," says Barkalov.

Usually, the methods of global optimization (both sequential and parallel) are intended to solve a single optimization problem. To solve a series of q problems, the problems in the series are solved sequentially, one after another. Therefore, the optimum estimate in the i-th problem of the series remains undefined until all preceding problems of the series (with the indices 1, 2, ..., i - 1) have been completely solved. If computational resources are limited and run out while the i-th problem is being solved, no optimum estimates will be obtained for the problems i + 1, ..., q.

Situations when a series of q problems have to be solved are not extraordinary. For example, a series of scalar problems arises when seeking a Pareto set in solving multi-objective optimization problems. In this case, the solution of a single scalar problem corresponds to one of the Pareto optimal points of a multi-objective problem. A series of optimization problems also arises when using dimension reduction methods to solve multidimensional optimization problems. Finally, a series of test problems can also be obtained with the help of a particular test problem generator.
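As a minimal sketch of how such a series can arise, the snippet below turns a two-objective problem into q scalar problems using weighted-sum scalarization. This is one common scalarization chosen for illustration (it can only reach Pareto points on the convex part of the front) and is not necessarily the one used by the UNN team; the function name `make_scalar_series` is hypothetical.

```python
from typing import Callable, List

Objective = Callable[[float], float]


def make_scalar_series(f1: Objective, f2: Objective, q: int) -> List[Objective]:
    """Build q scalar objectives w*f1 + (1-w)*f2 with w spread evenly over [0, 1]."""
    if q < 2:
        raise ValueError("q must be at least 2")
    series = []
    for k in range(q):
        w = k / (q - 1)
        # Bind w as a default argument so each lambda keeps its own weight.
        series.append(lambda x, w=w: w * f1(x) + (1.0 - w) * f2(x))
    return series


# Two conflicting objectives: one prefers x = 0, the other x = 1.
series = make_scalar_series(lambda x: x ** 2, lambda x: (x - 1.0) ** 2, q=5)
print(len(series), series[2](0.5))
```

Minimizing each function in `series` yields one candidate Pareto point, so q weights give q independent global optimization problems.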

The scientists believe that when solving a set of problems, it is necessary to have initial estimates of the solutions for all problems at once, so that at any time it is possible to judge whether continuing the search is worthwhile. It is also desirable to obtain the optimum estimates for all problems with the same accuracy.

Running many independent processes in a parallel computing system, each of the processes solving one problem from a series, has a number of drawbacks. First, a workload imbalance between the processors will occur. If solving the i-th problem requires considerably fewer iterations of the method than solving the j-th problem, the processor tasked with handling the i-th problem would remain idle after completing the task. Second, the estimates of the optima will be obtained with different precision in different problems. Simpler problems will be solved with higher precision, whereas precision will be lower for more complex problems.
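The first drawback can be made concrete with a small calculation. The sketch below assumes one processor per problem, equal cost per iteration, and that the run ends when the slowest problem finishes; the iteration counts are invented for the example.

```python
from typing import Sequence


def idle_fraction(iterations_per_problem: Sequence[int]) -> float:
    """Share of total processor time spent idle with one problem per processor."""
    wall_time = max(iterations_per_problem)            # slowest problem sets the run time
    available = wall_time * len(iterations_per_problem)
    busy = sum(iterations_per_problem)
    return 1.0 - busy / available


# Hypothetical iteration counts for four problems of differing difficulty.
print(idle_fraction([100, 400, 1000, 2500]))
```

With these made-up counts, more than half of the available processor time is wasted while three processors wait for the fourth.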

The aim of the research performed at the Lobachevsky University was to develop a new method for solving a series of global optimization problems that would ensure: (i) a uniform loading of all the cores/processors employed; (ii) a uniform convergence to the solutions of all problems in the series.
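The article does not describe the new algorithm itself, so the following is only a sketch of the general idea under strong assumptions, not the UNN method. It uses the classical Piyavskii-Shubert scheme for one-dimensional Lipschitz functions with a known constant, and spends a shared trial budget on whichever problem currently has the largest gap between its best found value and its guaranteed lower bound. The class `Problem`, the function `solve_series` and the test functions are all hypothetical.

```python
import math


class Problem:
    """One 1-D problem min f(x) on [a, b] with an assumed Lipschitz constant."""

    def __init__(self, f, a, b, lipschitz):
        self.f, self.L = f, lipschitz
        self.xs = [a, b]            # trial points, kept sorted
        self.zs = [f(a), f(b)]      # objective values at those points

    def _best_interval(self):
        """Return (lower bound, right index) of the interval with the smallest bound."""
        return min(
            (0.5 * (self.zs[i - 1] + self.zs[i])
             - 0.5 * self.L * (self.xs[i] - self.xs[i - 1]), i)
            for i in range(1, len(self.xs))
        )

    def gap(self):
        """Best value found minus the lower bound on the true minimum."""
        return min(self.zs) - self._best_interval()[0]

    def step(self):
        """Run one trial where the piecewise-linear lower bound is smallest."""
        _, i = self._best_interval()
        x = (0.5 * (self.xs[i - 1] + self.xs[i])
             - (self.zs[i] - self.zs[i - 1]) / (2.0 * self.L))
        self.xs.insert(i, x)
        self.zs.insert(i, self.f(x))


def solve_series(problems, budget):
    """Spend a shared budget, always on the problem with the largest gap."""
    for _ in range(budget):
        max(problems, key=Problem.gap).step()
    return [(min(p.zs), p.gap()) for p in problems]


problems = [
    Problem(lambda x: math.sin(3 * x) + 0.5 * x, 0.0, 4.0, lipschitz=3.5),
    Problem(lambda x: (x - 1.0) ** 2, 0.0, 4.0, lipschitz=6.0),
]
for best, gap in solve_series(problems, budget=200):
    print(f"best value {best:.4f}, remaining gap {gap:.4f}")
```

Because the next trial always goes to the least resolved problem, the gaps shrink together and every problem has a usable estimate whenever the budget runs out. In a parallel setting one could, as a further assumption, hand the p problems with the largest gaps to p processors at each step, which keeps all of them busy.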

In the theoretical part of their paper, the UNN scientists proved a theorem on the uniform convergence of the new algorithm. The experimental part of the work consisted of solving a series of several hundred test problems of different dimensions, and the results demonstrated uniform convergence in practice.

The UNN scientists also consider computationally intensive global optimization problems whose solution may require supercomputing systems with exaflops performance. To overcome this computational complexity, the researchers propose generalized parallel computational schemes, which can incorporate numerous efficient parallel algorithms of global optimization. The proposed schemes include methods for the multilevel decomposition of parallel computations, intended to ensure that supercomputing systems with shared and distributed memory and thousands of processors are used efficiently on global optimization problems.

More information: R. G. Strongin et al, Generalized Parallel Computational Schemes for Time-Consuming Global Optimization, Lobachevskii Journal of Mathematics (2018). DOI: 10.1134/S1995080218040133

Provided by Lobachevsky University