March 29, 2023 report

The death of open access mega-journals?

The entire scientific publishing world is currently undergoing a massive stress test of quantity vs. quality, open access (free) vs. institutional subscriptions (paywall), and how to best judge the integrity of a publication.

The traditional model in scientific journal publishing has historically been to collect fees from universities and research institutions, and publish articles by researchers connected to those institutions through a slow and exhausting peer-review process. To read any published studies, you would need to be affiliated with an organization that has a subscription, or pay a hefty fee to read a single study online. This subscription service allowed institutions unlimited access to current published research as well as a pathway to publishing and recognition of their research for gaining grant funding.

For the traditional publishing industry, this model provided a consistent source of revenue based on the number of journals they provided, not the number of papers published within them. Rather than actively attempting to attract more papers, publishers grew by adding more specialty journals.

Open-access scientific journals came along with the promise of free access to information. No longer would the availability of research papers be hidden behind paywalls, and papers could be submitted from any institution equally. Instead of subscription fees, these journals charged researchers for submitting papers on an individual basis.

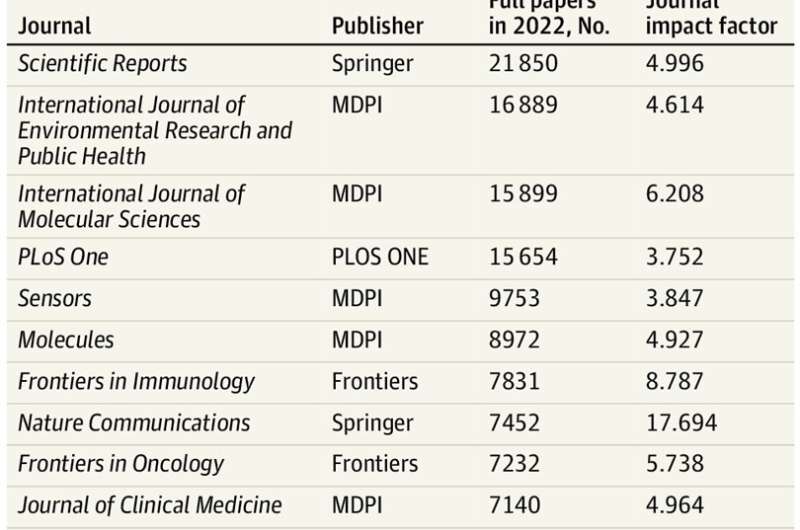

Mega-journals took the open-access model and ran with it. Some of the biggest mega-journals were the early open-access journals PLoS One and Scientific Reports. Many other mega-journals have surfaced, some converting from traditional subscription-based models and all populating the publishing space with subject-specific sub-journals.

An opinion letter, "The Rapid Growth of Mega-Journals: Threats and Opportunities," published in the journal JAMA, addresses some of the pressing issues regarding the mass publishing of scientific literature.

In the JAMA Viewpoint letter, written by researchers from Italy and corresponding author John P. A. Ioannidis, MD of Stanford University, the authors share their concerns that "...explosive growth of mega-journals may be accompanied by the fall of some previously prestigious journals."

They point out that some mega-journals, like PLoS One and Scientific Reports, publish papers over a wide spectrum of research topics, and so do not pose a threat to the traditional publishing of specialty journals. However, many newer mega-journals have begun specializing in discipline-focused journals that are publishing faster and in greater volume than traditional journals can keep up with.

In an example from the letter, the authors point out that in 2022 the International Journal of Environmental Research and Public Health by MDPI published 16,889 full articles compared to the American Journal of Public Health (514), European Journal of Public Health (238), American Journal of Epidemiology (222), and Epidemiology (101). An additional concern is that the way a study or journal is ranked in terms of impact factor has a lot to do with the number of citations it receives.

Impact factor

The impact factor of journals is curated by Clarivate Analytics' Web of Science group. It is calculated as the number of citations received in a given year by the items a journal published in the previous two years, divided by the number of "citable" items the journal published in those two years. Straightforward as the method is, it does illustrate how getting more citations and publishing more papers in the current year helps lift the impact factor.
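The arithmetic described above can be sketched in a few lines of Python. This is only an illustration of the ratio as the article describes it, not Clarivate's actual procedure; the function name and the numbers are made up for the example.

```python
def impact_factor(citations_this_year: int, citable_items_prev_two_years: int) -> float:
    """Citations received this year to the previous two years of publications,
    divided by the number of "citable" items published in those two years."""
    if citable_items_prev_two_years <= 0:
        raise ValueError("the journal needs at least one citable item")
    return citations_this_year / citable_items_prev_two_years


# Hypothetical journal: 1,200 citable items over the previous two years,
# which together received 3,000 citations this year.
example = impact_factor(3000, 1200)
print(example)  # 2.5
```

Every extra citation counted in the numerator raises the result, whichever journal it comes from, which is why citations from a journal's own pages matter.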

This may account for the rise in self-citations, where papers in a journal seem to favor citing other papers published in the same journal. Papers in the previously mentioned International Journal of Environmental Research and Public Health cited other research papers published in the same journal 12% of the time. Much as the number of likes or views on social media may affect how visible a post is to the algorithms, the number of citations affects the impact factor of a journal.

Journal citation pressure is not limited to mega-journals, but the extent to which it happens may be. The journal PLoS One has around 2% self-citations and Scientific Reports self-cites about 3% of the time. Compare this to a collection of open-access journals published by MDPI, which averaged about 12% self-citation across 11 different journals. One of the journals, Animals, had an incredible 22% rate of self-citation, suggesting that a great deal of what we know about all animals has been published in this one journal.
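For readers who want to see how such a percentage is obtained, here is a minimal sketch in Python. It assumes a self-citation rate is simply the share of a journal's outgoing references that point back to the same journal; the article does not spell out the exact counting method, and the function name and reference list below are hypothetical.

```python
def self_citation_rate(cited_journals: list[str], own_journal: str) -> float:
    """Share of references that point back to the citing journal itself."""
    if not cited_journals:
        raise ValueError("need at least one reference")
    own = own_journal.casefold()
    # Compare names case-insensitively so "animals" and "Animals" match.
    self_cites = sum(1 for name in cited_journals if name.casefold() == own)
    return self_cites / len(cited_journals)


# Hypothetical reference list: 50 references, 11 of them to the journal itself.
references = ["Animals"] * 11 + ["Nature"] * 20 + ["PLoS One"] * 19
rate = self_citation_rate(references, "Animals")
print(f"{rate:.0%}")  # 22%
```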

Delisting factor

Recently the Web of Science has removed the impact factor of nearly two dozen journals, including one of the world's largest, the International Journal of Environmental Research and Public Health. Many of the journals published by Hindawi and two by MDPI have had their impact factor ratings removed, likely reflecting concerns with the integrity of the publishing process. This is an act that will likely have a major impact on the bottom line of the publisher as the value of publishing in these journals is diminished.

While no specific details were released, a letter from Web of Science vice president Nandita Quaderi states, "We have invested in a new, internally-developed AI tool to help us identify outlier characteristics that indicate that a journal may no longer meet our quality criteria. This technology has substantially improved our ability to identify and focus our re-evaluation efforts on journals of concern. At the start of the year, more than 500 journals were flagged. Our investigations are ongoing and thus far, more than 50 of the flagged journals have failed our quality criteria and have subsequently been delisted."

The peer-review process is one possible delisting criterion. With tens of thousands of papers to assess, publishers frequently hire "guest editors" who may not be reviewing studies in their field of expertise.

Issues such as quick turnaround times might also have been flagged. In traditional publishing, the process from submitting a paper to publication can take 200 days or more. In contrast, Environmental Research and Public Health by Hindawi (not to be confused with International Journal of Environmental Research and Public Health by MDPI) boasts a submission-to-publication time of 31 days on its website.

This current round of delisting removed 19 Hindawi journals from the impact factor list. Hindawi was purchased by Wiley publishing in 2021 for $300 million and has already had to deal with thousands of retractions after uncovering thousands of fraudulent papers filled with off-subject citations.

As AI language models threaten to further stress-test the publishing world with both seemingly authentic computer-generated research papers and AI-assisted quality vetting, this may be the right time to separate the less rigorously authenticated publications from the herd.

More information: John P. A. Ioannidis et al, The Rapid Growth of Mega-Journals: Threats and Opportunities, JAMA (2023). DOI: 10.1001/jama.2023.3212

© 2023 Science X Network