World's fastest camera freezes time at 10 trillion frames per second

What happens when a new technology is so precise that it operates on a scale beyond our characterization capabilities? For example, the lasers used at INRS produce ultrashort pulses in the femtosecond range (10⁻¹⁵ s), which is far too short to visualize. Although some measurements are possible, nothing beats a clear image, says INRS professor and ultrafast imaging specialist Jinyang Liang. He and his colleagues, led by Caltech's Lihong Wang, have developed what they call T-CUP: the world's fastest camera, capable of capturing 10 trillion (10¹³) frames per second (Fig. 1). This new camera literally makes it possible to freeze time to see phenomena, and even light, in extremely slow motion.

In recent years, the junction between innovations in non-linear optics and imaging has opened the door for new and highly efficient methods for microscopic analysis of dynamic phenomena in biology and physics. But harnessing the potential of these methods requires a way to record images in real time at a very short temporal resolution—in a single exposure.

Using current imaging techniques, measurements taken with ultrashort laser pulses must be repeated many times, which is appropriate for some types of inert samples, but impossible for other more fragile ones. For example, laser-engraved glass can tolerate only a single laser pulse, leaving less than a picosecond to capture the results. In such a case, the imaging technique must be able to capture the entire process in real time.

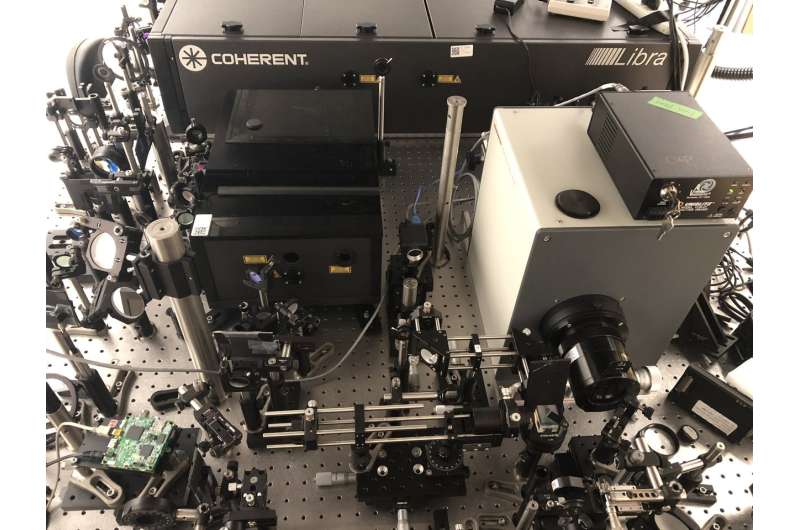

Compressed ultrafast photography (CUP) was a good starting point. At 100 billion frames per second, this method approached, but did not meet, the specifications required to integrate femtosecond lasers. To improve on the concept, the new T-CUP system was developed based on a femtosecond streak camera that also incorporates a data acquisition type used in applications such as tomography.

"We knew that by using only a femtosecond streak camera, the image quality would be limited," says Professor Lihong Wang, the Bren Professor of Medial Engineering and Electrical Engineering at Caltech and the Director of Caltech Optical Imaging Laboratory (COIL). "So to improve this, we added another camera that acquires a static image. Combined with the image acquired by the femtosecond streak camera, we can use what is called a Radon transformation to obtain high-quality images while recording ten trillion frames per second."

Setting the world record for real-time imaging speed, T-CUP can power a new generation of microscopes for biomedical, materials science, and other applications. This camera represents a fundamental shift, making it possible to analyze interactions between light and matter at an unparalleled temporal resolution.

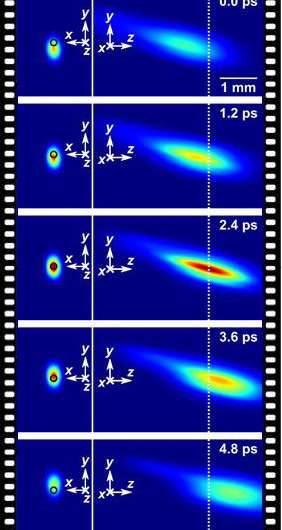

The first time it was used, the ultrafast camera broke new ground by capturing the temporal focusing of a single femtosecond laser pulse in real time (Fig. 2). This process was recorded in 25 frames taken at an interval of 400 femtoseconds and detailed the light pulse's shape, intensity, and angle of inclination.
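
The numbers in this recording can be checked with a few lines of arithmetic. The Python sketch below uses only the figures quoted in the article (25 frames, 400 femtoseconds apart, and the headline rate of 10 trillion frames per second); the variable names are illustrative, and counting the recording window as 25 full intervals is an assumption.

```python
FEMTOSECOND = 1e-15  # seconds

# Figures quoted in the article.
frame_interval_s = 400 * FEMTOSECOND
n_frames = 25
headline_fps = 10e12

# Frame rate implied by a 400 fs spacing between frames.
implied_fps = 1.0 / frame_interval_s

# Time window covered, assuming each frame accounts for one full interval.
window_s = n_frames * frame_interval_s

# Frame spacing implied by the headline rate, in femtoseconds.
headline_interval_fs = (1.0 / headline_fps) / FEMTOSECOND

print(f"implied rate: {implied_fps:.3g} frames per second")
print(f"recording window: {window_s / 1e-12:.3g} ps")
print(f"headline frame spacing: {headline_interval_fs:.3g} fs")
```

Taken at face value, a 400 fs spacing corresponds to 2.5 trillion frames per second, a quarter of the headline rate, and the 25 frames together cover about 10 picoseconds. At the full 10 trillion frames per second, consecutive frames would be about 100 fs apart.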

"It's an achievement in itself," says Jinyang Liang, the leading author of this work, who was an engineer in COIL when the research was conducted, "but we already see possibilities for increasing the speed to up to one quadrillion (10 exp 15) frames per second!" Speeds like that are sure to offer insight into as-yet undetectable secrets of the interactions between light and matter.

More information: Jinyang Liang et al., Single-shot real-time femtosecond imaging of temporal focusing, Light: Science & Applications (2018). DOI: 10.1038/s41377-018-0044-7

Journal information: Light: Science & Applications

Provided by Institut national de la recherche scientifique - INRS