Human arm sensors make robots smarter

Using arm sensors that can "read" a person's muscle movements, Georgia Institute of Technology researchers have created a control system that makes robots more intelligent. The sensors send information to the robot, allowing it to anticipate a human's movements and correct its own. The system is intended to save time and improve safety and efficiency in manufacturing plants.

It's not uncommon to see large, fast-moving robots on manufacturing floors. Humans seldom work next to them for safety reasons. Some jobs, however, require people and robots to work together. For example, a person hanging a car door on a hinge uses a lever to guide a robot carrying the door. The power-assisting device sounds practical but isn't easy to use.

"It turns into a constant tug of war between the person and the robot," explains Billy Gallagher, a recent Georgia Tech Ph.D. graduate in robotics who led the project. "Both react to each other's forces when working together. The problem is that a person's muscle stiffness is never constant, and a robot doesn't always know how to correctly react."

For example, as human operators shift the lever forward or backward, the robot recognizes the command and moves appropriately. But when they want to stop the movement and hold the lever in place, people tend to stiffen their arms, contracting the muscles on both sides at once. This creates a high level of co-contraction, in which opposing muscles pull against each other.

"The robot becomes confused. It doesn't know whether the force is purely another command that should be amplified or 'bounced' force due to muscle co-contraction," said Jun Ueda, Gallagher's advisor and a professor in the Woodruff School of Mechanical Engineering. "The robot reacts regardless."

The robot responds to that bounced force, creating vibration. The operators react in turn, stiffening their arms and producing even more force. Each side keeps reacting to the other, and the vibrations grow steadily worse.
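The runaway vibration described here can be illustrated with a deliberately simplified model. The sketch below is not the Georgia Tech team's model; it assumes the robot moves at a velocity proportional to the force it senses (a hypothetical "admittance gain") and treats the operator's stiffened arm as a spring of stiffness k_h pulling the lever back toward the point where the operator wants to hold it. In discrete time, each control step multiplies the position error by (1 - dt·gain·k_h), so once dt·gain·k_h exceeds 2 the error flips sign and grows on every step, which is the back-and-forth shaking the operator feels. All numbers are illustrative, not measured data.

```python
def simulate_hold(stiffness, gain=0.5, dt=0.01, steps=200, x0=0.01):
    """Toy model of an operator trying to hold a power-assisted load still.

    All parameters are hypothetical illustration values:
    stiffness: operator arm stiffness k_h (N/m), higher when muscles co-contract
    gain: robot admittance gain (m/s per N), how strongly sensed force is amplified
    dt: control period in seconds
    x0: initial position error in metres
    Returns the list of position errors, one per control step.
    """
    x = x0
    history = [x]
    for _ in range(steps):
        force = -stiffness * x   # stiffened arm pushes back toward the hold point
        velocity = gain * force  # robot amplifies whatever force it senses
        x = x + velocity * dt    # robot moves; the arm then reacts to the new position
        history.append(x)
    return history


relaxed = simulate_hold(stiffness=100.0)  # dt*gain*k_h = 0.5: error decays smoothly
stiff = simulate_hold(stiffness=500.0)    # dt*gain*k_h = 2.5: error alternates sign and grows
print(abs(relaxed[-1]) < abs(relaxed[0]))  # True
print(abs(stiff[-1]) > abs(stiff[0]))      # True
```

Nothing about the robot changes between the two runs; only the operator's stiffness does, which is why a controller that ignores muscle state can be stable one moment and shaking the next.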

"You don't want instability when a robot is carrying a heavy door," said Ueda.

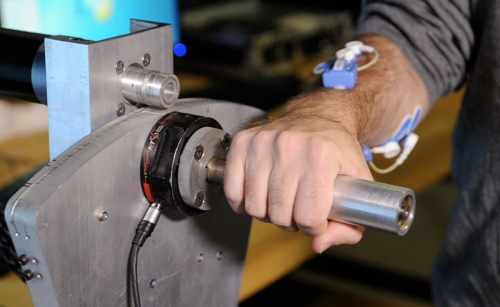

The Georgia Tech system eliminates the vibrations by using sensors worn on the operator's forearm. The sensors send muscle activity signals to a computer, which tells the robot the operator's level of muscle contraction. The system assesses the operator's physical state and adjusts how the robot interacts with the human accordingly. The result is a robot that moves easily and safely.
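One way to picture this kind of adjustment is gain scheduling. The controller estimates how strongly the operator is co-contracting from two forearm muscle signals, converts that into an estimated arm stiffness, and lowers the robot's force amplification when the operator braces. The sketch below is an illustrative assumption, not the published controller. The co-contraction index formula is one simple choice among several, and the linear stiffness map and all parameter names (`k_relaxed`, `k_braced`, `base_gain`, `margin`) are hypothetical. The stability rule comes from a toy loop in which the robot moves at gain × sensed force and the arm acts as a spring of stiffness k_h. That loop stays stable only while dt·gain·k_h < 2.

```python
def co_contraction_index(flexor, extensor):
    """Simple co-contraction index in [0, 1] from two normalized EMG envelopes.

    0 means only one muscle is active (a clear push or pull command);
    1 means both are equally active (the operator is bracing).
    This formula is an illustrative choice; several definitions exist.
    """
    total = flexor + extensor
    if total == 0:
        return 0.0
    return 2.0 * min(flexor, extensor) / total


def estimated_stiffness(cci, k_relaxed=100.0, k_braced=600.0):
    """Hypothetical linear map from co-contraction index to arm stiffness (N/m)."""
    return k_relaxed + cci * (k_braced - k_relaxed)


def scheduled_gain(flexor, extensor, base_gain=0.5, dt=0.01, margin=1.0):
    """Pick the robot's admittance gain for the next control step.

    In the toy loop x[n+1] = (1 - dt*gain*k_h) * x[n], stability needs
    dt*gain*k_h < 2. Capping the product at `margin` (<= 1 here) also
    keeps the error from changing sign, so the load does not oscillate.
    """
    k_h = estimated_stiffness(co_contraction_index(flexor, extensor))
    max_stable_gain = margin / (dt * k_h)
    return min(base_gain, max_stable_gain)


pushing = scheduled_gain(flexor=0.8, extensor=0.05)  # clear command: keeps full assist
bracing = scheduled_gain(flexor=0.7, extensor=0.7)   # holding still: assist is reduced
print(pushing, bracing)
```

The design choice here is to leave the robot responsive when the muscle signals look like a deliberate push or pull, and to soften it only when they look like bracing. That mirrors the article's description of a robot that adjusts to the operator rather than simply reacting to force.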

"Instead of having the robot react to a human, we give it more information," said Gallagher. "Modeling the operator in this way allows the robot to actively adjust to changes in the way the operator moves."

Ueda will continue to improve the system using a $1.2 million National Robotics Initiative grant from the National Science Foundation, which will fund work to better understand the mechanisms of neuromotor adaptation in human-robot physical interaction. The research is intended to benefit communities interested in adaptive shared control for advanced manufacturing and process design, including the automobile, aerospace and military sectors.

"Future robots must be able to understand people better," Ueda said. "By making robots smarter, we can make them safer and more efficient."

Provided by Georgia Institute of Technology