The human touch makes robots defter

Cornell engineers are helping humans and robots work together to find the best way to do a job, an approach called "coactive learning."

"We give the robot a lot of flexibility in learning," said Ashutosh Saxena, assistant professor of computer science. "We build on our previous work in teaching robots to plan their actions, then the user can give corrective feedback."

Saxena's research team will report their work at the Neural Information Processing Systems conference in Lake Tahoe, Calif., Dec. 5-8.

Modern industrial robots, like those on automobile assembly lines, have no brains, just memory. An operator programs the robot to move through the desired action; the robot can then repeat the exact same action every time a car goes by.

But off the assembly line, things get complicated: A personal robot working in a home has to handle tomatoes more gently than canned goods. If it needs to pick up and use a sharp kitchen knife, it should be smart enough to keep the blade away from humans.

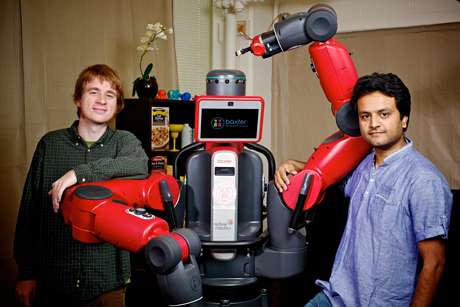

Saxena's team, led by Ph.D. student Ashesh Jain, set out to teach a robot to work on a supermarket checkout line, modifying a Baxter robot from Rethink Robotics in Boston, designed for assembly line work. It can be programmed by moving its arms through an action, but also offers a mode where a human can make adjustments while anaxctiinis in progress.

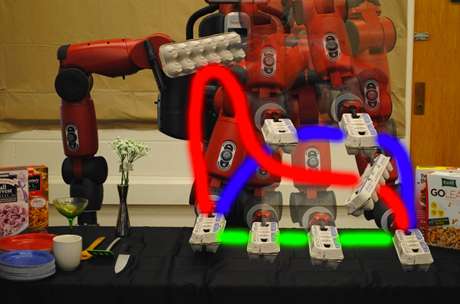

The Baxter's arms have two elbows and a rotating wrist, so it's not always obvious to a human operator how best to move the arms to accomplish a particular task. So the researchers, drawing on previous work, added programming that lets the robot plan its own motions. It displays three possible trajectories on a touch screen where the operator can select the one that looks best.

Then humans can give corrective feedback. As the robot executes its movements, the operator can intervene, guiding the arms to fine-tune the trajectory. The robot has what the researechers call a "zero-G" mode, where the robot's arms hold their position against gravity but allow the operator to move them. The first correction may not be the best one, but it may be slightly better. The learning algorithm the researchers provided allows the robot to learn incrementally, refining its trajectory a little more each time the human operator makes adjustments. Even with weak but incrementally correct feedback from the user, the robot arrives at an optimal movement.

The robot learns to associate a particular trajectory with each type of object. A quick flip over might be the fastest way to move a cereal box, but that wouldn't work with a carton of eggs. Also, since eggs are fragile, the robot is taught that they shouldn't be lifted far above the counter. Likewise, the robot learns that sharp objects shouldn't be moved in a wide swing; they are held in close, away from people.

In tests with users who were not part of the research team, most users were able to train the robot successfully on a particular task with just five corrective feedbacks. The robots also were able to generalize what they learned, adjusting when the object, the environment or both were changed.

Provided by Cornell University