New motion tracking technology is extremely precise and inexpensive, with minimal lag

Researchers at Carnegie Mellon University and Disney Research Pittsburgh have devised a motion tracking technology that could eliminate much of the annoying lag that occurs in existing video game systems that use motion tracking, while also being extremely precise and highly affordable.

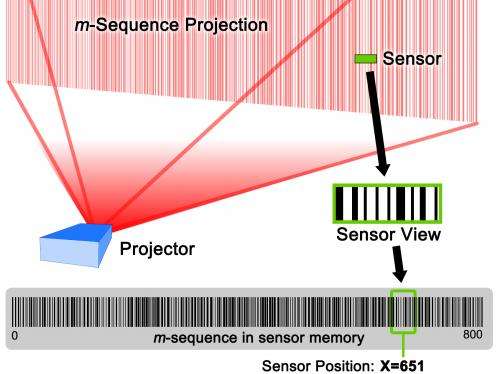

Called Lumitrack, the technology has two components—projectors and sensors. A structured pattern, which looks something like a very large barcode, is projected over the area to be tracked. Sensor units, either near the projector or on the person or object being tracked, can then quickly and precisely locate movements anywhere in that area.

"What Lumitrack brings to the table is, first, low latency," said Robert Xiao, a Ph.D. student in Carnegie Mellon's Human-Computer Interaction Institute (HCII). "Motion tracking has added a compelling dimension to popular game systems, but there's always a lag between the player's movements and the movements of the avatar in the game. Lumitrack is substantially faster than these consumer systems, with near real-time response."

Xiao said Lumitrack also is extremely precise, with sub-millimeter accuracy. Moreover, this performance is achieved at low cost. The sensors require little power and would be inexpensive to assemble in volume. The components could even be integrated into mobile devices, such as smartphones.

Xiao and his collaborators will present their findings at UIST 2013, the Association for Computing Machinery's Symposium on User Interface Software and Technology, Oct. 8-11 in St. Andrews, Scotland. Scott Hudson, professor of HCII, and Chris Harrison, a recent Ph.D. graduate of the HCII who will be joining the faculty next year, are co-authors, as are Disney Research Pittsburgh's Ivan Poupyrev, director of the Interactions Group, and Karl Willis.

Many approaches exist for tracking human motion, including expensive, highly precise systems used to create computer-generated imagery (CGI) for films. Though Lumitrack's developers have targeted games as an initial application, the technology's combination of low latency, high precision and low cost makes it suitable for many applications, including CGI and human-robot interaction.

"We think the core technology is potentially transformative and that you could think of many more things to do with it besides games," Poupyrev said.

A key to Lumitrack is the structured pattern that is projected over the tracking area. Called a binary m-sequence, the series of bars encodes a series of bits in which every run of seven consecutive bits appears only once. A simple optical sensor can thus quickly determine where it is based on which part of the sequence it sees. When two m-sequences are projected at right angles to each other, the sensor can determine its position in two dimensions; when multiple sensors are used, 3D motion tracking is possible.
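
The position-lookup idea can be illustrated with a short Python sketch. This is not Lumitrack's code: the article does not say how the pattern is generated, so the feedback rule below (based on the primitive polynomial x^7 + x + 1), the seed, and the function names `m_sequence`, `build_lookup` and `locate` are all assumptions chosen for illustration. The sketch generates one period of a binary m-sequence, indexes every seven-bit window, and recovers a position from the seven bits a sensor might see.

```python
WINDOW = 7  # window length from the article: every 7-bit run is unique


def m_sequence():
    """Return one period of a binary m-sequence, padded so all windows fit.

    Assumption: the recurrence s[k+7] = s[k] XOR s[k+1] (polynomial
    x^7 + x + 1). The article does not state which generator is used.
    """
    bits = [1, 0, 0, 0, 0, 0, 0]   # any non-zero seed works
    period = 2 ** WINDOW - 1       # 127 distinct non-zero windows
    # Pad by WINDOW - 1 bits so the last windows of the period are complete.
    while len(bits) < period + WINDOW - 1:
        bits.append(bits[-7] ^ bits[-6])
    return bits


def build_lookup(bits):
    """Map each 7-bit window to the offset where it starts."""
    return {
        tuple(bits[i:i + WINDOW]): i
        for i in range(len(bits) - WINDOW + 1)
    }


def locate(lookup, observed):
    """Return the offset of an observed window, or None if it is unknown."""
    return lookup.get(tuple(observed))


sequence = m_sequence()
lookup = build_lookup(sequence)

# A hypothetical sensor that sees the seven bars starting at offset 40.
observed = sequence[40:47]
position = locate(lookup, observed)
print(position)
```

Because each window occurs only once, a single dictionary lookup is enough to turn seven observed bits into a position along one axis. Repeating the same lookup on a second pattern projected at right angles would give the other coordinate.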

More information: chrisharrison.net/index.php/Research/Lumitrack

Provided by Carnegie Mellon University