Controlling robots with your thoughts

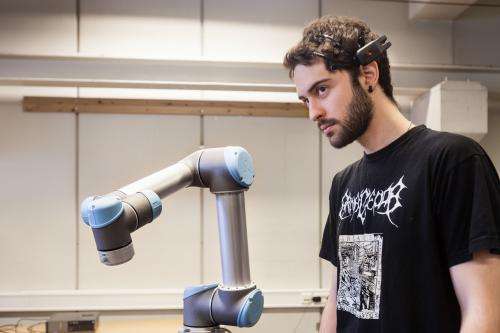

This is Angel Perez Garcia. He can make a robot move exactly as he wants via the electrodes attached to his head.

"I use the movements of my eyes, eyebrows and other parts of my face", he says. "With my eyebrows I can select which of the robot's joints I want to move" smiles Angel, who is a Master's student at NTNU.

Facial grimaces generate major electrical activity (EEG signals) across our heads, and the same happens when Angel concentrates on a symbol, such as a flashing light, on a computer monitor. In both cases the electrodes read the activity in the brain. The signals are then interpreted by a processor which in turn sends a message to the robot to make it move in a pre-defined way.
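
As a rough illustration of that last step, the sketch below shows how interpreted signals might be turned into pre-defined robot commands. It assumes the processor has already classified the raw signal into named events; the event names, joint names and step sizes are hypothetical and are not taken from Angel's system.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical joint names, for illustration only.
JOINTS = ["base", "shoulder", "elbow", "wrist"]

# Hypothetical mapping from the light the user focuses on
# to a pre-defined joint step in degrees.
LIGHT_TO_STEP = {"light_left": -5.0, "light_right": 5.0}


@dataclass
class Selector:
    """Tracks which joint is currently selected."""
    joint_index: int = 0

    def handle(self, event: str) -> Optional[Tuple[str, float]]:
        """Return a (joint, step) command, or None if nothing should move."""
        if event == "eyebrow_raise":
            # An eyebrow movement only changes which joint is selected.
            self.joint_index = (self.joint_index + 1) % len(JOINTS)
            return None
        if event in LIGHT_TO_STEP:
            # Focusing on a light triggers a pre-defined move of that joint.
            return (JOINTS[self.joint_index], LIGHT_TO_STEP[event])
        return None  # unrecognised events are ignored
```

In this sketch an eyebrow event cycles through the joints, while a light selection moves whichever joint is currently selected.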

"I can focus on a selection of lights on the screen. The robot's movements depend on which light I select and the type of activity generated in my brain", says Angel. "The idea of controlling a robot simply by using our thoughts (EEG brainwave activity), is fascinating and futuristic", he says.

A school for robots

Angel Garcia is not alone in developing new ways of manoeuvring robots. Today, teaching robots dominates activity among the cybernetics community at NTNU/SINTEF.

In the robotics hall, fellow student Signe Moe is guiding a robot by moving her arms, while SINTEF researcher and supervisor Ingrid Schjølberg is using a new training programme to try to get her three-fingered robot to grasp objects in new ways.

"Why all this enthusiasm for training?"

"Well, everyone knows about industrial robots used on production lines to pick up and assemble parts", says Schjølberg. "They are pre-programmed and relatively inflexible, and carry out repeated and identical movements of specialised graspers adapted to the parts in question", she says.

"So you are developing something new?"

"We can see that industries encounter major problems every time a new part is brought in and has to be handled on the production line", she says. "The replacement of graspers and the robot's guidance programme is a complex process, and we want to make this simpler. We want to be able to programme robots more intuitively and not just in the traditional way using a panel with buttons pressed by an operator.

"We want you to move over here"

Signe Moe's task has thus been to find out how a robot can be trained to imitate human movements. She has solved this using a system by which she guides the robot using a Kinect camera of the type used in games technology.

"Now it's possible for anyone to guide the robot", says Moe. "Not long ago some 6th grade pupils visited us here at the robotics hall. They were all used to playing video games, so they had no problems in guiding the robot", she says.

To demonstrate, she stands about a metre and a half in front of the camera. "Firstly, I hold up my right hand and make a click in the air. This causes the camera to register me and trace the movements of my hand", says Moe. "Now, when I move my hand up and to the right, you can see that the robot imitates my movements", she says.

"It looks simple enough, but what happens if....?

"The Kinect camera has built-in algorithms which can trace the movements of my hand", she says. "All we have to do is to transpose these data to define the position we want the robot to assume, and set up a communications system between the sensors in the camera and the robot", she explains. "In this way the robot receives a reference along the lines of 'we want you to move over here', and an in-built regulator then computes how it can achieve the movement and how much electricity the motor requires to carry the movement out" says Moe.

New learning using camera images and sensors

Ingrid Schjølberg is demonstrating her three-fingered robotic grasper. Teaching robots new ways of grasping will greatly benefit the manufacturing industry, and this is why researchers are testing out new approaches.

"We are combining sensors in the robotic hand with Kinect images to identify the part which has to be picked up and handled", says Schjølberg. "In this way the robot can teach itself the best ways of adapting its grasping action", she says. "It is trying out different grips in just the same way as we humans do when picking up an unfamiliar object. We've developed some pre-determined criteria for what defines a good and bad grip", she explains. "The robot is testing out several different grips, and is praised or scolded for each attempt by means of a points score", smiles Schjølberg.

"Has this method been put into practice?"

"This system has not yet been industrialised, but we have it up and running here at the robotics hall", says Schjølberg. "The next step might be to install the robot on the premises of one of the project's industry partners", she says.

The project "Next Generation Robotics for Norwegian Industry" incorporates all relevant aspects of activities being carried out by our industry partners in the project – Statoil, Hydro, Glen Dimplex and Haag – who are all taking an active part.

"They are funding the work, but also involve themselves actively by providing case examples and problems", says Schjølberg. "During the remainder of the project term, we will be working to make both the software and the system more robust", she says.

Provided by SINTEF