Scientists develop computer vision framework to track animals in the wild without markers

Researchers from the Cluster of Excellence Collective Behavior have developed a computer vision framework for posture estimation and identity tracking that they can use in indoor environments as well as in the wild. This is an important step toward the markerless tracking of animals in the wild using computer vision and machine learning.

Two pigeons are pecking grains in a park in Konstanz. A third pigeon flies in. There are four cameras in the immediate vicinity. Doctoral students Alex Chan and Urs Waldmann from the Cluster of Excellence Collective Behavior at the University of Konstanz are filming the scene. After an hour, they return with the footage to their office to analyze it with a computer vision framework for posture estimation and identity tracking.

The framework detects every pigeon and draws a bounding box around it. It records central body parts and determines each bird's posture, its position, and its interactions with the other pigeons around it. All of this happens without attaching markers to the pigeons and without any human intervention. This would not have been possible just a few years ago.

3D-MuPPET framework

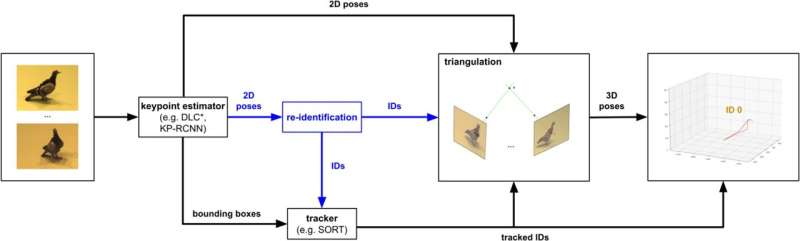

Markerless methods for animal posture tracking have developed rapidly in recent years, but frameworks and benchmarks for tracking large animal groups in 3D are still lacking. To close this gap, Urs Waldmann from the Cluster of Excellence Collective Behavior at the University of Konstanz, Alex Chan from the Max Planck Institute of Animal Behavior, and their colleagues present 3D-MuPPET, a framework to estimate and track 3D poses of up to 10 pigeons at interactive speed using multiple camera views.

The research was recently published in the International Journal of Computer Vision.

3D-MuPPET, which stands for 3D Multi-Pigeon Pose Estimation and Tracking, is a computer vision framework for posture estimation and identity tracking of up to 10 individual pigeons from four camera views, based on data collected both in captive environments and in the wild.

"We trained a 2D keypoint detector and triangulated points into 3D, and also show that models trained on single pigeon data work well with multi-pigeon data," explains Waldmann. This is a first example of 3D animal posture tracking for an entire group of up to 10 individuals.
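To make the triangulation step concrete, here is a minimal sketch of how one keypoint seen by several calibrated cameras can be lifted into 3D. It is an illustration under stated assumptions, not the 3D-MuPPET implementation: the article does not say which triangulation method the authors use, and the function name `triangulate_rays` and its inputs are hypothetical. The sketch assumes each 2D detection has already been converted, via the camera calibration, into a viewing ray (camera center plus direction), and then finds the 3D point closest to all rays in the least-squares sense.

```python
import math


def _normalize(v):
    """Return v scaled to unit length."""
    n = math.sqrt(sum(c * c for c in v))
    return [c / n for c in v]


def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def triangulate_rays(origins, directions):
    """Least-squares 3D point closest to a set of camera rays.

    origins:    camera centers, one (x, y, z) per view (assumed known
                from calibration).
    directions: ray directions through the detected 2D keypoint, one per
                view (assumed already derived from the calibration).

    Solves  sum_i (I - d_i d_i^T) x = sum_i (I - d_i d_i^T) o_i.
    """
    A = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for o, d in zip(origins, directions):
        d = _normalize(d)
        for i in range(3):
            for j in range(3):
                # Entry (i, j) of the projector orthogonal to the ray.
                p = (1.0 if i == j else 0.0) - d[i] * d[j]
                A[i][j] += p
                b[i] += p * o[j]

    det = _det3(A)
    if abs(det) < 1e-12:
        raise ValueError("rays are (nearly) parallel; cannot triangulate")

    # Cramer's rule is sufficient for a 3x3 system.
    point = []
    for k in range(3):
        Ak = [row[:] for row in A]
        for i in range(3):
            Ak[i][k] = b[i]
        point.append(_det3(Ak) / det)
    return point


# Hypothetical four-camera setup looking at one keypoint.
cameras = [(0.0, 0.0, 0.0), (5.0, 0.0, 0.0), (0.0, 5.0, 0.0), (5.0, 5.0, 1.0)]
keypoint = (1.0, 2.0, 3.0)
rays = [[k - c for k, c in zip(keypoint, cam)] for cam in cameras]
estimate = triangulate_rays(cameras, rays)
```

With noise-free rays, `estimate` recovers the keypoint up to floating-point error. With real detections the rays no longer intersect exactly, and the least-squares point is a compromise between the views. Repeating this for every keypoint of every bird would give one 3D pose per pigeon.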

Thus, the new framework provides a concrete method for biologists to create experiments and measure animal posture for automatic behavioral analysis. "This framework is an important milestone in animal posture tracking and automatic behavioral analysis," says Chan.

Framework can be used in the wild

In addition to tracking pigeons indoors, the framework has also been extended to pigeons in the wild. "Using a model that can identify the outline of any object in an image called the Segment Anything Model, we further trained a 2D keypoint detector with a masked pigeon from the captive data, then applied the model to pigeon videos outdoors without any extra model finetuning," states Chan.

3D-MuPPET presents one of the first case studies on how to transition from tracking animals in captivity to tracking animals in the wild, allowing fine-scale behaviors of animals to be measured in their natural habitats. The methods could be applied to other species in future work, with possible uses in large-scale collective behavior research and non-invasive species monitoring.

3D-MuPPET offers a powerful and flexible framework for researchers who would like to use 3D posture reconstruction of multiple individuals to study collective behavior in any environment or species. As long as a multi-camera setup and a 2D posture estimator are available, the framework can be applied to track the 3D postures of any animal.

More information: Urs Waldmann et al, 3D-MuPPET: 3D Multi-Pigeon Pose Estimation and Tracking, International Journal of Computer Vision (2024). DOI: 10.1007/s11263-024-02074-y

Provided by University of Konstanz