Scientists can tell where a mouse is located and which way it is facing based on its neural activity

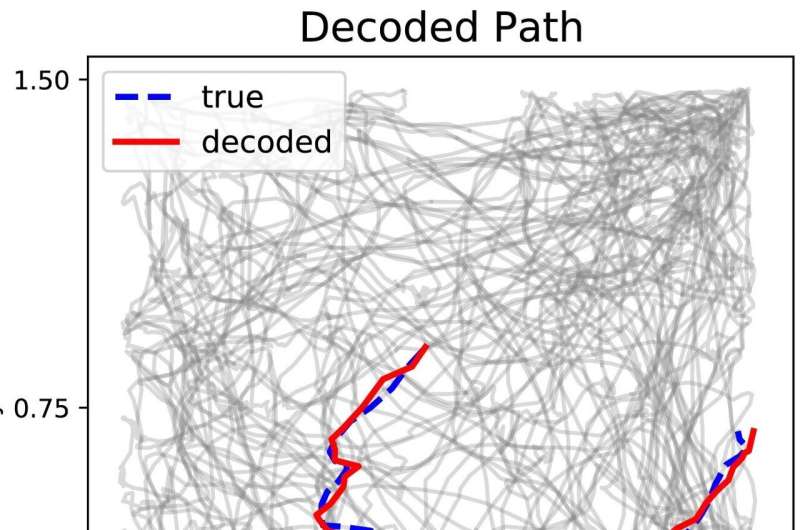

Researchers have paired a deep learning model with experimental data to "decode" mouse neural activity. Using the method, they can accurately determine where a mouse is located within an open environment and which direction it is facing just by looking at its neural firing patterns.

Being able to decode neural activity could provide insight into the function and behavior of individual neurons or even entire brain regions. These findings, published February 22 in Biophysical Journal, could also inform the design of intelligent machines that currently struggle to navigate autonomously.

In collaboration with researchers at the US Army Research Laboratory, senior author Vasileios Maroulas' team used a deep learning model to investigate two types of neurons that are involved in navigation: "head direction" neurons, which encode information about which direction the animal is facing, and "grid cells," which encode two-dimensional information about the animal's location within its spatial environment.
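To make the idea of neurons "encoding" a direction concrete, here is a minimal sketch of a classical population-vector decoder for head direction. It is not the study's method, which is a topological deep learning framework. It only illustrates how a heading can be read out from firing rates, assuming each cell fires most strongly near one preferred direction. The tuning-curve shape, the parameters `peak_rate` and `kappa`, and all function names are hypothetical choices made for illustration.

```python
import math


def tuning_rate(preferred_deg, heading_deg, peak_rate=20.0, kappa=4.0):
    """Hypothetical tuning curve: the rate peaks when heading equals the preferred direction."""
    delta = math.radians(heading_deg - preferred_deg)
    return peak_rate * math.exp(kappa * (math.cos(delta) - 1.0))


def decode_heading(preferred_degs, rates):
    """Population-vector decode: rate-weighted circular mean of the preferred directions."""
    x = sum(r * math.cos(math.radians(p)) for p, r in zip(preferred_degs, rates))
    y = sum(r * math.sin(math.radians(p)) for p, r in zip(preferred_degs, rates))
    if x == 0.0 and y == 0.0:
        raise ValueError("population vector has zero length; heading is undefined")
    return math.degrees(math.atan2(y, x)) % 360.0


# Assumed toy population: 12 cells with preferred directions spaced 30 degrees apart.
preferred = [i * 30.0 for i in range(12)]
true_heading = 75.0

# Simulated, noise-free firing rates for a mouse facing 75 degrees.
rates = [tuning_rate(p, true_heading) for p in preferred]

estimate = decode_heading(preferred, rates)
print(f"true heading: {true_heading:.1f} deg, decoded: {estimate:.1f} deg")
```

With noise-free rates and evenly spaced preferred directions, the decoded angle lands very close to the true heading. Real recordings are noisy and unevenly sampled, which is part of why more expressive models are of interest.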

"Current intelligence systems have proved to be excellent at pattern recognition, but when it comes to navigation, these same so-called intelligence systems don't perform very well without GPS coordinates or something else to guide the process," says Maroulas, a mathematician at the University of Tennessee, Knoxville.

"I think the next step forward for artificial intelligence systems is to integrate biological information with existing machine-learning methods."

Unlike previous studies of grid cell behavior, which relied on simulated data, this study based its method on experimental data.

The data came from a previous study and consisted of neural firing patterns recorded via internal probes, paired with "ground-truthing" video footage of each mouse's actual location, head position, and movements as it explored an open environment. The analysis involved integrating activity patterns across groups of head direction and grid cells.

"Understanding and representing these neural structures requires mathematical models that describe higher-order connectivity—meaning, I don't want to understand how one neuron activates another neuron, but rather, I want to understand how groups and teams of neurons behave," says Maroulas.

Using the new method, the researchers were able to predict mouse location and head direction with greater accuracy than previously described methods. Next, they plan to incorporate information from other types of neurons that are involved in navigation and to analyze more complex patterns.

Ultimately, the researchers hope their method will inform the design of intelligent machines that can find their way through unfamiliar environments. "The end goal is to harness this information to develop a machine-learning architecture that would be able to successfully navigate unknown terrain autonomously and without GPS or satellite guidance," says Maroulas.

More information: A Topological Deep Learning Framework for Neural Spike Decoding, Biophysical Journal (2024). DOI: 10.1016/j.bpj.2024.01.025

Journal information: Biophysical Journal

Provided by Cell Press