This article has been reviewed according to Science X's editorial process and policies. Editors have highlighted the following attributes while ensuring the content's credibility: fact-checked, trusted source, proofread.

Novel 'registration' method identifies plant traits in close-up photos

Modern cameras and sensors, together with image processing algorithms and artificial intelligence (AI), are ushering in a new era of precision agriculture and plant breeding. In the near future, farmers and scientists will be able to quantify various plant traits by simply pointing special imaging devices at plants.

However, some obstacles must be overcome before this vision becomes a reality. A major one is combining image data of the same plant gathered by multiple image sensors, an approach known as 'multispectral' or 'multimodal' imaging. Different sensors are optimized for different wavelength ranges, so each provides different information about the plant. Unfortunately, the process of aligning plant images acquired by multiple sensors, called 'registration,' can be notoriously complex.

Registration is even more complex for close-range, three-dimensional (3D) multispectral images of plants. To align close-up images taken by different cameras properly, registration algorithms must compensate for geometric distortions. In addition, registration of close-range images is more susceptible to errors caused by uneven illumination, which is common in dense canopies because of leaf shadows and the reflection and scattering of light.

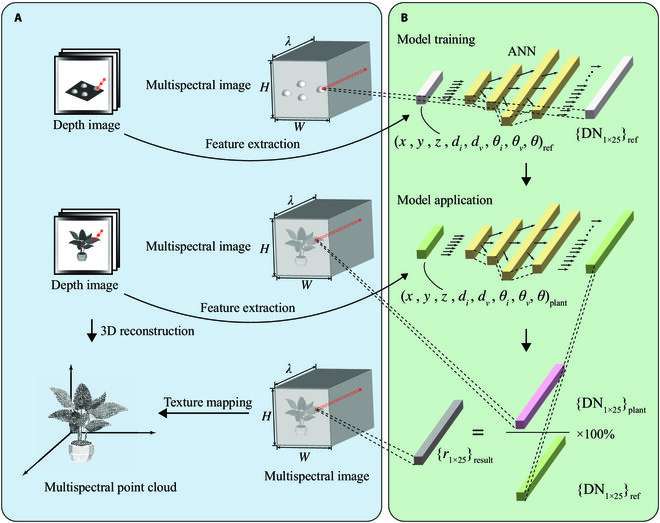

Against this backdrop, a research team including Professor Haiyan Cen from Zhejiang University, China, recently proposed a new approach for generating high-quality plant point clouds by fusing depth images with snapshot spectral images. As explained in their paper, published in Plant Phenomics, the researchers combined a three-step image registration process with a novel AI-based technique that corrects for illumination effects.
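To make the fusion idea concrete, here is a minimal sketch of how a depth image can be turned into a point cloud in which every point also carries spectral values. It assumes a simple pinhole camera model and assumes the spectral image has already been registered to the depth image's pixel grid; the function name and the intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical and are not taken from the paper.

```python
import numpy as np

def depth_to_point_cloud(depth, spectral, fx, fy, cx, cy):
    """Back-project a depth image to 3D points and attach per-pixel spectra.

    depth:    (H, W) depths in metres; 0 marks pixels with no reading
    spectral: (H, W, B) band values, ASSUMED already registered to the
              depth image's pixel grid
    fx, fy, cx, cy: hypothetical pinhole intrinsics of the depth camera
    Returns an (N, 3 + B) array of rows [x, y, z, band_1, ..., band_B].
    """
    h, w = depth.shape
    v, u = np.mgrid[0:h, 0:w]            # v = row index, u = column index
    valid = depth > 0                    # drop pixels without depth
    z = depth[valid]
    x = (u[valid] - cx) * z / fx         # invert u = fx * x / z + cx
    y = (v[valid] - cy) * z / fy         # invert v = fy * y / z + cy
    xyz = np.stack([x, y, z], axis=1)
    return np.hstack([xyz, spectral[valid]])

# Usage with toy data: a 4x4 depth map with one missing pixel and 5 bands.
depth = np.full((4, 4), 0.5)
depth[0, 0] = 0.0
spectral = np.random.default_rng(0).random((4, 4, 5))
cloud = depth_to_point_cloud(depth, spectral, fx=600.0, fy=600.0, cx=2.0, cy=2.0)
print(cloud.shape)  # (15, 8)
```

The hard part in practice is the assumption in the docstring: getting the spectral pixels to line up with the depth pixels is exactly the registration problem the article describes.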

Prof. Cen explains, "Our study shows that it is promising to use stereo references to correct plant spectra and generate high-precision, 3D, multispectral point clouds of plants."

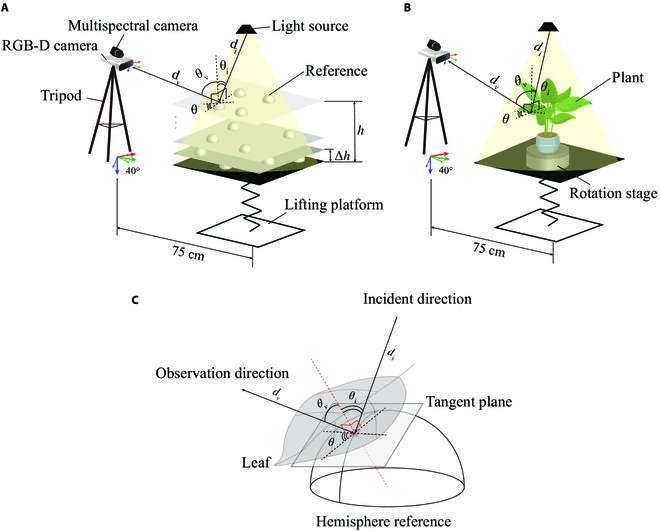

The experimental setup consisted of a lifting platform holding a rotating stage at a preset distance from two tripod-mounted cameras: an RGB-depth (RGB-D) camera, where RGB stands for red, green, and blue, and a snapshot multispectral camera. In each experiment, the researchers placed a plant on the stage, rotated it, and photographed it from 15 different angles.
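Because the plant is photographed from 15 angles on a rotating stage, the per-view point clouds must be brought into one common coordinate frame. The sketch below shows the simplest version of that step. It assumes the 15 views are evenly spaced (24° apart), that the stage's rotation axis is parallel to the camera's y axis, and that a point on that axis is known from calibration; none of these details come from the paper, and real data would still need the residual misalignment refined.

```python
import numpy as np

def rotation_about_y(theta):
    """3x3 rotation matrix for an angle theta (radians) about the y axis."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, 0.0, s],
                     [0.0, 1.0, 0.0],
                     [-s, 0.0, c]])

def merge_turntable_views(views, axis_point, n_views=15):
    """Bring point clouds captured on a rotating stage into one frame.

    views:      list of (N_k, 3) arrays; views[k] was captured after the
                stage turned k * 360 / n_views degrees (ASSUMED even spacing)
    axis_point: (3,) point on the rotation axis in camera coordinates
                (ASSUMED known; axis ASSUMED parallel to the y axis)
    """
    merged = []
    for k, pts in enumerate(views):
        theta = 2.0 * np.pi * k / n_views
        # Undo the stage rotation: move the axis to the origin,
        # rotate back by -theta, then move the axis back.
        r_inv = rotation_about_y(-theta)
        merged.append((pts - axis_point) @ r_inv.T + axis_point)
    return np.vstack(merged)
```

Undoing a known rotation is cheap and deterministic, which is one reason turntable setups are popular for close-range plant scanning.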

They also took images of a flat surface containing Teflon hemispheres at various positions. The images of these hemispheres served as reference data for a reflectance correction method, which the team implemented using an artificial neural network.
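One way to picture how reference hemispheres can drive a reflectance correction: a hemisphere presents surfaces at many angles, so its images show how much the measured signal changes with local geometry while the true reflectance stays the same. The sketch below fits that relationship on reference points and divides it out of plant measurements. The input features (cosine of the incidence angle and distance) are hypothetical choices, and a plain least-squares polynomial stands in for the artificial neural network the team actually used.

```python
import numpy as np

def geometry_features(cos_incidence, distance):
    """Quadratic polynomial features of local geometry (hypothetical inputs)."""
    c, d = np.asarray(cos_incidence, float), np.asarray(distance, float)
    return np.stack([np.ones_like(c), c, d, c * c, c * d, d * d], axis=1)

def fit_correction(cos_incidence, distance, measured, true_reflectance):
    """Fit a model of (measured / true reflectance) as a function of geometry.

    Training samples come from reference points on the hemispheres, whose
    true reflectance is ASSUMED known and uniform. Least squares is a
    stand-in here for the paper's neural network.
    """
    target = measured / true_reflectance      # geometric distortion factor
    feats = geometry_features(cos_incidence, distance)
    coef, *_ = np.linalg.lstsq(feats, target, rcond=None)
    return coef

def correct(measured, cos_incidence, distance, coef):
    """Divide the predicted geometric distortion out of plant measurements."""
    factor = geometry_features(cos_incidence, distance) @ coef
    return measured / factor
```

A neural network plays the same role as `fit_correction` here but can capture more complicated, nonlinear dependence on geometry than a fixed polynomial.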

For registration, the team first used image processing to segment the plant from the background, remove noise, and balance brightness. They then performed coarse registration using Speeded-Up Robust Features (SURF), a method that detects distinctive image features that are largely unaffected by changes in scale, illumination, and rotation.
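SURF matching yields pairs of corresponding keypoints in the two images, and a coarse registration then fits one global transform to those pairs. The sketch below shows only that fitting step. It assumes the matches are already available and reasonably clean, and it assumes an affine transform model; both the model choice and the function names are illustrative rather than taken from the paper. Robust pipelines usually also reject outlier matches, for example with RANSAC.

```python
import numpy as np

def estimate_affine(src, dst):
    """Least-squares 2D affine transform mapping src points onto dst points.

    src, dst: (N, 2) arrays of matched keypoint coordinates (N >= 3), e.g.
              from SURF descriptor matching; matches ASSUMED mostly correct.
    Returns a 2x3 matrix A such that dst ~= A @ [x, y, 1].
    """
    n = src.shape[0]
    design = np.hstack([src, np.ones((n, 1))])     # rows [x, y, 1]
    # Solve design @ X = dst for X (3x2); A is its transpose.
    x, *_ = np.linalg.lstsq(design, dst, rcond=None)
    return x.T

def apply_affine(a, pts):
    """Map (N, 2) points through a 2x3 affine matrix."""
    return pts @ a[:, :2].T + a[:, 2]
```

A global transform like this cannot capture local, non-rigid differences between the two views, which is why a fine registration step follows.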

Finally, the researchers performed fine registration using a method known as "Demons." Rather than applying a single global transform, this approach estimates a pixel-by-pixel displacement field that optimally 'deforms' one image to match the other.
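For readers curious how Demons works, here is a compact, numpy-only sketch of the classic Thirion-style update: each pixel is pushed along the fixed image's gradient in proportion to the intensity mismatch, and the displacement field is then smoothed with a Gaussian. The parameter values, function names, and this particular Demons variant are assumptions for illustration; the paper's implementation may differ.

```python
import numpy as np

def gaussian_smooth(field, sigma):
    """Separable Gaussian blur of a 2D array, with edge padding."""
    radius = max(1, int(3 * sigma))
    t = np.arange(-radius, radius + 1)
    kernel = np.exp(-t**2 / (2.0 * sigma**2))
    kernel /= kernel.sum()
    padded = np.pad(field, radius, mode="edge")
    # Blur along rows, then columns; "valid" mode restores the original shape.
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")

def warp(image, uy, ux):
    """Sample image at (row + uy, col + ux) using bilinear interpolation."""
    h, w = image.shape
    rows, cols = np.mgrid[0:h, 0:w].astype(float)
    y = np.clip(rows + uy, 0, h - 1)
    x = np.clip(cols + ux, 0, w - 1)
    y0, x0 = np.floor(y).astype(int), np.floor(x).astype(int)
    y1, x1 = np.minimum(y0 + 1, h - 1), np.minimum(x0 + 1, w - 1)
    wy, wx = y - y0, x - x0
    top = image[y0, x0] * (1 - wx) + image[y0, x1] * wx
    bottom = image[y1, x0] * (1 - wx) + image[y1, x1] * wx
    return top * (1 - wy) + bottom * wy

def demons(fixed, moving, iterations=100, sigma=1.5):
    """Estimate a displacement field (uy, ux) so warp(moving, uy, ux) ~= fixed."""
    uy = np.zeros_like(fixed, dtype=float)
    ux = np.zeros_like(fixed, dtype=float)
    gy, gx = np.gradient(fixed.astype(float))
    for _ in range(iterations):
        diff = warp(moving, uy, ux) - fixed
        # Thirion's demons force: mismatch times fixed-image gradient,
        # normalised so each step stays bounded even where gradients are small.
        denom = gx**2 + gy**2 + diff**2
        denom = np.where(denom < 1e-12, np.inf, denom)
        uy = gaussian_smooth(uy - diff * gy / denom, sigma)
        ux = gaussian_smooth(ux - diff * gx / denom, sigma)
    return uy, ux
```

The Gaussian smoothing acts as a regularizer: it keeps neighboring pixels moving together, so the estimated deformation stays smooth instead of fitting noise.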

These experiments showed that the proposed registration method significantly outperformed conventional approaches. The proposed reflectance correction technique also worked well, although Prof. Cen notes the conditions under which it is most suitable: "We recommended using our correction method for plants in growth stages with low canopy structural complexity and flattened and broad leaves." The study also identified several areas where the approach could be improved further.

Satisfied with the results, Prof. Cen concludes, "Overall, our method can be used to obtain accurate, 3D, multispectral point cloud model of plants in a controlled environment. The models can be generated successively without varying the illumination condition."

In the future, techniques such as this one will help scientists, farmers, and plant breeders easily integrate data from different cameras into one consistent format. This could not only help them visualize important plant traits, but also feed these data to emerging AI-based software to simplify or even fully automate analyses.

More information: Pengyao Xie et al, Generating 3D Multispectral Point Clouds of Plants with Fusion of Snapshot Spectral and RGB-D Images, Plant Phenomics (2023). DOI: 10.34133/plantphenomics.0040

Provided by NanJing Agricultural University