
New motion blur restoration approach for improved weed detection in crop fields

Effective weed control is crucial in agriculture to ensure high crop productivity. It requires carefully separating weeds from crops before herbicides are sprayed in the fields. In simple terms, the goal of weed control is to remove the weeds while ensuring that the crops are not harmed. Traditional weed control methods have several drawbacks, such as crop contamination, herbicide waste, and poor accuracy. It is therefore essential to develop methods that can precisely locate crops and weeds, identify the boundary between them, and enable better weed control.

Farming robots equipped with 'semantic segmentation', a deep learning technique that assigns a class label (for example, crop, weed, or background) to every pixel of a captured image, may offer a solution to this problem. Such robots can automatically distinguish weeds from crops and make herbicide spraying more efficient. However, vibration of the robot, or movement of the crops and weeds themselves, while the camera is capturing an image can produce 'motion blur'.
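Motion blur is commonly modeled as the sharp image convolved with a blur kernel that traces the path of the camera or object during the exposure. The sketch below assumes the simplest case, a straight horizontal or vertical motion of fixed length, on a grayscale image, and uses only NumPy. The function names are hypothetical, and this is an illustration of the general blur model, not how the study generated or handled its blurred images.

```python
import numpy as np


def linear_motion_kernel(length: int, horizontal: bool = True) -> np.ndarray:
    """Build a normalized straight-line blur kernel (hypothetical helper).

    `length` must be a positive odd integer so the kernel has a center pixel.
    """
    if length < 1 or length % 2 == 0:
        raise ValueError("length must be a positive odd integer")
    kernel = np.zeros((length, length), dtype=np.float64)
    center = length // 2
    if horizontal:
        kernel[center, :] = 1.0  # motion along the x axis
    else:
        kernel[:, center] = 1.0  # motion along the y axis
    return kernel / kernel.sum()  # weights sum to 1, so brightness is preserved


def apply_blur(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Convolve a 2-D grayscale image with `kernel`, keeping the same size.

    Borders are handled by repeating edge pixels (edge padding).
    """
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(image.astype(np.float64), ((ph, ph), (pw, pw)), mode="edge")
    out = np.zeros(image.shape, dtype=np.float64)
    flipped = kernel[::-1, ::-1]  # true convolution flips the kernel
    h, w = image.shape
    for i in range(kh):
        for j in range(kw):
            # Accumulate each kernel tap times the correspondingly shifted image.
            out += flipped[i, j] * padded[i:i + h, j:j + w]
    return out
```

For example, a thin vertical stripe blurred with a 3-pixel horizontal kernel is smeared across three columns at one third of its original intensity, which is the kind of detail loss that makes thin weed leaves hard to segment.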

In a new study, a research team led by Professor Kang Ryoung Park from Dongguk University, Korea, has proposed a method that restores motion-blurred images and improves crop and weed segmentation. The authors describe it as the first study to consider motion blur in crop and weed segmentation. The findings were published in Plant Phenomics.

Sharing the motivation behind their study, Prof. Park states, "Motion blur severely degrades the quality of the captured crop and weed images, reducing the accuracy of high-level vision tasks. This study proposes a method that restores the motion-blurred images to perform crop and weed segmentation, making this the first study on crop and weed segmentation considering motion blur."

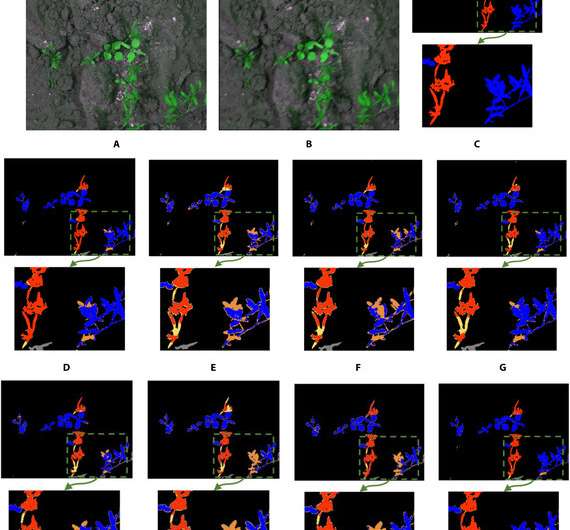

The team proposed the "wide receptive field attention network" (WRA-Net), a deep learning model that restores motion-blurred images and improves their quality for further processing. After restoration, U-Net, a semantic segmentation network, was used to separate crops from weeds.

WRA-Net consists of an encoder, which extracts useful features from the blurred image, and a decoder, which uses those features to reconstruct a sharper image. The encoder's primary function is to provide the decoder with enriched image features so that it can refine the image and improve its quality. For effective restoration, the proposed method divides the input image into patches instead of processing the entire image at once.
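The idea of working on patches rather than the whole image can be illustrated with plain array reshaping. This is a minimal sketch assuming non-overlapping square patches, a channel-last (height, width, channels) layout, and image sides divisible by the patch size; the function names and patch size are illustrative assumptions, not WRA-Net's actual configuration.

```python
import numpy as np


def to_patches(image: np.ndarray, patch: int) -> np.ndarray:
    """Split an (H, W, C) image into non-overlapping patch x patch tiles.

    Returns an array of shape (N, patch, patch, C), ordered row by row.
    """
    h, w, c = image.shape
    if h % patch or w % patch:
        raise ValueError("image height and width must be divisible by patch")
    # (rows of tiles, tile row, cols of tiles, tile col, channel)
    tiles = image.reshape(h // patch, patch, w // patch, patch, c)
    # Bring the two tile-grid axes together, then flatten them into N.
    return tiles.transpose(0, 2, 1, 3, 4).reshape(-1, patch, patch, c)


def from_patches(tiles: np.ndarray, h: int, w: int) -> np.ndarray:
    """Inverse of to_patches: stitch tiles back into an (H, W, C) image."""
    _, patch, _, c = tiles.shape
    grid = tiles.reshape(h // patch, w // patch, patch, patch, c)
    return grid.transpose(0, 2, 1, 3, 4).reshape(h, w, c)
```

In a patch-based pipeline, each tile (or a batch of tiles) would be passed through the restoration network and the outputs stitched back with `from_patches`. Real systems often use overlapping patches and blend the seams; that refinement is omitted here.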

The group tested WRA-Net on three publicly available databases: BoniRob, the crop/weed field image dataset (CWFID), and the rice seedling and weed dataset. They evaluated restoration quality by measuring how similar the restored images were to the original, unblurred images. Semantic segmentation performance was measured using mIoU (mean intersection over union), a standard segmentation metric that, for each class, divides the overlap between the predicted and ground-truth regions by their combined area and then averages the result over all classes.
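Both evaluation steps can be sketched in a few lines of NumPy. The mIoU function follows the definition above for a single pair of label maps; published results usually accumulate counts over an entire test set, and conventions for classes absent from both maps vary (here they are skipped). The article does not name the similarity measure used for restoration, so peak signal-to-noise ratio (PSNR), a common choice, is used here as an assumption. Function names and the label encoding (0 = background, 1 = crop, 2 = weed) are hypothetical.

```python
import numpy as np


def mean_iou(pred: np.ndarray, target: np.ndarray, num_classes: int) -> float:
    """Mean intersection over union between two integer label maps."""
    ious = []
    for cls in range(num_classes):
        p = pred == cls
        t = target == cls
        union = np.logical_or(p, t).sum()
        if union == 0:
            continue  # class absent from both maps: skip it
        inter = np.logical_and(p, t).sum()
        ious.append(inter / union)
    if not ious:
        raise ValueError("no class from range(num_classes) is present")
    return float(np.mean(ious))


def psnr(restored: np.ndarray, reference: np.ndarray, max_val: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB; higher means closer to the reference."""
    diff = restored.astype(np.float64) - reference.astype(np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))


# Usage: 0 = background, 1 = crop, 2 = weed (hypothetical encoding).
target = np.array([[0, 0, 1, 1], [0, 2, 2, 1]])
pred = np.array([[0, 0, 1, 1], [0, 2, 1, 1]])
score = mean_iou(pred, target, num_classes=3)  # (1.0 + 0.75 + 0.5) / 3
```

In this toy example the background is predicted perfectly (IoU 1.0), one weed pixel is mislabeled as crop (crop IoU 3/4, weed IoU 1/2), and the mean is 0.75.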

Across all measured metrics, WRA-Net outperformed the other restoration methods it was compared with. It effectively restored motion-blurred images, and segmentation performed on its restored images was more accurate than with state-of-the-art methods.

The mIoUs on test images restored by WRA-Net were 0.7741, 0.7444, and 0.7749 for the CWFID, BoniRob, and rice seedling and weed databases, respectively. WRA-Net also performed well on an embedded system (a computer processor, memory, and input/output peripherals combined in a single device) with limited computing resources.

So, what's next for the team? "In future studies, we would research about the method using preprocessing to reduce the errors caused by high similarity of crop and weed and thin area of object. In addition, we would research about the feature fusion that can obtain high semantic segmentation results directly from the motion-blurred image without performing two steps of restoration and semantic segmentation," adds Prof. Park.

Food security is becoming increasingly difficult to achieve, owing to labor shortages and extreme weather. Methods such as this one could help address these challenges and increase crop productivity.

More information: Chaeyeong Yun et al, WRA-Net: Wide Receptive Field Attention Network for Motion Deblurring in Crop and Weed Image, Plant Phenomics (2023). DOI: 10.34133/plantphenomics.0031

Provided by NanJing Agricultural University