Making entropy production work

While Rolf Landauer was working at IBM in the early 1960s, he had a startling insight about how heat, entropy, and information were connected. Landauer realized that manipulating information releases heat and increases entropy, or the disorder of the environment. He used this to calculate a theoretical lower limit for heat released from a computation, such as erasing a bit. At room temperature, the limit is about 10⁻²¹ joules, or one billionth of a trillionth of a joule. (A joule is about the energy required to lift an apple by a meter.)
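
As a rough check on that figure, the limit for erasing one bit is usually written as k_B T ln 2, where k_B is Boltzmann's constant and T is the temperature of the environment. The short Python sketch below evaluates it; the choice of 300 K for room temperature and the function name are assumptions made for illustration, not details from the paper.

```python
import math

# Boltzmann constant in joules per kelvin (exact in the 2019 SI definition).
BOLTZMANN_J_PER_K = 1.380649e-23


def landauer_limit_joules(temperature_kelvin: float) -> float:
    """Minimum heat released when one bit is erased at this temperature."""
    return BOLTZMANN_J_PER_K * temperature_kelvin * math.log(2)


# Assumption: "room temperature" is taken to be 300 K.
room_temperature_limit = landauer_limit_joules(300.0)
print(f"{room_temperature_limit:.2e} J")
```

At 300 K this comes to a little under 3 × 10⁻²¹ joules, which matches the order of magnitude quoted above.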

But Landauer's limit is, ironically, limited because of how general it is. It allows for unrealistic scenarios, like computations that take place over infinite time, and is millions of times lower than the inefficiencies in typical computers. "It's got nothing to do with the real world. If you want to look at what's important in the brain and cells, or in a laptop ... Landauer's bound is just not relevant," says physicist David Wolpert, a professor at SFI.

In a new paper published in Physical Review X, Wolpert and first author Artemy Kolchinsky explore more realistic bounds on entropy production by considering how constraints affect Landauer's limit. Their approach to understanding how much work can be extracted from a physical system could lead to a better understanding of the thermodynamic efficiency of various real-world systems, ranging from biomolecular machines to recently developed "information engines" that use information as fuel.

A constraint is simply a way in which a system can't be manipulated. Kolchinsky gives the example of systems such as Lego bricks, which are constrained because they're modular: you can only assemble and disassemble them in certain ways. Other systems have indistinguishable components, like particles in a gas. "Let's imagine certain operations are not available to you," Kolchinsky says. "What happens then? Is there a better bound?" Landauer's question, reformulated, is then: What is the minimal amount of entropy a constrained system can produce?

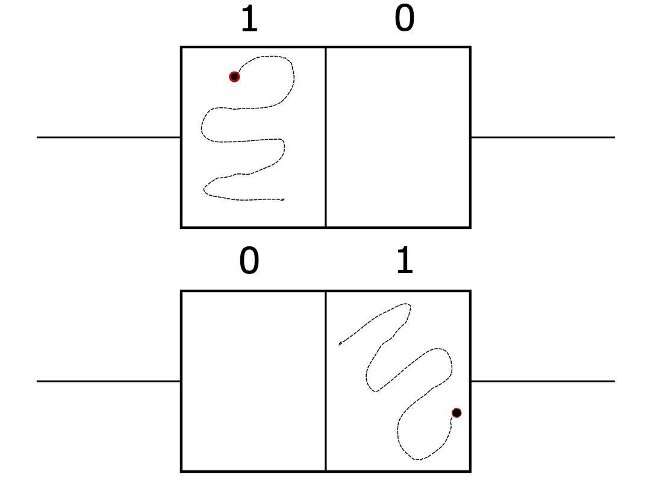

To grasp how entropy production works under constraints, a thought experiment called Szilard's engine helps. Szilard's engine is a box with a particle in it, split in half by a vertical partition. On each end there are pistons. The position of the particle can be thought of as a bit (0 or 1, depending on which side of the partition it is on).

If you somehow knew which side of the box the particle was on, left or right, you could depress the piston into the empty side. Then you could remove the partition and the particle would bounce off the walls against the piston, pushing it back. This operation extracts energy from the particle, whose whereabouts are now unknown, and increases entropy. In other words, information about the particle's location is destroyed and converted into work on the piston.
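
To put a number on the work done on the piston, the usual textbook idealization treats the single particle as an ideal gas that expands slowly at constant temperature from half the box to the full box. The sketch below integrates pressure over volume numerically under that assumption; the function name, the unit box volume, and the 300 K temperature are illustrative choices, not taken from the paper.

```python
import math

# Boltzmann constant in joules per kelvin (exact in the 2019 SI definition).
BOLTZMANN_J_PER_K = 1.380649e-23


def szilard_work_joules(temperature_kelvin, box_volume=1.0, steps=100_000):
    """Midpoint-rule integral of p dV as the particle pushes the piston back.

    Assumes a one-particle ideal gas (p = k_B * T / V) expanding slowly
    at constant temperature from half the box to the whole box.
    """
    start_volume = box_volume / 2
    dv = (box_volume - start_volume) / steps
    work = 0.0
    for i in range(steps):
        volume = start_volume + (i + 0.5) * dv
        pressure = BOLTZMANN_J_PER_K * temperature_kelvin / volume
        work += pressure * dv
    return work


numeric = szilard_work_joules(300.0)
closed_form = BOLTZMANN_J_PER_K * 300.0 * math.log(2)
print(f"numeric: {numeric:.3e} J, k_B T ln 2: {closed_form:.3e} J")
```

Under these assumptions the extracted work equals k_B T ln 2 whatever the box volume, the same quantity as Landauer's limit for one bit.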

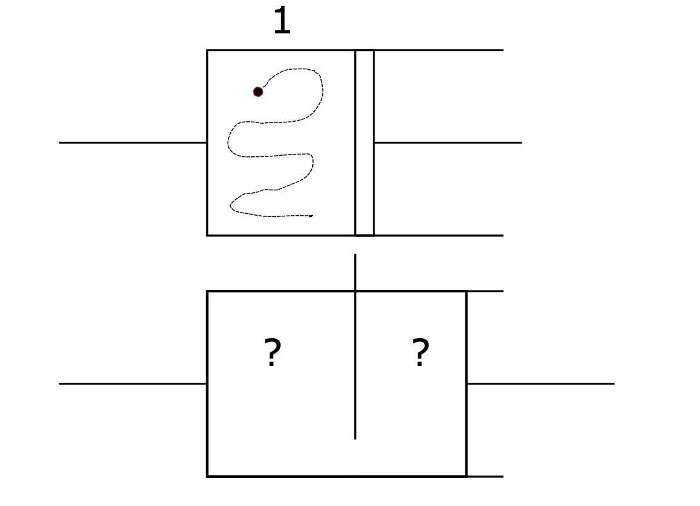

In a classical Szilard engine, the limit for how much entropy is produced is simply Landauer's. But suppose your information was different: for example, you knew that the particle was located in the top half of the box.

"We can derive tighter bounds than Landauer's," says Kolchinsky. Curiously, knowing only that the particle is located in the top half of the box, as opposed to left or right, prevents any energy from being extracted. Vertical information can't be turned into work when your constraint is horizontal motion. Kolchinsky and Wolpert introduce new terms to describe this phenomenon: information is "accessible" when it can be turned into work given the constraints. When information can't be turned into work, because of the misalignment between knowledge and the constraints, it's inaccessible.

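A toy calculation can make the distinction concrete. It is not the formalism used in the paper, only the standard result that an engine with left and right pistons can extract on average at most k_B T (ln 2 − H(p)), where p is the probability, given what you know, that the particle is on the left and H is the binary entropy in nats. The function names and the 300 K default below are hypothetical.

```python
import math

# Boltzmann constant in joules per kelvin (exact in the 2019 SI definition).
BOLTZMANN_J_PER_K = 1.380649e-23


def binary_entropy_nats(p):
    """Shannon entropy, in nats, of a two-outcome distribution (p, 1 - p)."""
    if p in (0.0, 1.0):
        return 0.0
    return -(p * math.log(p) + (1 - p) * math.log(1 - p))


def max_work_from_horizontal_pistons(p_left, temperature_kelvin=300.0):
    """Toy bound on work when only left/right pistons are available.

    p_left is the probability, given your knowledge, that the particle
    is in the left half of the box.
    """
    usable_information = math.log(2) - binary_entropy_nats(p_left)
    return BOLTZMANN_J_PER_K * temperature_kelvin * usable_information


# Knowing "left": the left/right bit is fully known.
print(max_work_from_horizontal_pistons(1.0))
# Knowing only "top half": left and right remain equally likely.
print(max_work_from_horizontal_pistons(0.5))
```

In this sketch, knowing "left" allows the full k_B T ln 2 of work, while knowing only "top half" leaves left and right equally likely and allows essentially none, which is the sense in which vertical information is inaccessible to horizontal pistons.
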
Though their paper looks at several different examples of constraints, Wolpert says this work is only the beginning. These are rudimentary models, and the researchers hope to expand the analysis to more complex systems, such as cells, and calculate entropy production bounds under a wider variety of constraints.

More information: Artemy Kolchinsky et al, Work, Entropy Production, and Thermodynamics of Information under Protocol Constraints, Physical Review X (2021). DOI: 10.1103/PhysRevX.11.041024

Journal information: Physical Review X

Provided by Santa Fe Institute