Brain-inspired synaptic transistor learns while it computes

(Phys.org) —It doesn't take a Watson to realize that even the world's best supercomputers are staggeringly inefficient and energy-intensive machines.

Our brains have upwards of 86 billion neurons, connected by synapses that not only complete myriad logic circuits but also continuously adapt to stimuli, strengthening some connections while weakening others. We call that process learning, and it enables the kind of rapid, highly efficient computational processes that put Siri and Blue Gene to shame.

Materials scientists at the Harvard School of Engineering and Applied Sciences (SEAS) have now created a new type of transistor that mimics the behavior of a synapse. The novel device simultaneously modulates the flow of information in a circuit and physically adapts to changing signals.

Exploiting unusual properties in modern materials, the synaptic transistor could mark the beginning of a new kind of artificial intelligence: one embedded not in smart algorithms but in the very architecture of a computer. The findings appear in Nature Communications.

"There's extraordinary interest in building energy-efficient electronics these days," says principal investigator Shriram Ramanathan, associate professor of materials science at Harvard SEAS. "Historically, people have been focused on speed, but with speed comes the penalty of power dissipation. With electronics becoming more and more powerful and ubiquitous, you could have a huge impact by cutting down the amount of energy they consume."

The human brain, for all its phenomenal computing power, runs on roughly 20 watts of power (less than a household light bulb), so it offers a natural model for engineers.

"The transistor we've demonstrated is really an analog to the synapse in our brains," says co-lead author Jian Shi, a postdoctoral fellow at SEAS. "Each time a neuron initiates an action and another neuron reacts, the synapse between them increases the strength of its connection. And the faster the neurons spike each time, the stronger the synaptic connection. Essentially, it memorizes the action between the neurons."

In principle, a system integrating millions of tiny synaptic transistors and neuron terminals could take parallel computing into a new era of ultra-efficient high performance.

While calcium ions and receptors effect a change in a biological synapse, the artificial version achieves the same plasticity with oxygen ions. When a voltage is applied, these ions slip in and out of the crystal lattice of a very thin (80-nanometer) film of samarium nickelate, which acts as the synapse channel between two platinum "axon" and "dendrite" terminals. The varying concentration of ions in the nickelate raises or lowers its conductance—that is, its ability to carry information on an electrical current—and, just as in a natural synapse, the strength of the connection depends on the time delay in the electrical signal.

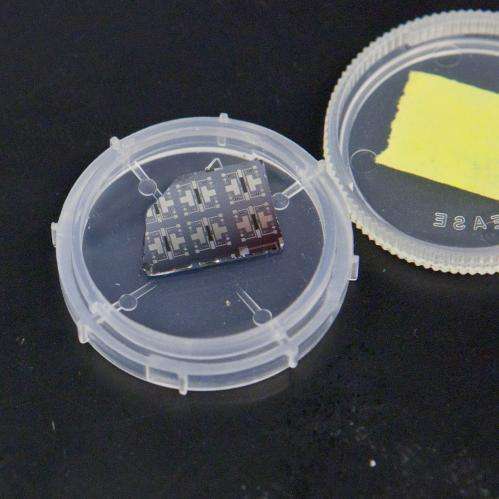

Structurally, the device consists of the nickelate semiconductor sandwiched between two platinum electrodes and adjacent to a small pocket of ionic liquid. An external circuit multiplexer converts the time delay into a voltage magnitude, which it applies to the ionic liquid, creating an electric field that either drives ions into the nickelate or removes them. The entire device, just a few hundred microns long, is embedded in a silicon chip.
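
As a rough illustration of that signal chain, the Python sketch below maps a spike time delay to a gate voltage and then to a conductance change that persists between updates. It is a toy model, not the researchers' method: the exponential timing window, the sign convention (a positive voltage raises conductance), the function names and every constant are assumptions made for the example, and none of the values comes from the paper.

```python
import math

# All constants are hypothetical and chosen only for illustration.
V_MAX = 2.5               # peak gate voltage magnitude (volts)
TAU = 0.020               # width of the timing window (seconds)
GAIN = 0.05               # fractional conductance change per volt, per update
G_MIN, G_MAX = 0.1, 10.0  # conductance bounds (arbitrary units)


def gate_voltage(delay_s: float) -> float:
    """Convert a spike time delay into a signed gate voltage.

    Assumed convention: a positive delay gives a positive voltage,
    and shorter delays give larger magnitudes.
    """
    if delay_s == 0:
        return 0.0
    sign = 1.0 if delay_s > 0 else -1.0
    return sign * V_MAX * math.exp(-abs(delay_s) / TAU)


def update_conductance(g: float, delay_s: float) -> float:
    """Apply one voltage pulse and clamp the result to the allowed range."""
    g_new = g * (1.0 + GAIN * gate_voltage(delay_s))
    return min(G_MAX, max(G_MIN, g_new))


# The conductance carries over from one update to the next, standing in
# for the ion concentration that stays in the film between pulses.
g = 1.0
for delay in (0.005, 0.005, 0.040, -0.010):
    g = update_conductance(g, delay)
    print(f"delay={delay * 1e3:+.0f} ms  conductance={g:.4f}")
```

In this sketch, short positive delays strengthen the connection more than long ones, and a negative delay weakens it, which mirrors the timing dependence described above without claiming to reproduce the device's actual response curve.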

The synaptic transistor offers several immediate advantages over traditional silicon transistors. For a start, it is not restricted to the binary system of ones and zeros.
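
To see why that matters, consider what a purely digital store must do with a continuous connection strength: round it to one of a fixed number of levels. The Python sketch below is only an illustration under assumed values; the `quantize` helper and the example weight are hypothetical and do not describe any real circuit.

```python
def quantize(weight: float, bits: int) -> float:
    """Round a weight in [0, 1] to the nearest of 2**bits evenly spaced levels."""
    levels = 2 ** bits - 1
    return round(weight * levels) / levels


weight = 0.637  # hypothetical analog synaptic strength
for bits in (1, 4, 8):
    stored = quantize(weight, bits)
    print(f"{bits} bit(s): stored={stored:.4f}  error={abs(weight - stored):.4f}")
```

With a single bit the weight collapses to fully "on" or fully "off"; each extra bit narrows the rounding error but never removes it, whereas an analog device can in principle hold the intermediate value directly.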

"This system changes its conductance in an analog way, continuously, as the composition of the material changes," explains Shi. "It would be rather challenging to use CMOS, the traditional circuit technology, to imitate a synapse, because real biological synapses have a practically unlimited number of possible states—not just 'on' or 'off.'"

The synaptic transistor offers another advantage: non-volatile memory, which means even when power is interrupted, the device remembers its state.

Additionally, the new transistor is inherently energy efficient. The nickelate belongs to an unusual class of materials, called correlated electron systems, that can undergo an insulator-metal transition. At a certain temperature—or, in this case, when exposed to an external field—the conductance of the material suddenly changes.

"We exploit the extreme sensitivity of this material," says Ramanathan. "A very small excitation allows you to get a large signal, so the input energy required to drive this switching is potentially very small. That could translate into a large boost for energy efficiency."

The nickelate system is also well positioned for seamless integration into existing silicon-based systems.

"In this paper, we demonstrate high-temperature operation, but the beauty of this type of a device is that the 'learning' behavior is more or less temperature insensitive, and that's a big advantage," says Ramanathan. "We can operate this anywhere from about room temperature up to at least 160 degrees Celsius."

For now, the limitations relate to the challenges of synthesizing a relatively unexplored material system, and to the size of the device, which affects its speed.

"In our proof-of-concept device, the time constant is really set by our experimental geometry," says Ramanathan. "In other words, to really make a super-fast device, all you'd have to do is confine the liquid and position the gate electrode closer to it."

In fact, Ramanathan and his research team are already planning, with microfluidics experts at SEAS, to investigate the possibilities and limits for this "ultimate fluidic transistor."

He also has a seed grant from the National Academy of Sciences to explore the integration of synaptic transistors into bioinspired circuits, with L. Mahadevan, Lola England de Valpine Professor of Applied Mathematics, professor of organismic and evolutionary biology, and professor of physics.

"In the SEAS setting it's very exciting; we're able to collaborate easily with people from very diverse interests," Ramanathan says.

For the materials scientist, as much curiosity derives from exploring the capabilities of correlated oxides (like the nickelate used in this study) as from the possible applications.

"You have to build new instrumentation to be able to synthesize these new materials, but once you're able to do that, you really have a completely new material system whose properties are virtually unexplored," Ramanathan says. "It's very exciting to have such materials to work with, where very little is known about them and you have an opportunity to build knowledge from scratch."

"This kind of proof-of-concept demonstration carries that work into the 'applied' world," he adds, "where you can really translate these exotic electronic properties into compelling, state-of-the-art devices."

More information: www.nature.com/ncomms/2013/131 … full/ncomms3676.html

Journal information: Nature Communications

Provided by Harvard University