June 26, 2012 report

Google team: Self-teaching computers recognize cats

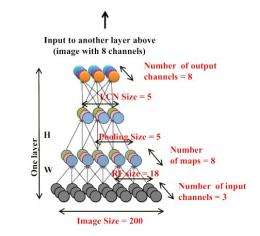

(Phys.org) -- At the International Conference on Machine Learning, which starts today in Edinburgh, participants will hear about Google’s results after several years’ work at its big-idea laboratory, Google X: computers can teach themselves to recognize cats. An artificial neural network taught itself to identify these animals from unlabeled images. The team of scientists and programmers, in a paper titled “Building high-level features using large scale unsupervised learning,” describes how it trained computers on a dataset of 10 million images, each 200x200 pixels.

In one of the largest neural networks for machine learning, the team connected 16,000 computer processors and used the pool of 10 million images, taken from YouTube videos. A brain-like neural network was then put to work. The Google research team was led by Stanford University computer scientist Andrew Y. Ng and Google fellow Jeff Dean. The “brain” assembled a dreamlike digital image of a cat by using a hierarchy of memory locations to cull features after exposure to the millions of images. Presented with the digital images, Google’s brain looked for cats.

Biologists suggest that neurons in the human brain detect significant objects, and the software neural network closely mirrored that behavior. It has been described as a “cybernetic cousin” to what takes place in the human visual cortex.

“We would like to understand if it is possible to learn a face detector using only unlabeled images downloaded from the Internet,” said the authors, describing the purpose at the outset of their research. “Contrary to what appears to be a widely held negative belief, our experimental results reveal that it is possible to achieve a face detector via only unlabeled data. Control experiments show that the feature detector is robust not only to translation but also to scaling and 3D rotation,” they said.

Their work in self-teaching machines is an example of scientific interest in what clusters of computers can achieve now in learning systems. According to Ng, the idea is that “You throw a ton of data at the algorithm and you let the data speak and have the software automatically learn from the data.”

At the same time, he is reluctant to even suggest that what scientists are achieving exactly mirrors the human brain, as computing capacity is still dwarfed by the number of connections in the brain. “A loose and frankly awful analogy is that our numerical parameters correspond to synapses,” he said.

More information: icml.cc/2012/

Building high-level features using large scale unsupervised learning, arXiv:1112.6209v3 [cs.LG] arxiv.org/abs/1112.6209

Abstract

We consider the problem of building high-level, class-specific feature detectors from only unlabeled data. For example, is it possible to learn a face detector using only unlabeled images? To answer this, we train a 9-layered locally connected sparse autoencoder with pooling and local contrast normalization on a large dataset of images (the model has 1 billion connections, the dataset has 10 million 200x200 pixel images downloaded from the Internet). We train this network using model parallelism and asynchronous SGD on a cluster with 1,000 machines (16,000 cores) for three days. Contrary to what appears to be a widely-held intuition, our experimental results reveal that it is possible to train a face detector without having to label images as containing a face or not. Control experiments show that this feature detector is robust not only to translation but also to scaling and out-of-plane rotation. We also find that the same network is sensitive to other high-level concepts such as cat faces and human bodies. Starting with these learned features, we trained our network to obtain 15.8% accuracy in recognizing 20,000 object categories from ImageNet, a leap of 70% relative improvement over the previous state-of-the-art.
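The figures in the abstract can be sanity-checked with a few lines of arithmetic. The Python sketch below is illustrative only and is not taken from the paper. It assumes that "relative improvement" means (new - old) / old, and it counts pixel positions without multiplying by color channels. The function names `implied_baseline` and `total_pixels` are hypothetical helpers introduced here.

```python
# Back-of-envelope checks on figures quoted in the abstract.
# Assumption: "70% relative improvement" means new = old * (1 + 0.70).

def implied_baseline(new_accuracy: float, relative_improvement: float) -> float:
    """Accuracy the earlier method would need for `new_accuracy` to be a
    `relative_improvement` gain over it (0.70 stands for 70%)."""
    return new_accuracy / (1.0 + relative_improvement)


def total_pixels(num_images: int, width: int, height: int) -> int:
    """Pixel positions in the whole dataset, ignoring color channels."""
    return num_images * width * height


baseline = implied_baseline(15.8, 0.70)       # percent accuracy
pixels = total_pixels(10_000_000, 200, 200)   # 10 million 200x200 images
cores_per_machine = 16_000 // 1_000           # 16,000 cores on 1,000 machines

print(f"implied previous accuracy: {baseline:.1f}%")
print(f"pixel positions in dataset: {pixels:.1e}")
print(f"cores per machine: {cores_per_machine}")
```

Under these assumptions, the earlier state of the art works out to roughly 9.3% accuracy, the dataset holds about 4 x 10^11 pixel positions, and each machine contributes 16 cores.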

© 2012 Phys.Org