Trillion-frame-per-second video

By using optical equipment in a totally unexpected way, MIT researchers have created an imaging system that makes light look slow.

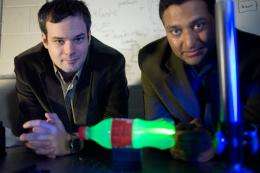

MIT researchers have created a new imaging system that can acquire visual data at a rate of one trillion exposures per second. That’s fast enough to produce a slow-motion video of a burst of light traveling the length of a one-liter bottle, bouncing off the cap and reflecting back to the bottle’s bottom.

Media Lab postdoc Andreas Velten, one of the system’s developers, calls it the “ultimate” in slow motion: “There’s nothing in the universe that looks fast to this camera,” he says.

The system relies on a recent technology called a streak camera, deployed in a totally unexpected way. The aperture of the streak camera is a narrow slit. Particles of light — photons — enter the camera through the slit and pass through an electric field that deflects them in a direction perpendicular to the slit. Because the electric field is changing very rapidly, it deflects late-arriving photons more than it does early-arriving ones.

The image produced by the camera is thus two-dimensional, but only one of the dimensions — the one corresponding to the direction of the slit — is spatial. The other dimension, corresponding to the degree of deflection, is time. The image thus represents the time of arrival of photons passing through a one-dimensional slice of space.
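To make the space-versus-time layout concrete, here is a minimal sketch of how such an image could be assembled from individual photon arrivals. It is an illustration only, not the camera's actual electronics or the researchers' software: the function name `streak_image`, the bin counts and the normalized units are all assumptions chosen for the example.

```python
# Illustrative sketch only: bins photon arrivals into a streak-style image.
# The function name, bin counts and units are assumptions, not from the article.

def streak_image(photons, n_space_bins, n_time_bins, slit_length, time_window):
    """Bin (position_along_slit, arrival_time) pairs into a 2D grid.

    Rows index arrival time (later photons are deflected further),
    columns index position along the slit.
    """
    image = [[0] * n_space_bins for _ in range(n_time_bins)]
    for x, t in photons:
        if not (0 <= x < slit_length and 0 <= t < time_window):
            continue  # photon misses the slit or arrives outside the sweep
        col = int(x / slit_length * n_space_bins)
        row = int(t / time_window * n_time_bins)  # later arrival, larger deflection
        image[row][col] += 1
    return image


# Hypothetical photons: (position along slit, arrival time), both normalized to [0, 1).
photons = [(0.1, 0.0), (0.1, 0.9), (0.6, 0.5), (0.6, 0.5), (1.5, 0.2)]
img = streak_image(photons, n_space_bins=4, n_time_bins=4,
                   slit_length=1.0, time_window=1.0)
for row in img:
    print(row)
```

Each printed row is one instant in time and each column is one position along the slit, so two photons entering at the same spot but at different times land in different rows. The last photon in the list falls outside the slit and is ignored.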

The camera was intended for use in experiments where light passes through or is emitted by a chemical sample. Since chemists are chiefly interested in the wavelengths of light that a sample absorbs, or in how the intensity of the emitted light changes over time, the fact that the camera registers only one spatial dimension is irrelevant.

But it’s a serious drawback in a video camera. To produce their super-slow-mo videos, Velten, Media Lab Associate Professor Ramesh Raskar and Moungi Bawendi, the Lester Wolfe Professor of Chemistry, must perform the same experiment — such as passing a light pulse through a bottle — over and over, continually repositioning the streak camera to gradually build up a two-dimensional image. Synchronizing the camera and the laser that generates the pulse, so that the timing of every exposure is the same, requires a battery of sophisticated optical equipment and exquisite mechanical control. It takes only a nanosecond — a billionth of a second — for light to scatter through a bottle, but it takes about an hour to collect all the data necessary for the final video. For that reason, Raskar calls the new system “the world’s slowest fastest camera.”
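The figures in this account can be checked with a little arithmetic. The sketch below assumes a bottle about 30 centimeters long and uses the speed of light in a vacuum; both are simplifications (light travels more slowly in water or plastic), and the variable names are chosen for illustration.

```python
# Back-of-the-envelope check of the article's numbers.
# The 0.3 m bottle length is an assumption; the article gives no dimensions.

C = 299_792_458.0  # speed of light in vacuum, meters per second

frame_rate = 1e12                      # exposures per second, from the article
frame_interval_s = 1.0 / frame_rate    # one picosecond per exposure
distance_per_frame_mm = C * frame_interval_s * 1000.0

bottle_length_m = 0.3                  # assumed length of a one-liter bottle
transit_time_ns = bottle_length_m / C * 1e9

capture_time_s = 3600.0                # "about an hour" of data collection
event_time_s = 1e-9                    # "only a nanosecond" for the event itself
slowdown = capture_time_s / event_time_s

print(f"Light travels {distance_per_frame_mm:.2f} mm between exposures")
print(f"Light crosses the assumed bottle in {transit_time_ns:.2f} ns")
print(f"Data collection takes {slowdown:.1e} times longer than the event")
```

Under these assumptions, light advances roughly 0.3 millimeters between exposures and crosses the bottle in about a nanosecond, which matches the article's figure. The hour of data collection is several trillion times longer than the event being recorded.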

Doing the math

After an hour, the researchers accumulate hundreds of thousands of data sets, each of which plots the one-dimensional positions of photons against their times of arrival. Raskar, Velten and other members of Raskar’s Camera Culture group at the Media Lab developed algorithms that can stitch that raw data into a set of sequential two-dimensional images.
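The article does not describe the group's algorithms, but the basic idea of turning many one-dimensional records into video frames can be sketched simply. The example below assumes each data set is a small grid indexed by time and then by position along the slit, with one data set per scan line. The function name `stitch_frames` and the toy data are hypothetical, and the real reconstruction surely handles calibration and noise that this sketch ignores.

```python
# Illustrative sketch only, not the Camera Culture group's algorithm.
# Assumes streak_images[y][t][x]: one streak image per scan line y,
# each indexed by time bin t and position x along the slit.

def stitch_frames(streak_images):
    """Reorder per-scan-line streak images into per-time-step 2D frames.

    Returns frames[t][y][x], so each frame is an ordinary 2D picture
    of the scene at a single instant.
    """
    n_times = len(streak_images[0])
    if any(len(scan) != n_times for scan in streak_images):
        raise ValueError("all scan lines must cover the same number of time bins")
    frames = []
    for t in range(n_times):
        # Take the row for time t from every scan line and stack them vertically.
        frames.append([list(scan[t]) for scan in streak_images])
    return frames


# Toy data: 2 scan lines, 3 time bins, 2 positions along the slit.
scans = [
    [[1, 0], [0, 1], [0, 0]],  # scan line y = 0
    [[0, 0], [1, 0], [0, 1]],  # scan line y = 1
]
frames = stitch_frames(scans)
for t, frame in enumerate(frames):
    print(f"time bin {t}: {frame}")
```

Because every scan line comes from a separate run of the experiment, this reordering only produces a meaningful video if each run is timed identically, which is why the synchronization described earlier matters so much.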

The streak camera and the laser that generates the light pulses — both cutting-edge devices with a cumulative price tag of $250,000 — were provided by Bawendi, a pioneer in research on quantum dots: tiny, light-emitting clusters of semiconductor particles that have potential applications in quantum computing, video-display technology, biological imaging, solar cells and a host of other areas.

The trillion-frame-per-second imaging system, which the researchers have presented both at the Optical Society's Computational Optical Sensing and Imaging conference and at Siggraph, is a spinoff of another Camera Culture project, a camera that can see around corners. That camera works by bouncing light off a reflective surface — say, the wall opposite a doorway — and measuring the time it takes different photons to return. But while both systems use ultrashort bursts of laser light and streak cameras, the arrangement of their other optical components and their reconstruction algorithms are tailored to their disparate tasks.

Because the ultrafast-imaging system requires multiple passes to produce its videos, it can’t record events that aren’t exactly repeatable. Any practical applications will probably involve cases where the way in which light scatters — or bounces around as it strikes different surfaces — is itself a source of useful information. Those cases may, however, include analyses of the physical structure of both manufactured materials and biological tissues — “like ultrasound with light,” as Raskar puts it.

As a longtime camera researcher, Raskar also sees a potential application in the development of better camera flashes. “An ultimate dream is, how do you create studio-like lighting from a compact flash? How can I take a portable camera that has a tiny flash and create the illusion that I have all these umbrellas, and spotlights, and so on?” asks Raskar, the NEC Career Development Associate Professor of Media Arts and Sciences. “With our ultrafast imaging, we can actually analyze how the photons are traveling through the world. And then we can recreate a new photo by creating the illusion that the photons started somewhere else.”

“It’s very interesting work. I am very impressed,” says Nils Abramson, a professor of applied holography at Sweden’s Royal Institute of Technology. In the late 1970s, Abramson pioneered a technique called light-in-flight holography, which ultimately proved able to capture images of light waves at a rate of 100 billion frames per second.

But as Abramson points out, his technique requires so-called coherent light, meaning that the troughs and crests of the light waves that produce the image have to line up with each other. “If you happen to destroy the coherence when the light is passing through different objects, then it doesn’t work,” Abramson says. “So I think it’s much better if you can use ordinary light, which Ramesh does.”

Indeed, Velten says, “As photons bounce around in the scene or inside objects, they lose coherence. Only an incoherent detection method like ours can see those photons.” And those photons, Velten says, could let researchers “learn more about the material properties of the objects, about what is under their surface and about the layout of the scene. Because we can see those photons, we could use them to look inside objects — for example, for medical imaging, or to identify materials.”

“I’m surprised that the method I’ve been using has not been more popular,” Abramson adds. “I’ve felt rather alone. I’m very glad that someone else is doing something similar. Because I think there are many interesting things to find when you can do this sort of study of the light itself.”

Provided by Massachusetts Institute of Technology

This story is republished courtesy of MIT News (web.mit.edu/newsoffice/), a popular site that covers news about MIT research, innovation and teaching.