September 15, 2011 report

New ‘Koomey’s Law’ of power efficiency parallels Moore’s Law

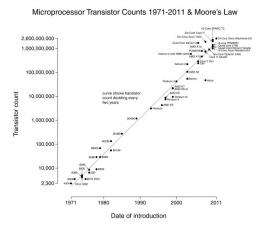

(PhysOrg.com) -- For most of the computer age, the central theme in computer hardware architecture has been to create more computational power using the same amount of chip space. Intel co-founder Gordon Moore even came up with a “law”, based on what he’d seen up to that point, to predict how things would go in the future: that computing power would double at a regular pace, a rate now commonly quoted as every year and a half. Now Jonathan Koomey, a consulting professor at Stanford, has led a study showing that the electrical energy efficiency of computers has followed roughly the same path. He and his colleagues from Microsoft and Intel have published their results in IEEE Annals of the History of Computing, showing that the energy efficiency of computers has doubled nearly every eighteen months (a trend now called, appropriately enough, Koomey’s Law), going all the way back to the very first computers of the 1940s and 1950s.

This is not the first time Koomey’s name has been in the news: just last month he was the lead author of a paper showing that electricity consumed by data centers in the U.S. and around the world grew more slowly from 2005 to 2010 than a 2007 U.S. EPA report had predicted. This time around, Koomey, in collaboration with Intel and Microsoft, has been studying how much electricity computers use relative to their processing power, in a historical context. Way back in 1946, for example, ENIAC, one of the first true computers, used approximately 150 kilowatts of electricity to perform just a few hundred calculations per second.

Using historical data, the team created a graph comparing the computing power of the average computer (from supercomputers to laptops) with the amount of electricity it needed, and found that energy efficiency improvements, from the earliest machines until now, have moved in virtual lockstep with increases in processing power: energy efficiency, they found, effectively doubled every 1.57 years. Based on this, they predict that the trend is likely to continue into the foreseeable future.
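To make a figure like “doubled every 1.57 years” concrete, here is a minimal Python sketch of one common way to estimate a doubling time from a series of efficiency measurements: fit a straight line to log2(efficiency) against year, so the slope is doublings per year and its inverse is the doubling time. This is not necessarily the study’s exact procedure (its data and methods are described in the paper’s Web Extra appendix). The function names `doubling_time` and `growth_factor` are hypothetical, and the series below is synthetic, generated with an exact 1.57-year doubling time purely to show that the fit recovers it; it is not the study’s data.

```python
import math


def doubling_time(years, values):
    """Estimate a doubling time (in years) with an ordinary least-squares fit
    of log2(value) against year; the fitted slope is doublings per year."""
    if len(years) != len(values) or len(years) < 2:
        raise ValueError("need at least two (year, value) pairs")
    logs = [math.log2(v) for v in values]
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(logs) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, logs))
    slope = sxy / sxx  # doublings per year
    if slope <= 0:
        raise ValueError("series is not growing, so it has no doubling time")
    return 1.0 / slope


def growth_factor(span_years, t_double):
    """Multiplicative improvement over span_years at a constant doubling time."""
    return 2.0 ** (span_years / t_double)


if __name__ == "__main__":
    # Synthetic (made-up) series with an exact 1.57-year doubling time,
    # sampled every 5 years; NOT measurements from the study.
    years = list(range(1950, 2011, 5))
    values = [2.0 ** ((y - 1950) / 1.57) for y in years]
    t = doubling_time(years, values)
    print(f"estimated doubling time: {t:.2f} years")
    print(f"improvement per decade: {growth_factor(10, t):.1f}x")
    print(f"annual improvement: {(growth_factor(1, t) - 1) * 100:.1f}%")
```

Working the arithmetic through, a constant 1.57-year doubling time corresponds to an improvement of about 83-fold per decade (2^(10/1.57)), or roughly 55% per year. Fitting on a log scale is what makes the exponential trend a straight line, which is why such plots are usually drawn with a logarithmic vertical axis.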

This matters because computing platforms have become more mobile, and end users increasingly tend to value power efficiency (because it means longer battery life) more than how fast their smartphone or tablet can produce results. Thus, Koomey’s Law may well become the rallying cry of the future, much as Moore’s Law has been in the past. Hopefully, though, engineers won’t start trading away Moore’s Law gains to get these results, since that could lead to small devices that run for weeks on batteries alone but are sluggish.

More information:

“Implications of Historical Trends in the Electrical Efficiency of Computing,” IEEE Annals of the History of Computing, vol. 33, no. 3, pp. 46-54, July-September 2011. doi.ieeecomputersociety.org/10.1109/MAHC.2010.28

Abstract

The electrical efficiency of computation has doubled roughly every year and a half for more than six decades, a pace of change comparable to that for computer performance and electrical efficiency in the microprocessor era. These efficiency improvements enabled the creation of laptops, smart phones, wireless sensors, and other mobile computing devices, with many more such innovations yet to come. The Web Extra appendix outlines the data and methods used in this study.

© 2011 PhysOrg.com