December 16, 2021 report

A classical machine learning technique for easier segmentation of mummified remains

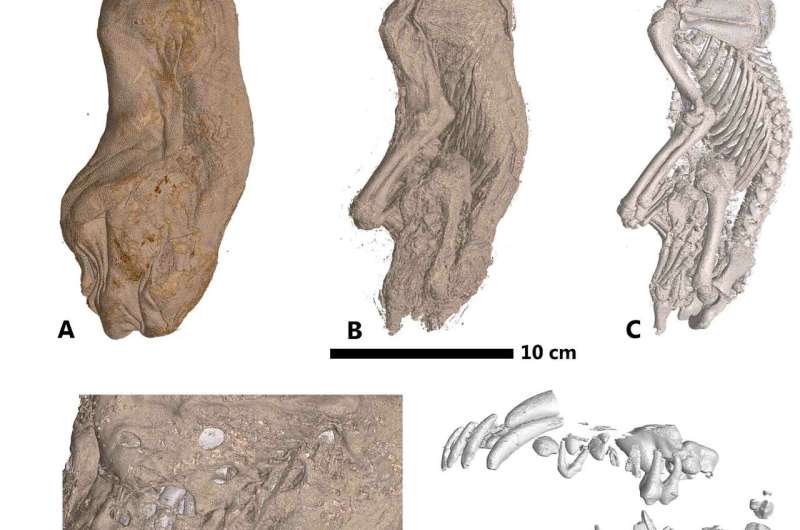

A team of researchers from the University of Malta and the European Synchrotron Radiation Facility in France has developed a new segmentation method for viewing the inside of mummified remains. In their paper published in the open-access journal PLOS ONE, the group describes the technique and how well it performed when tested on mummified animals.

In years past, if archaeologists wanted to know what a wrapped mummy looked like, they simply cut the wrappings off and had a look. That approach damaged the remains, which is why it is no longer used. Instead, researchers have turned to X-rays and CT scans. Unfortunately, the raw scans are hard to interpret until the different materials in them have been separated out, a task that normally takes considerable human effort. To speed this up, researchers have recently used deep-learning algorithms trained on imagery of mummified remains. While the results have at times been spectacular, the process is lengthy and difficult. In this new effort, the researchers tried an approach that requires much less work: using classical machine learning as a processing aid.

Techniques for imaging the inside of mummy wrappings rely on segmentation. A scan is a 3D volume made up of voxels (3D pixels), usually examined slice by slice, and each voxel is assigned to a class such as soft tissue or bone. Once these assignments have been made, the labeled voxels are pieced back together to form an image in which each material can be viewed separately.
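To make voxel labeling concrete, here is a toy Python sketch. It is not the researchers' method: it assigns each voxel of a tiny 3D volume to a class using two hypothetical intensity thresholds (`soft_min`, `bone_min`), working slice by slice, and then groups the voxel coordinates by class, the form a renderer would need to display each material separately. Real scans of mummies are far too noisy and complex for fixed thresholds, which is why trained models are used instead.

```python
# Toy illustration of voxel-wise segmentation, NOT the method from the paper.
# Assumption: intensities are normalized to 0..1, and two hypothetical
# thresholds separate background, soft tissue and bone.
from collections import defaultdict

CLASSES = ("background", "soft_tissue", "bone")

def classify_voxel(intensity, soft_min=0.3, bone_min=0.7):
    """Assign one voxel to a class index using hypothetical thresholds."""
    if intensity >= bone_min:
        return 2
    if intensity >= soft_min:
        return 1
    return 0

def segment_volume(volume):
    """Label every voxel, slice by slice; the volume is indexed volume[z][y][x]."""
    return [[[classify_voxel(v) for v in row] for row in slice_] for slice_ in volume]

def voxels_by_class(labels):
    """Group voxel coordinates (z, y, x) by class name for separate 3D display."""
    groups = defaultdict(list)
    for z, slice_ in enumerate(labels):
        for y, row in enumerate(slice_):
            for x, label in enumerate(row):
                groups[CLASSES[label]].append((z, y, x))
    return dict(groups)

# A 2x2x2 toy volume: two slices of 2x2 voxels each.
volume = [
    [[0.1, 0.5], [0.8, 0.2]],
    [[0.9, 0.4], [0.0, 0.75]],
]
labels = segment_volume(volume)
print(labels)                            # [[[0, 1], [2, 0]], [[2, 1], [0, 2]]]
print(voxels_by_class(labels)["bone"])   # [(0, 1, 0), (1, 0, 0), (1, 1, 1)]
```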

Noting that much of the complexity of current systems stems from the slice-by-slice analysis performed by deep-learning models, the researchers turned instead to classical machine learning, which could be trained to consider the 3D data as a whole and then to identify, in new scans, the materials it had learned to recognize from previously segmented images.
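The idea of classifying voxels from whole-volume context can be illustrated with another hedged sketch. Each voxel is described by features drawn from its 3D neighbourhood (its own intensity plus the mean and maximum of the surrounding 3×3×3 cube), and a nearest-centroid classifier, a very simple classical technique, is trained on a manually segmented volume and then applied to a new one. The feature set, the classifier and every function name here are illustrative assumptions; the researchers' actual tool may use different features and a different model.

```python
# Sketch: classify voxels from features of their 3D neighbourhood with a
# nearest-centroid classifier. Features, classifier and names are
# hypothetical stand-ins, not the paper's actual design.
from itertools import product
from statistics import mean

def neighbourhood(volume, z, y, x, radius=1):
    """Intensities in the (2r+1)^3 cube around (z, y, x), clipped at the edges."""
    depth, height, width = len(volume), len(volume[0]), len(volume[0][0])
    values = []
    for dz, dy, dx in product(range(-radius, radius + 1), repeat=3):
        zz, yy, xx = z + dz, y + dy, x + dx
        if 0 <= zz < depth and 0 <= yy < height and 0 <= xx < width:
            values.append(volume[zz][yy][xx])
    return values

def features(volume, z, y, x):
    """Hypothetical feature vector: own intensity, neighbourhood mean and max."""
    n = neighbourhood(volume, z, y, x)
    return (volume[z][y][x], mean(n), max(n))

def train(volume, manual_labels):
    """Average feature vector (centroid) per class from a manually labeled volume."""
    sums, counts = {}, {}
    for z, slice_ in enumerate(manual_labels):
        for y, row in enumerate(slice_):
            for x, label in enumerate(row):
                f = features(volume, z, y, x)
                acc = sums.setdefault(label, [0.0] * len(f))
                for i, value in enumerate(f):
                    acc[i] += value
                counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}

def predict(volume, centroids):
    """Label each voxel of a new volume with the class of its nearest centroid."""
    def nearest(f):
        return min(centroids,
                   key=lambda c: sum((a - b) ** 2 for a, b in zip(f, centroids[c])))
    return [[[nearest(features(volume, z, y, x)) for x in range(len(row))]
             for y, row in enumerate(slice_)]
            for z, slice_ in enumerate(volume)]

# Hypothetical training data: class 0 = dark material, class 1 = bright material.
train_volume = [[[0.1, 0.2], [0.8, 0.9]], [[0.15, 0.85], [0.9, 0.1]]]
manual_labels = [[[0, 0], [1, 1]], [[0, 1], [1, 0]]]
centroids = train(train_volume, manual_labels)

new_volume = [[[0.05, 0.95], [0.3, 0.7]], [[0.6, 0.2], [0.0, 1.0]]]
print(predict(new_volume, centroids))  # [[[0, 1], [0, 1]], [[1, 0], [0, 1]]]
```

In this tiny 2×2×2 example every voxel's neighbourhood covers the whole volume, so the decision effectively comes down to each voxel's own intensity. On realistic volumes the neighbourhood features are what let a voxel draw on the tissue around it in all three dimensions rather than only within its own slice.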

Tests on mummified animals showed the system to be nearly as accurate as those based on deep learning: it was 94 to 98 percent accurate, compared with 97 to 99 percent for existing deep-learning software. The researchers also note that their approach could be scaled for use in other fields, such as paleontology and medical imaging.
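Accuracy figures like these can be read as the share of voxels whose automatic label agrees with a manual segmentation. The sketch below computes that share, assuming this simple definition (the paper may use a different or more detailed metric); with 3 mismatches out of 100 voxels it gives 97 percent, the low end of the range reported for deep-learning software.

```python
# Voxel-level accuracy, assuming "accuracy" means the fraction of voxels whose
# predicted label matches the manually assigned one.
def voxel_accuracy(predicted, manual):
    """Fraction of voxels (indexed [z][y][x]) where predicted == manual."""
    matches = total = 0
    for p_slice, m_slice in zip(predicted, manual):
        for p_row, m_row in zip(p_slice, m_slice):
            for p, m in zip(p_row, m_row):
                matches += (p == m)  # True counts as 1
                total += 1
    if total == 0:
        raise ValueError("no voxels to compare")
    return matches / total

manual = [[[1] * 10 for _ in range(10)]]  # one hypothetical 10x10 labeled slice
predicted = [[row[:] for row in slice_] for slice_ in manual]
for x in range(3):  # introduce three hypothetical mislabeled voxels
    predicted[0][0][x] = 0
print(f"{voxel_accuracy(predicted, manual):.0%}")  # 97%
```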

More information: Marc Tanti et al, Automated segmentation of microtomography imaging of Egyptian mummies, PLOS ONE (2021). DOI: 10.1371/journal.pone.0260707

Journal information: PLoS ONE

© 2021 Science X Network