New memristor boosts accuracy and efficiency for neural networks on an atomic scale

Just like their biological counterparts, hardware systems that mimic the brain's neural circuitry need building blocks that can adjust the strength of their synaptic connections, with some connections strengthening at the expense of others. One such building block, the memristor, stores this information in its resistance state. New work looks to overcome reliability issues in these devices by scaling memristors down to the atomic level.

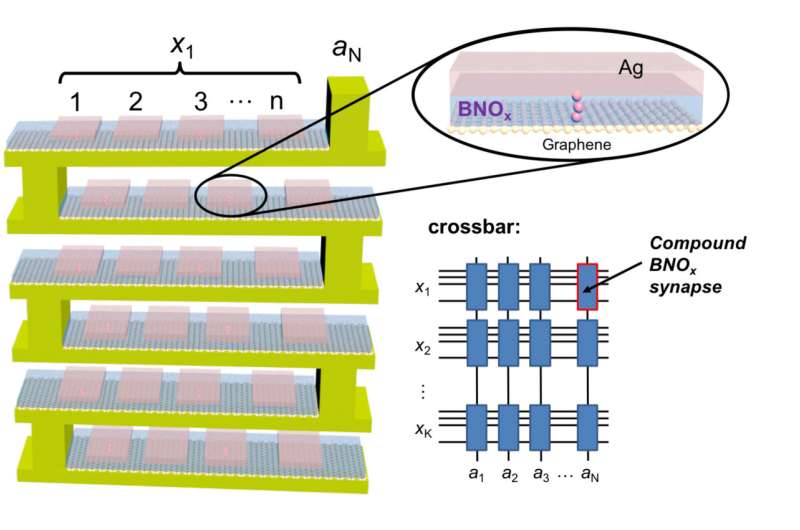

A group of researchers has demonstrated a new type of compound synapse that can program synaptic weights and perform vector-matrix multiplication with significant advances over the current state of the art. In work published in the Journal of Applied Physics, the group built its compound synapse from atomically thin boron nitride memristors operating in parallel to ensure efficiency and accuracy.
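For readers unfamiliar with why memristor arrays are suited to vector-matrix multiplication, the generic crossbar idea can be sketched as follows. This is a minimal, idealized sketch and not the authors' circuit: it assumes voltages are applied to the rows, each device passes a current equal to its conductance times the voltage (Ohm's law), and the currents on each column simply add (Kirchhoff's current law). Wire resistance, sneak paths and device nonlinearity are ignored, and the function name `crossbar_vmm` is hypothetical.

```python
from typing import List


def crossbar_vmm(voltages: List[float], conductances: List[List[float]]) -> List[float]:
    """Column currents (amperes) of an idealized memristor crossbar.

    voltages[i] is the voltage (volts) applied to row i.
    conductances[i][j] is the conductance (siemens) of the device joining
    row i to column j; it plays the role of a synaptic weight.
    """
    if len(voltages) != len(conductances):
        raise ValueError("need one voltage per crossbar row")
    n_cols = len(conductances[0])
    if any(len(row) != n_cols for row in conductances):
        raise ValueError("every row must have the same number of columns")

    currents = [0.0] * n_cols
    for i, v in enumerate(voltages):
        for j in range(n_cols):
            # Ohm's law gives each device's current; Kirchhoff's current law
            # sums the contributions of all devices sharing column j.
            currents[j] += conductances[i][j] * v
    return currents


# Two inputs, two outputs: the whole product is computed in one "read" step.
print(crossbar_vmm([0.1, 0.2], [[1e-6, 2e-6], [3e-6, 4e-6]]))
```

With these example values the two column currents come out to about 0.7 µA and 1.0 µA. In hardware, every multiply-and-add happens physically and simultaneously, which is why the accuracy of each stored conductance matters so much.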

The article appears in a special topic section of the journal devoted to "New Physics and Materials for Neuromorphic Computation," which highlights new developments in physics and materials science research that hold promise for building the very large-scale, integrated "neuromorphic" systems of tomorrow, systems that will carry computation beyond the limitations of today's semiconductors.

"There's a lot of interest in using new types of materials for memristors," said Ivan Sanchez Esqueda, an author on the paper. "What we're showing is that filamentary devices can work well for neuromorphic computing applications, when constructed in new clever ways."

Current memristor technology suffers from wide variation in how signals are stored and read, both across different types of memristors and across different runs of the same memristor. To overcome this, the researchers operated several memristors in parallel. The combined output can achieve accuracies up to five times those of conventional devices, an advantage that compounds as devices become more complex.
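Why running devices in parallel helps can be illustrated with a toy simulation. This is a sketch under loudly stated assumptions, not the paper's model: each device is assumed to land at its target conductance with independent Gaussian relative error `rel_sigma`, and the compound synapse's weight is taken as the summed conductance normalized by the number of devices. The helper names and the 30% error figure are hypothetical, and the sketch makes no attempt to reproduce the accuracy figures reported by the researchers.

```python
import random
import statistics


def compound_weight(target: float, n_devices: int, rel_sigma: float, rng: random.Random) -> float:
    """Effective weight of n_devices memristors programmed in parallel.

    Hypothetical variation model: each device lands at
    target * (1 + noise), with noise drawn from N(0, rel_sigma).
    Parallel conductances add; dividing by n_devices rescales the sum
    back to a single-device weight.
    """
    total = sum(target * (1.0 + rng.gauss(0.0, rel_sigma)) for _ in range(n_devices))
    return total / n_devices


def weight_spread(n_devices: int, trials: int = 2000, target: float = 1.0,
                  rel_sigma: float = 0.3, seed: int = 0) -> float:
    """Standard deviation of the compound weight over many programming trials."""
    rng = random.Random(seed)
    samples = [compound_weight(target, n_devices, rel_sigma, rng) for _ in range(trials)]
    return statistics.stdev(samples)


for n in (1, 4, 16):
    print(f"{n:2d} devices in parallel -> weight spread {weight_spread(n):.3f}")
```

Under the independence assumption the spread falls roughly as one over the square root of the number of devices, so four devices about halve it and sixteen cut it to about a quarter. Real devices may have correlated errors, which would weaken this benefit.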

The choice to go to the subnanometer level, Sanchez said, was driven by the need to keep all of these parallel memristors energy-efficient. An array of the group's memristors was found to be 10,000 times more energy-efficient than currently available memristors.

"It turns out if you start to increase the number of devices in parallel, you can see large benefits in accuracy while still conserving power," Sanchez said. Next, he said, the team plans to further showcase the potential of the compound synapses by using them to complete increasingly complex tasks, such as image and pattern recognition.

More information: Efficient learning and crossbar operations with atomically-thin 2-D material compound synapses, Journal of Applied Physics (2018). DOI: 10.1063/1.5042468

Journal information: Journal of Applied Physics

Provided by American Institute of Physics