Metacognition training boosts gen chem exam scores

It's a lesson in scholastic humility: You waltz into an exam, confident that you've got a good enough grip on the class material to swing an 80 percent or so, maybe a 90 if some of the questions go your way.

Then you get your results: 60 percent. Your grade and your stomach both sink. What went wrong?

Students, like people in general, tend to overestimate their own abilities. But University of Utah research shows that students who overcome this tendency score better on final exams. The boost is strongest for students in the lowest 25 percent of the class. By thinking about their own thinking, a practice called metacognition, these students scored 10 percent higher on the final exam, on average, than comparable students without the training: a full letter grade.

The study, published today in the Journal of Chemical Education, is authored by University of Utah doctoral student Brock Casselman and professor Charles Atwood.

"The goal was to create a system that would help the student to better understand their ability," says Casselman, "so that by the time they get to the test, they will be ready."

Errors in estimation

General chemistry at the University of Utah is a rigorous course. In 2010 only two-thirds of the students who took the course passed it, and of those who didn't, only a quarter ever retook and passed the class.

"We're trying to stop that," Atwood says. "We always want our students to do better, particularly on more difficult, higher-level cognitive tasks, and we want them to be successful and competitive with any other school in the country."

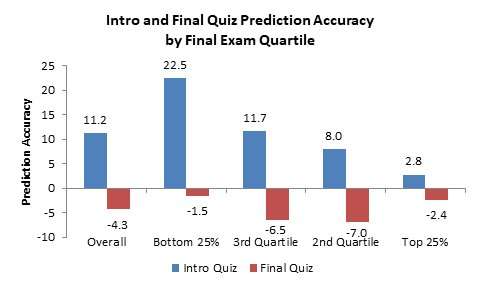

Part of the problem may lie in how students view their own abilities. Near the beginning of the school year, students were asked to predict their scores on a midterm pretest. Averaged over the whole class, they overestimated their scores by 11 percent. Students in the lowest 25 percent of scores, the "bottom quartile," overestimated by around 22 percent.

This phenomenon is well documented. In 1999, psychologists David Dunning and Justin Kruger published a paper showing that people who perform poorly at a task tend to overestimate their ability, while those who excel at it may slightly underestimate their competence. The beginning-of-year survey showed that general chemistry students are not exempt.

"They convince themselves that they know what they're doing when in fact they really don't," Atwood says.

The antidote to this tendency is metacognition: thinking about, and recognizing, one's own strengths and limitations. Atwood says that scientists use metacognitive skills to evaluate the course of their research.

"Once they have got some chunk figured out and realize 'I don't understand this as well as I thought I did,' they will adjust their learning pattern," he says. After reviewing previous research on metacognition in education, Atwood and Casselman set out to design a system to help chemistry students accurately estimate their performance and make adjustments as necessary.

Accurate estimation

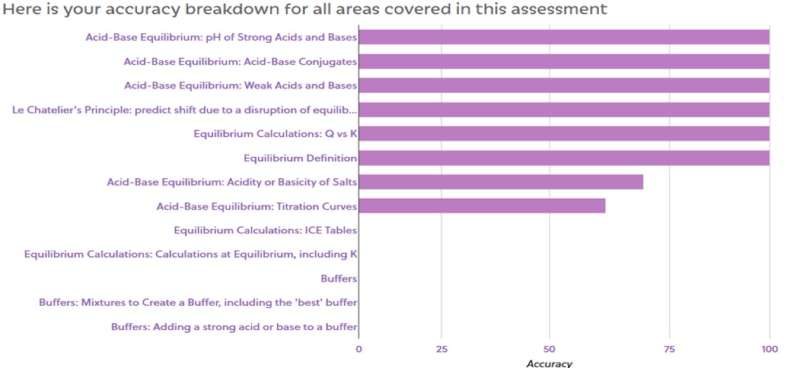

In collaboration with Madra Learning, an online homework and learning assessment platform, Casselman and Atwood developed practice materials that simulated a realistic test, and asked students to predict their scores on each practice test before taking it. They also implemented a feedback system that identified the topics students were struggling with, so that each student could draw up a personal study plan.

After a few years of refining the feedback system, they added weekly quizzes to the experimental metacognition training to give students more frequent feedback. By the first midterm exam of the 2016 class, Casselman and Atwood could see that the experimental course section's scores were significantly higher than those of a control section that did not receive metacognition training. "I was ecstatic!" Casselman says.

By the final exam, students' predictions of their scores were nearly accurate, or slightly too low. Overall, the researchers report, students who learned metacognition skills scored around 4 percent higher on the final exam than their peers in the control section. The strongest improvement, however, was in the bottom quartile, whose students scored a full 10 percent better, on average, than the bottom quartile of the control section.

"This will take D and F students and turn them into C students," Atwood says. "We also see it taking higher-end C students and making them into B students. Higher-end B students become A students."

Atwood adds that the students took a nationally standardized test as their final exam, so the researchers can compare the U students' performance with that of students nationwide. The bottom quartile of U students who received metacognition training scored in the 54th percentile. "So, our bottom students are now performing better than the national average," Atwood says.

"They're not going to be overpredicting their ability," Casselman says. "They're going to go in knowing exactly how well they're going to do and they will have prepared in the areas they knew they were weakest."

A cumulative effect

This study covered students in the first semester of general chemistry. Casselman has now expanded the study into the second semester, meaning some students have had no semesters of metacognition training, some have had one and some have had two. Preliminary analysis suggests that the training may have a cumulative effect across semesters.

"The students who are successful will ask themselves: what is this question asking me to do?" Atwood says. "How does that relate to what we're doing in class? Why are they giving me this question? If there's an equation, why does this equation work? That's the metacognitive part. If they will kick that in, they will see their grades go straight through the roof."

Both Atwood and Casselman say this principle is not limited to chemistry and could be applied across campus. It applies to learning in general, and it has been hinted at for centuries, including in a Confucian proverb:

"Real knowledge is to know the extent of one's ignorance."

More information: Brock L. Casselman et al, Improving General Chemistry Course Performance through Online Homework-Based Metacognitive Training, Journal of Chemical Education (2017). DOI: 10.1021/acs.jchemed.7b00298

Journal information: Journal of Chemical Education

Provided by University of Utah