Teaching robots to see

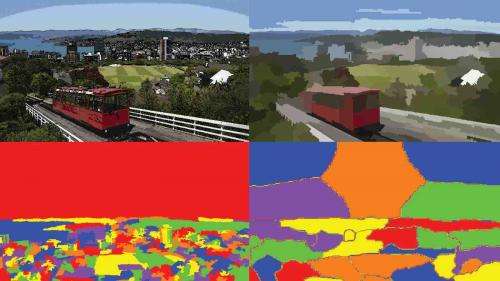

Syed Saud Naqvi, a PhD student from Pakistan, is working on an algorithm to help computer programmes and robots to view static images in a way that is closer to how humans see.

Saud explains: "Right now computer programmes see things as very flat—they find it difficult to distinguish one object from another."

Facial recognition is already in use, but object detection is more complex because there are many more variables, says Dr Will Browne, one of Saud's supervisors.

Different object detection algorithms exist: some focus on patterns, textures or colours, while others focus on the outline of a shape. Saud's algorithm extracts the information most relevant to decision-making by selecting, for each individual image, the best of these algorithms to use.

"The defining feature of an object is not always the same—sometimes it's the shape that defines it, sometimes it's the textures or colours. A picture of a field of flowers, for example, could need a different algorithm than an image of a cardboard box," says Saud.
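The article does not say how Saud's algorithm chooses between methods. As a purely illustrative sketch, and not the published method, the per-image selection idea can be shown by running several candidate feature extractors on an image and keeping whichever one produces the most clearly two-group ("object versus background") response. The extractors, the `select_extractor` function and the scoring rule (Otsu's between-class variance on a normalised feature map) are all hypothetical stand-ins chosen for this example. Texture and pattern extractors are left out for brevity.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical types: an image is a grid of (r, g, b) pixels, and a
# feature map is one score per pixel in row-major order.
Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]
FeatureMap = List[float]


def colour_map(img: Image) -> FeatureMap:
    """Distance of each pixel's colour from the image's mean colour."""
    pixels = [p for row in img for p in row]
    n = len(pixels)
    mean = [sum(p[c] for p in pixels) / n for c in range(3)]
    return [sum((p[c] - mean[c]) ** 2 for c in range(3)) ** 0.5 for p in pixels]


def edge_map(img: Image) -> FeatureMap:
    """Grey-level change to the right of and below each pixel (a crude outline detector)."""
    grey = [[sum(p) / 3 for p in row] for row in img]
    h, w = len(grey), len(grey[0])
    out = []
    for y in range(h):
        for x in range(w):
            dx = grey[y][x + 1] - grey[y][x] if x + 1 < w else 0.0
            dy = grey[y + 1][x] - grey[y][x] if y + 1 < h else 0.0
            out.append(abs(dx) + abs(dy))
    return out


def separability(values: FeatureMap) -> float:
    """Otsu's maximum between-class variance of the map, normalised to [0, 1].

    A high score means the map splits cleanly into a 'foreground' and a
    'background' group; a flat map scores 0.
    """
    lo, hi = min(values), max(values)
    if hi == lo:
        return 0.0
    norm = [(v - lo) / (hi - lo) for v in values]
    n = len(norm)
    best = 0.0
    for t in sorted(set(norm))[:-1]:  # every threshold that leaves both classes non-empty
        low = [v for v in norm if v <= t]
        high = [v for v in norm if v > t]
        w0, w1 = len(low) / n, len(high) / n
        m0, m1 = sum(low) / len(low), sum(high) / len(high)
        best = max(best, w0 * w1 * (m0 - m1) ** 2)
    return best


# Hypothetical candidate set; texture and pattern extractors are omitted.
EXTRACTORS: Dict[str, Callable[[Image], FeatureMap]] = {
    "colour": colour_map,
    "edge": edge_map,
}


def select_extractor(img: Image) -> str:
    """Pick, per image, the extractor whose map best separates two groups."""
    scores = {name: separability(f(img)) for name, f in EXTRACTORS.items()}
    return max(scores, key=scores.get)


# Toy images: one blue pixel on red (same grey level), and a black/white step.
red, blue = (255, 0, 0), (0, 0, 255)
colour_blob = [[red] * 4 for _ in range(4)]
colour_blob[2][1] = blue
step_edge = [[(0, 0, 0)] * 2 + [(255, 255, 255)] * 2 for _ in range(4)]

print(select_extractor(colour_blob))  # colour
print(select_extractor(step_edge))    # edge
```

In these toy inputs the blue pixel is invisible to the edge extractor because red and blue have the same grey level, so the colour extractor is chosen. In the black-and-white step every pixel is equally far from the mean colour, so the colour map is flat and the edge extractor wins. This mirrors, in miniature, the point that the defining feature of an object changes from image to image.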

Work on the algorithm was presented at this year's Genetic and Evolutionary Computation Conference (GECCO) in Vancouver, where it received a Best Paper Award.

The computer vision algorithm will now be developed further through a Victoria Summer Scholarship project that applies it to object detection tasks on a dynamic, real-world robot. This will take the algorithm from analysing static images to analysing moving, real-time scenes.

It is hoped that the algorithm will help a robot navigate its environment by separating objects from their surroundings.

Dr Browne says there are a number of uses for this kind of technology, both now and in the future. Immediate possibilities include automatically captioning photos on social media and other websites with information about their location or content.

"Most of the robots that have been dreamed up in pop culture would need this kind of technology to work. Currently, there aren't many home helper robots which can load a washing machine—this technology would help them do it."

It's early days, but Dr Browne says that in the future this kind of imaging technology could be adapted for use in medical testing, such as identifying signs of cancer in mammograms.

Provided by Victoria University