IBM research advances device performance for quantum computing

(PhysOrg.com) -- Scientists at IBM Research have achieved major advances in quantum computing device performance that may accelerate the realization of a practical, full-scale quantum computer. For specific applications, quantum computing, which exploits the underlying quantum mechanical behavior of matter, has the potential to deliver computational power that is unrivaled by any supercomputer today.

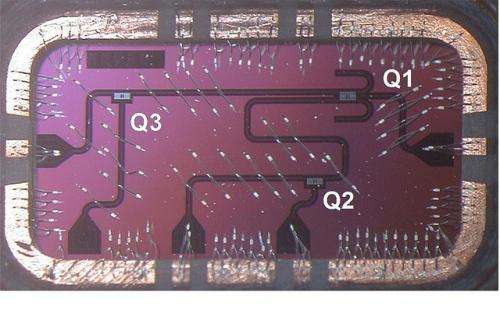

Using a variety of techniques in the IBM labs, scientists have established three new records for reducing errors in elementary computations and retaining the integrity of quantum mechanical properties in quantum bits (qubits) – the basic units that carry information within quantum computing. IBM has chosen to employ superconducting qubits, which use established microfabrication techniques developed for silicon technology, providing the potential to one day scale up to and manufacture thousands or millions of qubits.

IBM researchers will be presenting their latest results today at the annual American Physical Society meeting taking place February 27-March 2, 2012 in Boston, Mass.

The special properties of qubits will allow quantum computers to work on millions of computations at once, while desktop PCs can typically handle only a few computations simultaneously. For example, a single 250-qubit state contains more bits of information than there are atoms in the universe.

These properties will have widespread implications, foremost for the field of data encryption, where quantum computers could factor very large numbers like those used to encode and decode sensitive information.

"The quantum computing work we are doing shows it is no longer just a brute force physics experiment. It's time to start creating systems based on this science that will take computing to a new frontier," says IBM scientist Matthias Steffen, manager of the IBM Research team that's focused on developing quantum computing systems to a point where they can be applied to real-world problems.

Other potential applications for quantum computing may include searching databases of unstructured information, performing a range of optimization tasks and solving previously unsolvable mathematical problems.

The most basic piece of information that a typical computer understands is a bit. Much like a light that can be switched on or off, a bit can have only one of two values: "1" or "0". A qubit can hold a value of "1" or "0" as well as both values at the same time. This property, known as superposition, is what allows quantum computers to perform millions of calculations at once.
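As a rough illustration of the bit-versus-qubit distinction described above, the following Python sketch models a single qubit as a pair of amplitudes and computes the chance of reading "0" or "1" when it is measured. The function name `measurement_probabilities` and the two-amplitude representation are assumptions made for this example; they are the standard textbook picture, not a description of IBM's hardware or software.

```python
import math

def measurement_probabilities(amp0, amp1):
    """Return (P(0), P(1)) for a single-qubit state amp0|0> + amp1|1>.

    Hypothetical helper for illustration only.
    """
    p0 = abs(amp0) ** 2
    p1 = abs(amp1) ** 2
    total = p0 + p1
    # Normalize so the two probabilities sum to 1 even if the inputs did not.
    return p0 / total, p1 / total

# A classical-like state: the qubit is definitely "0".
print(measurement_probabilities(1, 0))

# An equal superposition: "0" and "1" are equally likely when measured.
s = 1 / math.sqrt(2)
p0, p1 = measurement_probabilities(s, s)
print(p0, p1)
```

A classical bit corresponds to the first case only; the second case, where both amplitudes are nonzero, has no classical counterpart.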

One of the great challenges for scientists seeking to harness the power of quantum computing is controlling or removing quantum decoherence – the creation of errors in calculations caused by interference from factors such as heat, electromagnetic radiation, and materials defects. To deal with this problem, scientists have been experimenting for years to discover ways of reducing the number of errors and of lengthening the time periods over which the qubits retain their quantum mechanical properties. When this time is sufficiently long, error correction schemes become effective, making it possible to perform long and complex calculations.

There are many viable systems that can potentially lead to a functional quantum computer. IBM is focusing on superconducting qubits, which should allow an easier transition to scale-up and manufacturing.

IBM has recently been experimenting with a unique “three dimensional” superconducting qubit (3D qubit), an approach that was initiated at Yale University. Among the results, the IBM team has used a 3D qubit to extend the amount of time that the qubits retain their quantum states up to 100 microseconds – an improvement of 2 to 4 times upon previously reported records. This value reaches just past the minimum threshold to enable effective error correction schemes and suggests that scientists can begin to focus on broader engineering aspects for scalability.

In separate experiments, the group at IBM also demonstrated a more traditional “two-dimensional” qubit (2D qubit) device and implemented a two-qubit logic operation – a controlled-NOT (CNOT) operation, which is a fundamental building block of a larger quantum computing system. Their operation showed a 95 percent success rate, enabled in part by the long coherence time of nearly 10 microseconds. These numbers are on the cusp of effective error correction schemes and greatly facilitate future multi-qubit experiments.
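To make the controlled-NOT operation mentioned above more concrete, here is a minimal Python sketch of an ideal, error-free CNOT acting on a two-qubit state. It assumes the state is stored as four amplitudes in the order |00>, |01>, |10>, |11>, with the first qubit as the control; the function name `apply_cnot` is hypothetical, and the sketch says nothing about how IBM's device physically implements the gate or about its 95 percent success rate.

```python
import math

def apply_cnot(state):
    """Apply an ideal CNOT (first qubit = control, second = target).

    `state` is a list of four amplitudes ordered |00>, |01>, |10>, |11>.
    Hypothetical helper for illustration only.
    """
    a00, a01, a10, a11 = state
    # The target flips only when the control is 1, so |10> and |11> swap.
    return [a00, a01, a11, a10]

# Control is 1, target is 0: the target flips, giving |11>.
print(apply_cnot([0, 0, 1, 0]))

# Control in an equal superposition: (|00> + |10>) / sqrt(2).
s = 1 / math.sqrt(2)
control_in_superposition = [s, 0.0, s, 0.0]

# The result is (|00> + |11>) / sqrt(2), an entangled two-qubit state.
entangled = apply_cnot(control_in_superposition)
print(entangled)
```

Applying the function twice returns the original state, which reflects the fact that an ideal CNOT is its own inverse.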

The implementation of a practical quantum computer poses tremendous scientific and technological challenges, but all results taken together paint an optimistic picture of rapid progress in that direction.

Core device technology and performance metrics at IBM have improved by a factor of 100 to 1,000 since the middle of 2009, culminating in recent results that are very close to the minimum requirements for a full-scale quantum computing system as determined by the world-wide research community. In these advances, IBM stresses the importance and value of the ongoing exchange of information and learning with the quantum computing research community as well as direct university and industrial collaborations.

“The superconducting qubit research led by the IBM team has been progressing in a very focused way on the road to a reliable, scalable quantum computer. The device performance that they have now reported brings them nearly to the tipping point; we can now see the building blocks that will be used to prove that error correction can be effective, and that reliable logical qubits can be realized,” observes David DiVincenzo, professor at the Institute of Quantum Information, Aachen University and Forschungszentrum Juelich.

Based on this progress, optimism about superconducting qubits and the possibilities for a future quantum computer is growing rapidly. While most of the work in the field to date has focused on improvements in device performance, efforts in the community must now include systems integration aspects, such as assessing the classical information processing demands for error correction, I/O issues, feasibility, and costs with scaling.

IBM envisions a practical quantum computing system as including a classical system intimately connected to the quantum computing hardware. Expertise in communications and packaging technology will be essential at and beyond the level presently practiced in the development of today’s most sophisticated digital computers.

Provided by IBM