Mapping the protein universe

To understand how life works, figure out the proteins first. DNA is the architect of life, but proteins are the workhorses. After proteins are built using DNA blueprints, they are constantly at work breaking down and building up all parts of the cell—ferrying oxygen around the body, sending signals to patch wounds, transmitting thoughts across neurons, breaking down oil or cellulose, and creating biofuels for energy.

But despite proteins' vital contributions, we know little about what the vast majority of proteins do, and even less about how they do it. Although scooping up microbes from the environment and sequencing their DNA keeps getting cheaper, we don't yet have specialized tools to relate the novel proteins and patterns found in environmental samples to a complete collection of known proteins.

One critically needed tool is good software—and that's what a group of researchers from five national laboratories, led by the U.S. Department of Energy's Argonne National Laboratory, are building in a project called "Mapping the Protein Universe."

"For everything other than a handful of well-studied model organisms, we don't know what the majority of genes, and thus proteins, do," said Argonne associate laboratory director Rick Stevens, who is leading the project. "Ultimately, you want to take raw DNA sequences and turn them into a complete set of good predictions about proteins and their potential functions."

Consider a soil sample taken from the site of a toxic spill. It contains bacteria, viruses, fungi and even small insects and plants, and running its contents through a DNA sequencer yields a soup of chopped-up DNA snippets from thousands of different microbes.

To make that data useful, the project plans to develop software to:

- Piece together snippets of DNA into their probable whole genomes, using matching overlaps, probability, and a lot of computer memory.

- Compare this DNA with databases of genes we already know to find matches.

- Flag the protein-coding genes that frequently show up together.

- And finally, use contextual clues to predict what the proteins we don't recognize at all might be for.
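As a rough illustration of the first step, the Python sketch below greedily merges reads by their longest exact suffix/prefix overlap. It is a toy built on strong assumptions: error-free reads, a single source genome, and a hypothetical minimum overlap of 3 bases. The function names are hypothetical too. It is not the project's software, and production metagenome assemblers typically use graph-based methods (such as de Bruijn graphs) that scale to far larger data.

```python
def overlap(a: str, b: str, min_len: int = 3) -> int:
    """Length of the longest suffix of `a` that matches a prefix of `b`.

    Returns 0 if no such overlap is at least `min_len` characters long.
    """
    start = 0
    while True:
        # Look for the first min_len characters of b somewhere in a.
        start = a.find(b[:min_len], start)
        if start == -1:
            return 0
        # Accept the hit only if everything from there to the end of a matches b.
        if b.startswith(a[start:]):
            return len(a) - start
        start += 1


def greedy_assemble(reads: list[str], min_len: int = 3) -> list[str]:
    """Toy greedy assembler: repeatedly merge the pair of contigs with the longest overlap."""
    contigs = list(dict.fromkeys(reads))  # drop duplicate reads, keep order
    # Drop reads that are fully contained in another read.
    contigs = [r for r in contigs if not any(r != o and r in o for o in contigs)]
    while True:
        best_len, best_i, best_j = 0, -1, -1
        for i, a in enumerate(contigs):
            for j, b in enumerate(contigs):
                if i != j:
                    olen = overlap(a, b, min_len)
                    if olen > best_len:
                        best_len, best_i, best_j = olen, i, j
        if best_len == 0:
            return contigs  # no overlaps left: these are the final contigs
        # Join the best pair, writing the shared bases only once.
        merged = contigs[best_i] + contigs[best_j][best_len:]
        contigs = [c for k, c in enumerate(contigs) if k not in (best_i, best_j)]
        contigs.append(merged)


if __name__ == "__main__":
    print(greedy_assemble(["AGGCTTC", "GATTACA", "TACAGGC"]))
```

Here the three reads are stitched into `GATTACAGGCTTC` via their shared four-base overlaps (`TACA`, then `AGGC`), regardless of input order. Always taking the longest overlap keeps the code short, but it can mis-assemble repeated sequences. That is one reason real environmental data calls for probabilistic methods and a lot of memory.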

Proteins frequently appear together because they are part of the same pathway or reaction chain: one protein breaks down a molecule, the next takes one piece to build a new molecule, and so forth. Understanding these pathways is important for researchers trying to build methods to manufacture fuels or other products.

Perhaps researchers are trying to build a catalyst or drug that depends on one protein, but they don't know that other proteins have to be present for the reaction to occur.

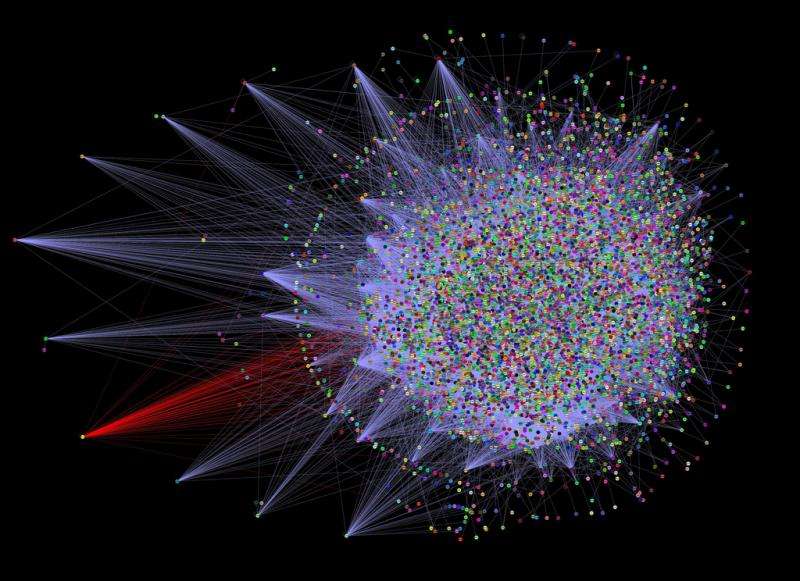

Software can help identify these partner proteins by looking for genes that frequently occur together or sit physically close to each other on the chromosome, especially when that pattern holds across many different species.
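One simple way to express the "physically close, across many species" idea is to count, for each pair of gene families, how many genomes place them next to each other. The sketch below assumes each genome has already been reduced to an ordered list of hypothetical gene-family labels along one chromosome. The `window` and `min_genomes` thresholds are illustrative choices, not values from the project.

```python
from collections import Counter


def neighbor_pairs(genome: list[str], window: int = 1) -> set[tuple[str, str]]:
    """Unordered pairs of gene families lying within `window` positions of each other."""
    pairs = set()
    for i, a in enumerate(genome):
        for b in genome[i + 1 : i + 1 + window]:
            if a != b:
                pairs.add((min(a, b), max(a, b)))  # store each pair in a canonical order
    return pairs


def conserved_neighbors(
    genomes: dict[str, list[str]], window: int = 1, min_genomes: int = 2
) -> dict[tuple[str, str], int]:
    """Pairs that are neighbors in at least `min_genomes` genomes, with their counts."""
    counts = Counter()
    for gene_order in genomes.values():
        # neighbor_pairs returns a set, so each genome votes for a pair at most once.
        counts.update(neighbor_pairs(gene_order, window))
    return {pair: n for pair, n in counts.items() if n >= min_genomes}
```

Consider three toy genomes with gene orders `A B C D`, `D B A E` and `E A B C`. With `window=1, min_genomes=3`, only the pair `("A", "B")` survives, because it is adjacent in all three. A pair like that is a candidate for proteins that work together.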

"If you have network data from a project like this, you can look by association," said Brookhaven computational biologist Sergei Maslov. "For example, what proteins are more common in bacteria that live in areas contaminated by mercury? Perhaps those proteins are involved in breaking down mercury to a less harmful form."
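Maslov's mercury example amounts to an enrichment question: which protein families are more common in genomes from contaminated sites than elsewhere? The sketch below scores each family by a smoothed log2 ratio of its prevalence in the two groups. The inputs (one set of hypothetical protein-family IDs per genome) and the pseudocount are assumptions. A real analysis would typically add a significance test and account for how closely related the sampled organisms are.

```python
import math


def enrichment(
    site_genomes: list[set[str]],
    other_genomes: list[set[str]],
    pseudocount: float = 1.0,
) -> list[tuple[str, float]]:
    """Rank protein families by log2(prevalence at the site / prevalence elsewhere)."""
    families = set().union(*site_genomes, *other_genomes)
    scores = []
    for fam in families:
        in_site = sum(fam in g for g in site_genomes)
        in_other = sum(fam in g for g in other_genomes)
        # Smoothed prevalence: a family absent from one group never yields log(0)
        # or a division by zero.
        p_site = (in_site + pseudocount) / (len(site_genomes) + 2 * pseudocount)
        p_other = (in_other + pseudocount) / (len(other_genomes) + 2 * pseudocount)
        scores.append((fam, math.log2(p_site / p_other)))
    # Most enriched at the contaminated site first.
    return sorted(scores, key=lambda s: s[1], reverse=True)
```

Suppose three hypothetical mercury-site genomes all carry `P1` and three comparison genomes all lack it. Then `P1` tops the ranking with a score of 2.0, since its smoothed prevalence is 0.8 versus 0.2. Families found equally often in both groups score 0.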

The project would give researchers an extensive library of proteins, as well as new computational approaches for handling data on this scale.

"This type of work is usually done on a set with fewer than 10,000 species. We are doing 50,000 species plus integrating all the large-scale public metagenomes," said Maslov. "When it's completed, it will be orders of magnitude larger than anything that has been done before."

"We're bringing all these threads together for the first time, and it really requires a new way of thinking to integrate all of this data," Stevens said.

The effort aligns with the U.S. Department of Energy's push to find ways to effectively use "big data" to solve challenging scientific questions, especially as scientists collect more and more data from synchrotrons, climate stations, metagenomics and other sources. The national laboratories are well suited to problems involving large datasets, thanks to their supercomputers, particularly those at the Leadership Computing Facilities, and to highly trained teams that routinely wrestle with such data.

"There are all kinds of chemistries that we know nothing about—any of which might be keys to new drugs, new forms of energy or more efficient industrial processes," Stevens said. "This will help us bring our full computational power to bear on these important questions."

Provided by Argonne National Laboratory