Tiny chip mimics brain, delivers supercomputer speed

Researchers Thursday unveiled a powerful new postage-stamp size chip delivering supercomputer performance using a process that mimics the human brain.

The so-called "neurosynaptic" chip is a breakthrough that opens a wide new range of computing possibilities, from self-driving cars to artificial intelligence systems that can be installed on a smartphone, the scientists say.

The researchers, from IBM, Cornell Tech and collaborators around the world, said they took an entirely new design approach compared with previous computer architectures, moving toward a system called "cognitive computing."

"We have taken inspiration from the cerebral cortex to design this chip," said IBM chief scientist for brain-inspired computing, Dharmendra Modha, referring to the command center of the brain.

He said existing computers trace their lineage back to machines from the 1940s which are essentially "sequential number-crunching calculators" that perform mathematical or "left brain" tasks but little else.

The new chip, dubbed "TrueNorth," works to mimic the "right brain" functions of sensory processing, responding to sights, smells and information from the environment to "learn" to respond in different situations, Modha said.

It accomplishes this task by using a huge network of "neurons" and "synapses," similar to how the human brain functions by using information gathered from the body's sensory organs.
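To give a feel for what a network of "neurons" and "synapses" does, here is a minimal sketch of a single spiking neuron using a generic textbook integrate-and-fire model. It is not TrueNorth's actual neuron design, which the article does not describe; the function name `simulate_neuron`, the weights, the threshold and the leak value are all hypothetical choices made for illustration.

```python
from typing import List


def simulate_neuron(
    input_spikes: List[List[int]],
    weights: List[float],
    threshold: float = 1.0,  # assumed firing threshold
    leak: float = 0.1,       # assumed leak per time step
) -> List[int]:
    """Return the neuron's output spike train (0 or 1 per time step)."""
    potential = 0.0
    output = []
    for spikes in input_spikes:
        # Integrate: add the weight of every synapse that received a spike.
        potential += sum(w * s for w, s in zip(weights, spikes))
        # Leak: lose a fixed amount each step, but never drop below zero.
        potential = max(0.0, potential - leak)
        if potential >= threshold:
            # Fire: emit a spike and reset the potential.
            output.append(1)
            potential = 0.0
        else:
            output.append(0)
    return output


# Three synapses (one inhibitory) observed over four time steps.
weights = [0.6, 0.5, -0.3]
inputs = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 0]]
print(simulate_neuron(inputs, weights))
```

The neuron stays silent while its inputs arrive one at a time and fires only when enough weighted spikes accumulate, which is the basic event-driven behavior that brain-inspired designs build on.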

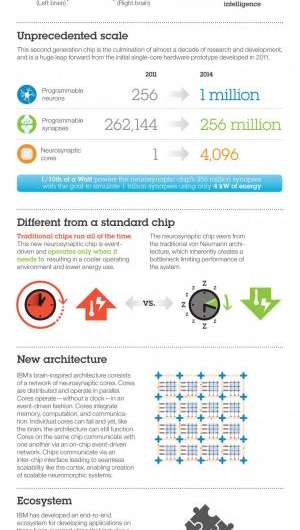

The researchers designed TrueNorth with one million programmable neurons and 256 million programmable synapses, on a chip with 4,096 cores and 5.4 billion transistors.
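As a rough sense of scale, the figures above can be divided out per neuron and per core. The sketch below uses only the rounded numbers quoted in the article and assumes, purely for illustration, that resources are split evenly across cores; the article does not describe the actual per-core layout.

```python
# Rounded figures as quoted in the article.
NEURONS = 1_000_000
SYNAPSES = 256_000_000
CORES = 4_096
TRANSISTORS = 5_400_000_000


def per_core(total: int, cores: int = CORES) -> float:
    """Average share of `total` per core, assuming an even split (an assumption)."""
    return total / cores


synapses_per_neuron = SYNAPSES / NEURONS
neurons_per_core = per_core(NEURONS)
synapses_per_core = per_core(SYNAPSES)
transistors_per_core = per_core(TRANSISTORS)

print(f"synapses per neuron:  {synapses_per_neuron:,.0f}")
print(f"neurons per core:     {neurons_per_core:,.1f}")
print(f"synapses per core:    {synapses_per_core:,.0f}")
print(f"transistors per core: {transistors_per_core:,.0f}")
```

Because the inputs are rounded, the per-core results are averages rather than exact design parameters.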

A key to the performance is the extremely low energy use on the new chip, which runs on the equivalent energy of a hearing-aid battery.

Sensor becomes the computer

This can allow a chip installed in a car or smartphone to perform supercomputer calculations in real time without connecting to the cloud or other network.

"The sensor becomes the computer," Modha told AFP in a phone interview.

"You could have better sensory processors without the connection to Wi-Fi or the cloud."

This would allow a self-driving vehicle, for example, to detect problems and deal with them even if its data connection is broken.

"It can see an accident about to happen," Modha said.

Similarly, a mobile phone can take smells or visual information and interpret them in real time, without the need for a network connection.

"After years of collaboration with IBM, we are now a step closer to building a computer similar to our brain," said Rajit Manohar, a researcher at Cornell Tech, a graduate school of Cornell University.

The project, funded by the US Defense Advanced Research Projects Agency (DARPA), published its research as a cover article in the August 8 edition of the journal Science.

The researchers say TrueNorth in some ways outperforms today's supercomputers although a direct comparison is not possible because they operate differently.

But they wrote that TrueNorth can deliver from 46 billion to 400 billion "synaptic" calculations per second per watt of energy. That compares with the most energy-efficient supercomputer, which delivers 4.5 billion "floating point" calculations per second per watt.
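The per-watt figures can be set side by side with a short calculation. This is only a naive ratio: a "synaptic" calculation and a "floating point" calculation are different kinds of operation, so the result is a rough indication of scale, not a like-for-like benchmark. The variable and function names are hypothetical.

```python
# Per-watt figures as quoted in the article (operations per second per watt).
TRUENORTH_SYNAPTIC_OPS_PER_WATT = (46e9, 400e9)  # reported low and high ends
SUPERCOMPUTER_FLOPS_PER_WATT = 4.5e9             # most energy-efficient supercomputer


def naive_ratio(synaptic_ops: float, flops: float = SUPERCOMPUTER_FLOPS_PER_WATT) -> float:
    """Ratio of the two per-watt figures, ignoring that the operations differ."""
    return synaptic_ops / flops


low, high = (naive_ratio(x) for x in TRUENORTH_SYNAPTIC_OPS_PER_WATT)
print(f"Roughly {low:.1f}x to {high:.1f}x more operations per second per watt")
```

On these numbers the chip performs on the order of ten to ninety times as many operations per watt, with the caveat that the operations being counted are not equivalent.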

The chip was fabricated using Samsung's 28-nanometer process technology.

"It is an astonishing achievement to leverage a process traditionally used for commercially available, low-power mobile devices to deliver a chip that emulates the human brain by processing extreme amounts of sensory information with very little power," said Shawn Han of Samsung Electronics, in a statement.

"This is a huge architectural breakthrough that is essential as the industry moves toward the next-generation cloud and big-data processing."

Modha said the researchers have produced only the chip and that it could be years before commercial applications become available.

But he said it "has the potential to transform society" with a new generation of computing technology. And he noted that hybrid computers may be able to one day combine the "left brain" machines with the new "right brain" devices for even better performance.

More information: "A million spiking-neuron integrated circuit with a scalable communication network and interface," by P.A. Merolla et al. Science, 2014. www.sciencemag.org/lookup/doi/ … 1126/science.1254642

Journal information: Science

© 2014 AFP