Teachable moments: Robots learn our humanistic ways

(Phys.org) —Robots can observe human behavior and—like a human baby—deduce a reasonable approach to handling specific objects.

Using new algorithms developed by Cornell researchers, a robot saw a human eating a meal and then cleared the table without spilling liquids or leftover foods. In another test, the robot watched a human taking medicine and fetched a glass of water. After seeing a human pour milk into cereal, the robot decided—on its own—to return the milk to the refrigerator.

Ashutosh Saxena, assistant professor of computer science, graduate student Hema Koppula and colleagues describe their new methods in "Learning Human Activities and Object Affordances from RGB-D Videos," in a forthcoming issue of the International Journal of Robotics Research. Saxena's goal is to develop learning algorithms to enable robots to assist humans at home and in the workplace.

Saxena's team previously programed robots to identify human activities and common objects, using a Microsoft Kinect 3-D video camera. They have put those capabilities together to enable a robot to discover what to do with each object. For example, a water pitcher is "pourable," while a cup is "pour-to." Both are "movable."

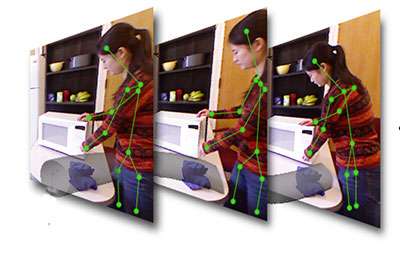

A robot learns by analyzing video of a human handling an object. It treats the body as a skeleton, with the 3-D coordinates of joints and the angles made by limbs tracked from one frame to the next. The sequence is compared with a database of activities, using a mathematical method that adds up all the probabilities of a match for each step. This allows for the possibility that part of the activity might be hidden from view as the person moves around.

The robot "trains" by observing several different people performing the same activity, breaking it down into sub-activities like reaching, pouring or lifting, and learns what the various versions have in common. Observing a new person, it computes the probability of a match with each of the activities it has learned about and selects the best match.

At the same time, the robot examines the part of the image around the skeleton's hands and compares it to a database of objects, to build a list of activities associated with each object. That's how it knows, for example, that cups and plates are usually held level to avoid spilling their contents.

"It is not about naming the object," Saxena said. "It is about the robot trying to figure out what to do with the object."

Neuroscientists, he noted, suggest that human babies learn in the same way. "As babies we learn to name things later in life, but first we learn how to use them," he explained. Eventually, he added, a robot might be able to learn how to perform the entire human activity.

In experiments, the robot can figure out the use of the object correctly 79 percent of the time. If the robot has observed humans performing different activities with the same object, it performs new tasks with that object with 100 percent accuracy. As assistive robots become common, Saxena said, they may be able to draw on a large common database of object uses and learn from each other.

Provided by Cornell University