Wireless data centers could be faster, cheaper, greener

(Phys.org)—Cornell computer scientists have proposed an innovative wireless design that could greatly reduce the cost and power consumption of massive cloud computing data centers, while improving performance.

In the "cloud," data is stored and processed in remote data centers. Economies of scale let cloud providers offer these services at far lower cost than buying and maintaining one's own equipment.

But data centers with tens of thousands of computers draw tens of thousands of kilowatts.

"Reducing power consumption would not only cut costs but would be a benefit to the environment," said Hakim Weatherspoon, assistant professor of computer science. Weatherspoon; Emin Gün Sirer, associate professor of computer science; graduate student Ji-Yong Shin; and Darko Kirovski of Microsoft Research have prepared a feasibility study for what they call a "Cayley Data Center," based on wireless networking and named for mathematician Arthur Cayley, who laid out in 1854 the mathematics they used in their design.

Their proposal is available in the Cornell eCommons information repository and will be presented at the Eighth ACM/IEEE Symposium on Architectures for Networking and Communications Systems Oct. 29-30 at the University of Texas in Austin.

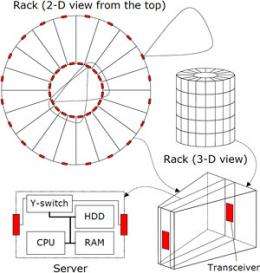

The design was inspired by the availability of a new 60 gigahertz (GHz) wireless transceiver developed at Georgia Tech based on inexpensive CMOS chip technology. The transceiver transmits in a narrow cone, and 60 GHz radiation is quickly attenuated by the air and reaches only about 10 meters from the source, so the device can be used for short-range communication that will not interfere with other activity nearby.

In a conventional data center, servers are stacked in square racks like pizza boxes in a delivery truck. On top of every stack is a "switch," a fairly expensive and power-hungry box that routes signals in and out of the servers and sends them off on wires to other servers, based on their electronic addresses.

In the proposed design, servers are mounted vertically in cylindrical racks several tiers high. Think of a wedding cake in which every tier is the same diameter, and one wedge-shaped slice of any tier represents a server. Each server has two 60 GHz transceivers, one at its outer end and one at its inner end. With racks arranged in rows, the outer transceivers give each rack line-of-sight wireless connectivity to eight other racks (except at the edges), and the transceivers at the inner ends connect servers within the rack.

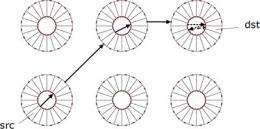

Instead of depending on switches, servers do their own routing, based on the physical location of the destination. Signals pass rack to rack, each time moving in the direction that looks like the shortest route across the floor. A Cayley Data Center would be more resistant to failure, the researchers said, because even if an entire rack died, signals could go around it. A simulation showed that 59 percent of servers in a center would have to fail before communication broke down.
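To make the idea of location-based routing concrete, here is a minimal Python sketch. It is not the researchers' algorithm: it assumes racks sit on a simple rows-by-columns grid, that each rack can reach only its (up to) eight surrounding racks, and that a signal always hops to the working neighbor closest to the destination. The function names `neighbors` and `greedy_route` are made up for this illustration.

```python
import math


def neighbors(rack, rows, cols):
    """Racks assumed to be in line of sight: the surrounding grid cells.

    Interior racks have eight neighbors; racks at the edges have fewer.
    """
    r, c = rack
    return [
        (r + dr, c + dc)
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
        and 0 <= r + dr < rows
        and 0 <= c + dc < cols
    ]


def greedy_route(src, dst, rows, cols, failed=frozenset()):
    """Hop rack to rack toward dst using only physical positions.

    At each step, pick the working, not-yet-visited neighbor with the
    smallest straight-line distance to dst. Returns the list of racks
    visited, or None if this simple greedy rule reaches a dead end.
    """
    path = [src]
    visited = {src}
    current = src
    while current != dst:
        candidates = [
            n for n in neighbors(current, rows, cols)
            if n not in failed and n not in visited
        ]
        if not candidates:
            return None  # dead end: a real design would need a fallback
        current = min(candidates, key=lambda n: math.dist(n, dst))
        visited.add(current)
        path.append(current)
    return path


# Healthy 4x4 floor: the signal moves diagonally across the room.
print(greedy_route((0, 0), (3, 3), 4, 4))
# → [(0, 0), (1, 1), (2, 2), (3, 3)]

# Same trip with rack (1, 1) dead: the route bends around it.
print(greedy_route((0, 0), (3, 3), 4, 4, failed={(1, 1)}))
```

A purely greedy rule like this can get stuck when many racks are down, which is why the sketch returns `None` instead of guaranteeing delivery.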

Cost comparisons are difficult because the 60 GHz transceivers are not yet commercially available for data centers, but by making some guesses, the researchers suggest that the cost of wireless connectivity could be as low as 1/12 that of conventional switches and wires for a hypothetical data center with 10,000 servers. Power consumption would go down by a similar amount, they said, and with no wires, maintenance would be easier.

"We argue that 60 GHz could revolutionize the simplicity of integrating and maintaining data centers," the researchers concluded in their paper.

Provided by Cornell University