
Algorithm raises new questions about Cascadia earthquake record

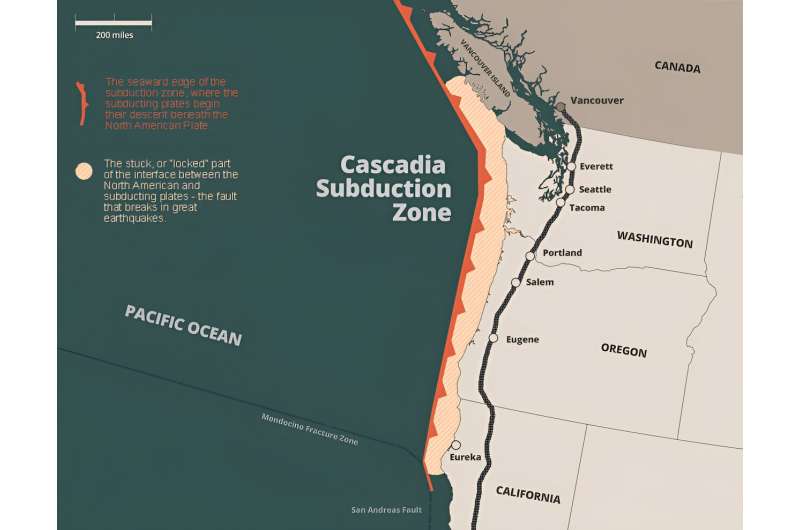

The Cascadia subduction zone in the Pacific Northwest has a history of producing powerful and destructive earthquakes that have sunk forests and spawned tsunamis that reached all the way to the shores of Japan.

The most recent great earthquake was in 1700. But it probably won't be the last. And the area that stands to be affected now holds bustling metropolises that are home to millions of people.

Figuring out the frequency of earthquakes—and when the next "big one" will happen—is an active scientific question that involves looking for signs of past earthquakes in the geologic record in the form of shaken-up rocks, sediment and landscapes.

However, a study by scientists at The University of Texas at Austin and collaborators is calling into question the reliability of an earthquake record that covers thousands of years—a type of geologic deposit called a turbidite that's found in the strata of the seafloor.

The researchers analyzed a selection of turbidite layers from the Cascadia subduction zone dating back about 12,000 years, using an algorithm that assessed how well the layers correlated with one another.

They found that in most cases, the correlation between the turbidite samples was no better than random. Since turbidites can be caused by a range of phenomena, and not just earthquakes, the results suggest that the turbidite record's connection to past earthquakes is more uncertain than previously thought.

"We would like everyone citing the intervals of Cascadia subduction earthquakes to understand that these timelines are being questioned by this study," said Joan Gomberg, a research geophysicist at the U.S. Geological Survey and study co-author. "It's important to conduct further research to refine these intervals. What we do know is that Cascadia was seismically active in the past and will be in the future, so ultimately, people need to be prepared."

The results don't necessarily change the estimated frequency of Cascadia earthquakes, about one every 500 years, the researchers said. The current frequency estimate is based on a range of data and interpretations, not just the turbidites analyzed in this study. However, the results do highlight the need for more research on turbidite layers, specifically, and how they relate to each other and large earthquakes.

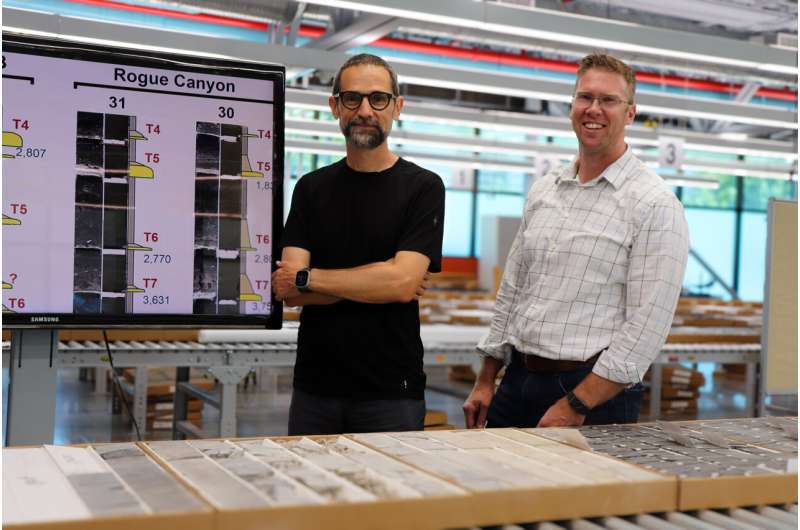

Co-author Jacob Covault, a research professor at the UT Jackson School of Geosciences, said the algorithm offers a quantitative tool that provides a replicable method for interpreting ancient earthquake records, which are usually based on more qualitative descriptions of geology and their potential associations.

"This tool provides a repeatable result, so everybody can see the same thing," said Covault, the co-principal investigator of the Quantitative Clastics lab at the Jackson School's Bureau of Economic Geology. "You can potentially argue with that result, but at least you have a baseline, an approach that is reproducible."

The results were published in the journal Geological Society of America Bulletin. The study included researchers from the USGS, Stanford University and the Alaska Division of Geological & Geophysical Surveys.

Turbidites are the remnants of underwater landslides. They're made of sediments that settled back down to the seafloor after being flung into the water by the turbulent motion of sediment rushing across the ocean floor. The sediment in these layers has a distinctive gradation, with coarser grains at the bottom and finer ones at the top.

But there's more than one way to make a turbidite layer. Earthquakes can cause landslides when they shake up the seafloor. But so can storms, floods and a range of other natural phenomena, albeit on a smaller geographical scale.

Currently, connecting turbidites to past earthquakes usually involves finding them in geologic cores taken from the seafloor. If a turbidite shows up in roughly the same spot in multiple samples across a relatively large area, it's counted as a remnant of a past earthquake, according to the researchers.

Although carbon dating samples can help narrow down timing, there's still a lot of uncertainty in determining whether turbidites that appear at about the same time and place were produced by the same event.

Getting a better handle on how different turbidite samples relate to one another inspired the researchers to apply a more quantitative method, an algorithm called "dynamic time warping," to the turbidite data. The method dates back to the 1970s and has a wide range of applications, from voice recognition to smoothing out graphics in dynamic VR environments.

This is the first time it has been applied to analyzing turbidites, said co-author Zoltán Sylvester, a research professor at the Jackson School and co-principal investigator of the Quantitative Clastics Lab, who led the adaptation of the algorithm for analyzing turbidites.

"This algorithm has been a key component of a lot of the projects I have worked on," said Sylvester. "But it's still very much underused in the geosciences."

The algorithm compares two data series that may be stretched or compressed relative to each other, finds the alignment that matches them best, and measures how closely they match once aligned.

For voice recognition software, that means recognizing key words even though they might be spoken at different speeds or pitches. For the turbidites, it involves recognizing shared magnetic properties between different turbidite samples that may look different from location to location despite originating from the same event.
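As a rough illustration of what the algorithm does, the Python sketch below implements textbook dynamic time warping for two short, made-up magnetic susceptibility logs. It is not the study's code: the function name `dtw_distance`, the use of absolute difference as the mismatch between samples, and all example values are assumptions. A cost of zero means one log can be stretched or compressed to match the other exactly.

```python
import math


def dtw_distance(a, b):
    """Textbook dynamic time warping (DTW) cost between two 1-D series.

    cost[i][j] is the cheapest cumulative mismatch for aligning a[:i] with b[:j].
    """
    n, m = len(a), len(b)
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])  # mismatch between the two samples
            # Extend the cheapest neighboring alignment: advance both series
            # (diagonal), or hold one series on a sample while the other advances.
            cost[i][j] = local + min(cost[i - 1][j - 1], cost[i - 1][j], cost[i][j - 1])
    return cost[n][m]


# Hypothetical magnetic susceptibility logs (made-up values, not study data).
core_a = [1, 1, 5, 9, 5, 1, 1]
core_b = [1, 1, 1, 5, 5, 9, 9, 5, 1, 1]  # same peak, recorded over more samples
core_c = [9, 9, 5, 1, 1, 1, 1, 5, 9, 9]  # trough where the other logs peak

print(dtw_distance(core_a, core_b))  # 0.0
print(dtw_distance(core_a, core_c))  # positive: no cost-free alignment exists
```

Here `core_b` records the same peak pattern over more samples, as a thicker deposit from the same event might, so the warped alignment costs nothing, while `core_c` cannot be aligned for free. A plain sample-by-sample comparison would not even apply directly, because the logs have different lengths.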

"Correlating turbidites is no simple task," said co-author Nora Nieminski, the coastal hazards program manager for the Alaska Division of Geological & Geophysical Surveys. "Turbidites commonly demonstrate significant lateral variability that reflect their variable flow dynamics. Therefore, it is not expected for turbidites to preserve the same depositional character over great distances, or even small distances in many cases, particularly along active margins like Cascadia or across various depositional environments."

The researchers also subjected the algorithm's correlations to another level of scrutiny. They compared the results with correlations calculated from synthetic data: 10,000 pairs of random turbidite layers. This synthetic comparison served as a control against coincidental matches in the actual samples.
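A control of this kind can be sketched in a few lines. One common way to use it, assumed here, is an empirical p-value: the share of random pairs that align at least as well as the real pair. The Python sketch below is hypothetical throughout. It builds each random pair by shuffling the samples of two made-up logs, which may differ from how the study generated its synthetic data, and it repeats a textbook dynamic time warping function so the block runs on its own. The names `dtw_distance` and `empirical_p_value` are illustrative.

```python
import math
import random


def dtw_distance(a, b):
    """Textbook dynamic time warping cost between two 1-D series."""
    n, m = len(a), len(b)
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            cost[i][j] = local + min(cost[i - 1][j - 1], cost[i - 1][j], cost[i][j - 1])
    return cost[n][m]


def empirical_p_value(log_a, log_b, n_random=10_000, seed=0):
    """Share of random pairs whose DTW cost is at least as low as the real pair's.

    Hypothetical null model: each random pair is made by shuffling the samples of
    both logs, which keeps their values but destroys any shared ordering.
    """
    rng = random.Random(seed)
    observed = dtw_distance(log_a, log_b)
    hits = 0
    for _ in range(n_random):
        rand_a, rand_b = list(log_a), list(log_b)
        rng.shuffle(rand_a)
        rng.shuffle(rand_b)
        if dtw_distance(rand_a, rand_b) <= observed:
            hits += 1
    # Add-one correction keeps the estimate above zero with finitely many draws.
    return (hits + 1) / (n_random + 1)


# Made-up logs: core_b is a stretched copy of core_a's peak pattern.
core_a = [1, 1, 5, 9, 5, 1, 1]
core_b = [1, 1, 1, 5, 5, 9, 9, 5, 1, 1]

if __name__ == "__main__":
    print(f"observed DTW cost: {dtw_distance(core_a, core_b)}")
    print(f"empirical p-value: {empirical_p_value(core_a, core_b):.4f}")
```

With these inputs the shuffled pairs almost never align perfectly, so the p-value comes out small. A real pair whose cost is no lower than that of typical random pairs would instead get a large p-value, which is one way to make "no better than random" precise.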

The researchers applied their technique to magnetic susceptibility logs for turbidite layers in nine geologic cores that were collected during a scientific cruise in 1999. They found that in most cases, the connection between turbidite layers that had been previously correlated was no better than random. The only exception to this trend was for turbidite layers that were relatively close together—no more than about 15 miles apart.

The researchers emphasize that the algorithm is just one way of analyzing turbidites, and that including other data could change the degree of correlation between the cores one way or another. But according to these results, the presence of turbidites at the same time and in the same general area of the geologic record is not enough to definitively connect them to one another.

And although algorithms and machine learning approaches can help with that task, it's up to geoscientists to interpret the results and see where the research leads.

"We are here for answering questions, not just applying the tool," Sylvester said. "But at the same time, if you are doing this kind of work, then it forces you to think very carefully."

More information: Nora M. Nieminski et al, Turbidite correlation for paleoseismology, Geological Society of America Bulletin (2024). DOI: 10.1130/B37343.1

Provided by University of Texas at Austin