Machine learning contributes to better quantum error correction

Researchers from the RIKEN Center for Quantum Computing have used machine learning to perform error correction for quantum computers, a crucial step toward making these devices practical. Their approach relies on an autonomous correction system that, despite being approximate, can efficiently determine how best to make the necessary corrections.

The research is published in the journal Physical Review Letters.

In contrast to classical computers, which operate on bits that can only take the values 0 and 1, quantum computers operate on "qubits", which can be in any superposition of the two computational basis states. Combined with quantum entanglement, another quantum property that correlates qubits in ways no classical system can, this lets quantum computers perform entirely new kinds of operations, which could give them an advantage in certain computational tasks such as large-scale searches, optimization problems, and cryptography.
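
To make superposition and entanglement a little more concrete, here is a minimal Python sketch (an illustration, not part of the study) that represents a single qubit by two complex amplitudes, computes readout probabilities with the Born rule, and checks whether a two-qubit pure state factorizes into two independent qubits. The function names are hypothetical.

```python
import cmath
import math


def normalize(alpha: complex, beta: complex) -> tuple[complex, complex]:
    """Rescale amplitudes so that |alpha|^2 + |beta|^2 = 1."""
    norm = math.sqrt(abs(alpha) ** 2 + abs(beta) ** 2)
    return alpha / norm, beta / norm


def measurement_probabilities(alpha: complex, beta: complex) -> tuple[float, float]:
    """Born rule: probabilities of reading out 0 and 1 for alpha|0> + beta|1>."""
    return abs(alpha) ** 2, abs(beta) ** 2


def is_product_state(c00: complex, c01: complex, c10: complex, c11: complex,
                     tol: float = 1e-12) -> bool:
    """A two-qubit pure state c00|00> + c01|01> + c10|10> + c11|11> splits into
    two independent qubits exactly when c00*c11 - c01*c10 == 0; otherwise
    it is entangled."""
    return abs(c00 * c11 - c01 * c10) < tol


if __name__ == "__main__":
    # Equal superposition with a relative phase: both outcomes equally likely.
    a, b = normalize(1, cmath.exp(1j * math.pi / 4))
    print(measurement_probabilities(a, b))  # both approximately 0.5

    s = 1 / math.sqrt(2)
    print(is_product_state(s, 0, 0, s))          # Bell state: False (entangled)
    print(is_product_state(0.5, 0.5, 0.5, 0.5))  # |+>|+>: True (product state)
```

The determinant test works because a two-qubit pure state is a product state exactly when its 2x2 matrix of amplitudes has rank one.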

The main challenge in putting quantum computers into practice stems from the extremely fragile nature of quantum superpositions. Tiny perturbations, caused for instance by unavoidable interactions with the surrounding environment, give rise to errors that rapidly destroy these superpositions, and as a consequence quantum computers lose their edge.
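
How an environment "destroys" a superposition can be illustrated with a textbook toy model of pure dephasing, assumed here purely for illustration: the populations of |0> and |1> stay fixed while the off-diagonal coherence of the qubit's density matrix decays as exp(-t/T2). Once the coherence is gone, the superposition has become an ordinary classical mixture (purity 0.5). The time constant `t2` and the function names are assumptions, in arbitrary units.

```python
import math


def dephased_state(alpha: complex, beta: complex, t: float,
                   t2: float) -> list[list[complex]]:
    """Density matrix of alpha|0> + beta|1> after pure dephasing for time t.

    Toy model (assumption): populations stay fixed, while the off-diagonal
    coherence decays as exp(-t / t2).
    """
    decay = math.exp(-t / t2)
    rho01 = alpha * complex(beta).conjugate() * decay
    return [[abs(alpha) ** 2, rho01],
            [rho01.conjugate(), abs(beta) ** 2]]


def purity(rho: list[list[complex]]) -> float:
    """Tr(rho^2) for a 2x2 Hermitian density matrix: 1 for a pure
    superposition, 0.5 for a completely mixed qubit."""
    return (abs(rho[0][0]) ** 2 + abs(rho[1][1]) ** 2
            + 2 * abs(rho[0][1]) ** 2)


if __name__ == "__main__":
    s = 1 / math.sqrt(2)  # the |+> state, an equal superposition
    for t in (0.0, 0.5, 1.0, 3.0):
        rho = dephased_state(s, s, t, t2=1.0)
        print(f"t = {t:.1f}  |coherence| = {abs(rho[0][1]):.3f}  "
              f"purity = {purity(rho):.3f}")
```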

To overcome this obstacle, sophisticated methods for quantum error correction have been developed. While these can, in theory, neutralize the effect of errors, they often come with a massive overhead in device complexity. That additional hardware is itself error-prone and can even increase the exposure to errors. As a consequence, full-fledged error correction has remained elusive.
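
The tension between redundancy and overhead already shows up in the simplest classical error-correcting code, a repetition code with majority voting. This is a classical analogy only, not a quantum error-correction scheme: each logical bit costs n physical bits, and the redundancy only pays off if each component is reliable enough. For n = 3 the logical error rate is 3p^2 - 2p^3, which is smaller than the physical error rate p only when p < 1/2. The function name is illustrative.

```python
from math import comb


def logical_error_rate(p: float, n: int = 3) -> float:
    """Probability that majority voting over n copies (n odd) returns the
    wrong bit, when each copy flips independently with probability p."""
    if n % 2 == 0:
        raise ValueError("n must be odd so that majority voting is unambiguous")
    # The vote fails when more than half of the copies are flipped.
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k)
               for k in range(n // 2 + 1, n + 1))


if __name__ == "__main__":
    for p in (0.01, 0.1, 0.6):
        print(f"physical p = {p:<5}  logical (n = 3) = {logical_error_rate(p):.6f}")
```

With sufficiently noisy components (p = 0.6 above), majority voting is worse than using a single bit: a crude analogue of the point that the extra machinery for error correction can itself add errors.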

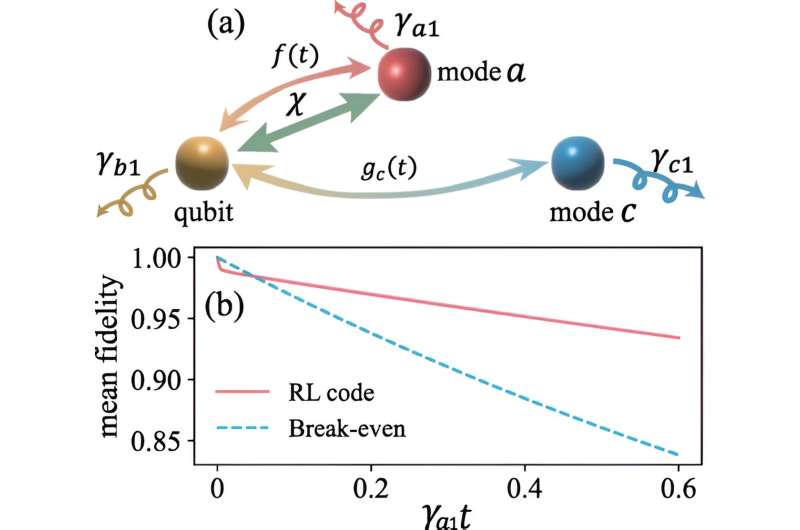

In this work, the researchers used machine learning to search for error correction schemes that minimize the device overhead while maintaining good error-correcting performance. To this end, they focused on an autonomous approach to quantum error correction, in which a carefully engineered artificial environment removes the need to perform frequent error-detecting measurements.
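
The intuition behind autonomous correction can be caricatured with a classical rate equation, which is an assumption made purely for illustration: errors push the system out of its protected states at rate `gamma`, and an engineered dissipative "environment" pumps it back at rate `kappa`, with no measurement involved. The error population then settles at gamma / (gamma + kappa) instead of creeping towards 1. A faithful treatment would use a Lindblad master equation for the full quantum state; this sketch ignores coherence entirely, and the rates are made-up numbers.

```python
def error_population(gamma: float, kappa: float, t_final: float,
                     dt: float = 1e-3) -> float:
    """Forward-Euler integration of the toy rate equation
        dP/dt = gamma * (1 - P) - kappa * P,   P(0) = 0,
    where P is the probability of sitting in an error state, gamma is the
    error rate and kappa is the rate of the engineered 'correcting'
    dissipation (both hypothetical)."""
    p = 0.0
    for _ in range(round(t_final / dt)):
        p += dt * (gamma * (1.0 - p) - kappa * p)
    return p


if __name__ == "__main__":
    gamma, kappa = 0.01, 1.0  # made-up rates in arbitrary units
    print("without correction:", error_population(gamma, 0.0, t_final=20.0))
    print("with autonomous correction:",
          error_population(gamma, kappa, t_final=20.0))
    print("predicted steady state:", gamma / (gamma + kappa))
```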

They also focused on "bosonic qubit encodings", in which a qubit is stored in the states of a harmonic oscillator such as a microwave resonator. Such encodings are available and already used in some of the most promising and widespread quantum computing platforms, which are based on superconducting circuits.
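
As a concrete example of a bosonic encoding (a well-known textbook one, not the encoding found in this study), consider the smallest "binomial" code, which stores a qubit in photon-number (Fock) states of an oscillator: |0_L> = (|0> + |4>)/sqrt(2) and |1_L> = |2>. Both codewords contain only even photon numbers and have the same mean photon number, so losing a single photon flips the parity to odd, where it can be detected, without revealing which codeword was stored. The sketch below checks these properties; the dictionary representation and helper names are illustrative assumptions.

```python
import math


def annihilate(state: dict[int, complex]) -> dict[int, complex]:
    """Photon loss: apply the annihilation operator, a|n> = sqrt(n)|n-1>.
    The vacuum component |0> is sent to zero and dropped (unnormalized)."""
    return {n - 1: math.sqrt(n) * amp for n, amp in state.items() if n > 0}


def mean_photon_number(state: dict[int, complex]) -> float:
    """<n> for a (possibly unnormalized) state given as {n: amplitude}."""
    norm = sum(abs(amp) ** 2 for amp in state.values())
    return sum(n * abs(amp) ** 2 for n, amp in state.items()) / norm


def parity(state: dict[int, complex]) -> int:
    """+1 if only even photon numbers are populated, -1 if only odd, 0 if mixed."""
    parities = {n % 2 for n, amp in state.items() if abs(amp) > 1e-12}
    if parities == {0}:
        return +1
    if parities == {1}:
        return -1
    return 0


# Smallest binomial code: |0_L> = (|0> + |4>)/sqrt(2), |1_L> = |2>.
S = 1 / math.sqrt(2)
ZERO_L = {0: S, 4: S}
ONE_L = {2: 1.0}

if __name__ == "__main__":
    for name, codeword in (("|0_L>", ZERO_L), ("|1_L>", ONE_L)):
        lost = annihilate(codeword)
        print(name, "mean photons:", mean_photon_number(codeword),
              "parity before loss:", parity(codeword),
              "after loss:", parity(lost))
```

Equal mean photon numbers are what the standard error-correction conditions require for single-photon loss in this code; loosely speaking, codes that satisfy such conditions only approximately are called approximate codes.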

Finding high-performing candidates in the vast search space of bosonic qubit encodings is a complex optimization task, which the researchers tackled with reinforcement learning, a machine learning method in which an agent explores a (possibly abstract) environment and, guided by the rewards it receives, learns to optimize its policy for choosing actions.
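
The basic reinforcement-learning loop can be sketched in its simplest form, a multi-armed bandit with an epsilon-greedy policy: the agent repeatedly picks one of several candidate "encodings", receives a noisy reward, updates its running estimate of each candidate's value, and mostly exploits the best candidate while occasionally exploring the others. This is a generic textbook algorithm, not the agent or reward used in the paper; the reward means below are invented placeholders for what, in practice, would be a simulated error-correction figure of merit.

```python
import random


def epsilon_greedy(true_means: list[float], steps: int = 2000,
                   epsilon: float = 0.1, noise: float = 0.1,
                   seed: int = 0) -> tuple[list[float], list[int]]:
    """Sample-average epsilon-greedy agent on a multi-armed bandit.

    Each 'arm' stands for a candidate action (here, hypothetically, a
    candidate encoding); choosing it returns a noisy reward centred on
    true_means[arm]. Returns the value estimates and how often each arm
    was chosen.
    """
    rng = random.Random(seed)
    n_arms = len(true_means)
    estimates = [0.0] * n_arms
    counts = [0] * n_arms
    for t in range(steps):
        if t < n_arms:
            arm = t                                   # try every arm once
        elif rng.random() < epsilon:
            arm = rng.randrange(n_arms)               # explore
        else:
            arm = max(range(n_arms), key=estimates.__getitem__)  # exploit
        reward = rng.gauss(true_means[arm], noise)    # environment's feedback
        counts[arm] += 1
        # Incremental sample-mean update of the value estimate.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts


if __name__ == "__main__":
    # Invented placeholder scores for three hypothetical candidate encodings.
    est, cnt = epsilon_greedy([0.2, 0.5, 0.9])
    best = max(range(len(est)), key=est.__getitem__)
    print("learned values:", [round(v, 2) for v in est])
    print("times chosen:", cnt, "-> greedy policy picks candidate", best)
```

Searching a real space of encodings involves far larger, structured action spaces, which is where more elaborate reinforcement-learning agents come in; the exploration/exploitation trade-off shown here is the common core.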

With this approach, the group found that a surprisingly simple, approximate qubit encoding could not only greatly reduce the device complexity compared with other proposed encodings, but also outperform its competitors in its ability to correct errors.

Yexiong Zeng, the first author of the paper, says, "Our work not only demonstrates the potential for deploying machine learning towards quantum error correction, but it may also bring us a step closer to the successful implementation of quantum error correction in experiments."

According to Franco Nori, "Machine learning can play a pivotal role in addressing large-scale quantum computation and optimization challenges. Currently, we are actively involved in a number of projects that integrate machine learning, artificial neural networks, quantum error correction, and quantum fault tolerance."

More information: Yexiong Zeng et al, Approximate Autonomous Quantum Error Correction with Reinforcement Learning, Physical Review Letters (2023). DOI: 10.1103/PhysRevLett.131.050601

Journal information: Physical Review Letters

Provided by RIKEN