Bug eyes and bat sonar: Bioengineers turn to animal kingdom for creation of bionic super 3D cameras

A pair of UCLA bioengineers and a former postdoctoral scholar have developed a new class of bionic 3D camera systems that can mimic flies' multiview vision and bats' natural sonar sensing, resulting in multidimensional imaging with extraordinary depth range that can also scan through blind spots.

Powered by computational image processing, the camera can decipher the size and shape of objects hidden around corners or behind other items. The technology could be incorporated into autonomous vehicles or medical imaging tools with sensing capabilities far beyond what is considered state of the art today. This research has been published in Nature Communications.

In the dark, bats can visualize a vibrant picture of their surroundings by using a form of echolocation, or sonar. Their high-frequency squeaks bounce off their surroundings and are picked back up by their ears. The minuscule differences in how long it takes for the echo to reach the nocturnal animals and the intensity of the sound tell them in real time where things are, what's in the way and the proximity of potential prey.

Many insects have geometrically shaped compound eyes, each composed of hundreds to tens of thousands of individual light-sensing units, which makes it possible to see the same thing from multiple lines of sight. For example, flies' bulbous compound eyes give them a near-360-degree view, but the eyes have a fixed focal length, making it difficult for them to see anything far away, such as a flyswatter held aloft.

Inspired by these two natural phenomena found in flies and bats, the UCLA-led team set out to design a high-performance 3D camera system with advanced capabilities that leverage these advantages but also address nature's shortcomings.

"While the idea itself has been tried, seeing across a range of distances and around occlusions has been a major hurdle," said study leader Liang Gao, an associate professor of bioengineering at the UCLA Samueli School of Engineering. "To address that, we developed a novel computational imaging framework, which for the first time enables the acquisition of a wide and deep panoramic view with simple optics and a small array of sensors."

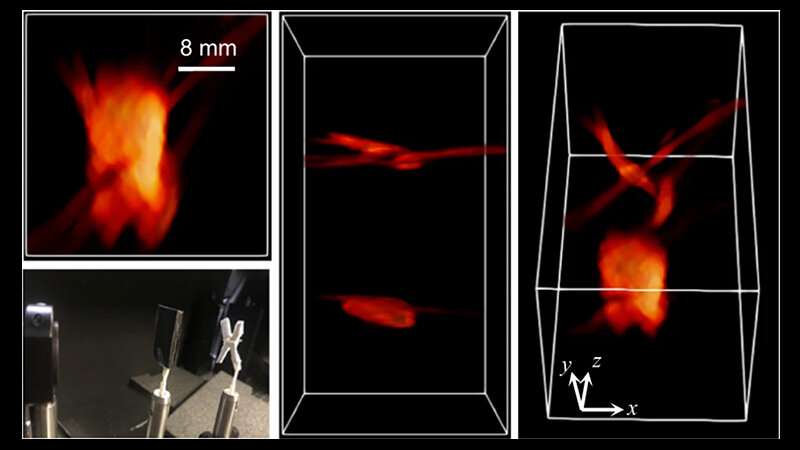

Called "Compact Light-field Photography," or CLIP, the framework allows the camera system to "see" with an extended depth range and around objects. In experiments, the researchers demonstrated that their system can "see" hidden objects that are not spotted by conventional 3D cameras.

The researchers also use a type of LiDAR, or "Light Detection And Ranging," in which a laser scans the surroundings to create a 3D map of the area.
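The ranging principle behind LiDAR, like the bat echolocation described earlier, is time of flight: a pulse travels out, reflects and returns, so the distance is the wave speed times the round-trip delay, divided by two. The short Python sketch below illustrates only that arithmetic. The function name and the example delays are hypothetical choices for illustration, not values or code from the study.

```python
# Illustrative time-of-flight ranging; names and example delays are assumptions.

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact, by definition of the meter
SPEED_OF_SOUND_M_PER_S = 343.0          # approximate, dry air at about 20 °C


def range_from_round_trip(delay_s: float, wave_speed_m_per_s: float) -> float:
    """Return the one-way distance in meters for a measured round-trip delay."""
    if delay_s < 0:
        raise ValueError("round-trip delay cannot be negative")
    # The pulse covers the distance twice (out and back), hence the factor of 2.
    return wave_speed_m_per_s * delay_s / 2.0


# A laser pulse that returns after 20 nanoseconds (hypothetical reading).
lidar_range_m = range_from_round_trip(20e-9, SPEED_OF_LIGHT_M_PER_S)

# A bat squeak whose echo returns after 10 milliseconds (hypothetical reading).
bat_range_m = range_from_round_trip(10e-3, SPEED_OF_SOUND_M_PER_S)

print(f"LiDAR target: {lidar_range_m:.2f} m, bat target: {bat_range_m:.2f} m")
```

Because light travels roughly a million times faster than sound, a light-based system has to resolve far shorter delays than a bat does to range objects at similar distances.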

Conventional LiDAR, without CLIP, would take a high-resolution snapshot of the scene but miss hidden objects, much like our human eyes would.

With CLIP, an array of seven LiDAR cameras takes a lower-resolution image of the scene, processes what each individual camera sees, then reconstructs the combined scene in high-resolution 3D. The researchers demonstrated that the camera system could image a complex 3D scene with several objects, all set at different distances.

"If you're covering one eye and looking at your laptop computer, and there's a coffee mug just slightly hidden behind it, you might not see it, because the laptop blocks the view," explained Gao, who is also a member of the California NanoSystems Institute. "But if you use both eyes, you'll notice you'll get a better view of the object. That's sort of what's happening here, but now imagine seeing the mug with an insect's compound eye. Now multiple views of it are possible."

According to Gao, CLIP helps the camera array make sense of what's hidden in a similar manner. Combined with LiDAR, the system is able to achieve the bat echolocation effect so one can sense a hidden object by how long it takes for light to bounce back to the camera.
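Gao's laptop-and-mug analogy can be sketched as a simple line-of-sight check in two dimensions. The Python example below is a toy geometric illustration only, not the CLIP reconstruction itself: the layout, the coordinates in centimeters and the function names are all hypothetical. It shows that a single central viewpoint loses the mug behind the laptop, while some viewpoints in a row of seven still have a clear line to it.

```python
# Toy 2D occlusion check; layout and names are assumptions, not from the study.

def _orient(a, b, c):
    """Cross product of (b - a) and (c - a); its sign gives the turn direction."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])


def segments_cross(p1, p2, q1, q2):
    """Return True if segment p1-p2 strictly crosses segment q1-q2."""
    d1 = _orient(q1, q2, p1)
    d2 = _orient(q1, q2, p2)
    d3 = _orient(p1, p2, q1)
    d4 = _orient(p1, p2, q2)
    return d1 * d2 < 0 and d3 * d4 < 0


def visible_from(camera, target, occluder):
    """A target is visible if the sight line does not cross the occluder."""
    return not segments_cross(camera, target, occluder[0], occluder[1])


# Hypothetical scene, in centimeters: the laptop is a flat screen seen from
# above, and the mug sits behind it, just past the right-hand edge.
laptop = ((-20, 100), (20, 100))
mug = (25, 130)

# Seven viewpoints in a row along y = 0, from x = -30 to x = 30.
cameras = [(x, 0) for x in range(-30, 31, 10)]
views = {cam: visible_from(cam, mug, laptop) for cam in cameras}

for cam, can_see in views.items():
    print(cam, "sees the mug" if can_see else "is blocked")
```

In this layout the central viewpoint at x = 0 is blocked, while the three viewpoints to its right see the mug, which is the intuition behind combining many views.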

The co-lead authors of the published research are UCLA bioengineering graduate student Yayao Ma, who is a member of Gao's Intelligent Optics Laboratory, and Xiaohua Feng, a former UCLA Samueli postdoc who worked in Gao's lab and is now a research scientist at the Research Center for Humanoid Sensing at the Zhejiang Laboratory in Hangzhou, China.

More information: Xiaohua Feng et al, Compact light field photography towards versatile three-dimensional vision, Nature Communications (2022). DOI: 10.1038/s41467-022-31087-9

Journal information: Nature Communications

Provided by University of California, Los Angeles