New measurement of universe's expansion rate is 'stuck in the middle'

A team of collaborators from Carnegie and the University of Chicago used red giant stars that were observed by the Hubble Space Telescope to make an entirely new measurement of how fast the universe is expanding, throwing their hats into the ring of a hotly contested debate. Their result—which falls squarely between the two previous, competing values—will be published in the Astrophysical Journal.

Nearly a century ago, Carnegie astronomer Edwin Hubble discovered that the universe has been growing continuously since it exploded into being during the Big Bang. But precisely how fast it is expanding, a value termed the Hubble constant in his honor, has remained stubbornly elusive.

The Hubble constant has helped scientists sketch out the universe's history and structure, and an accurate measurement of it could reveal flaws in the prevailing model of the universe.

"The Hubble constant is the cosmological parameter that sets the absolute scale, size, and age of the universe; it is one of the most direct ways we have of quantifying how the universe evolves," said lead author Wendy Freedman of the University of Chicago, who began this work at Carnegie.
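Freedman's link between the Hubble constant and the age of the universe can be made concrete with the Hubble time, 1/H0, the characteristic timescale the expansion rate sets. The sketch below converts H0 from kilometers per second per megaparsec into billions of years, using the three values of H0 that appear in this article. It is only a rough guide: the universe's actual age also depends on its matter and energy content, which this simple conversion ignores.

```python
# Convert a Hubble constant in km/s/Mpc into the Hubble time 1/H0 in billions of years.
# Rough guide only: the true age also depends on the universe's matter and energy content.
KM_PER_MPC = 3.0857e19        # kilometers in one megaparsec
SECONDS_PER_GYR = 3.15576e16  # seconds in one billion Julian years


def hubble_time_gyr(h0_km_s_mpc: float) -> float:
    """Return 1/H0 in Gyr. (km/s)/Mpc reduces to 1/s once Mpc is expressed in km."""
    h0_per_second = h0_km_s_mpc / KM_PER_MPC
    return 1.0 / h0_per_second / SECONDS_PER_GYR


# The three H0 values discussed in the article, in km/s/Mpc.
for h0 in (67.4, 69.8, 74.0):
    print(f"H0 = {h0:.1f} km/s/Mpc -> 1/H0 = {hubble_time_gyr(h0):.1f} Gyr")
```

A larger H0 means a faster expansion and therefore a shorter characteristic timescale, which is why the competing values imply noticeably different ages.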

Until now, there have been two primary tools used to measure the universe's rate of expansion. Unfortunately, their results don't agree, and the tension between the two numbers has persisted even as each side makes increasingly precise readings. It is possible, however, that the difference between the two values is due to systematic inaccuracies in one or both methods, which spurred the research team to develop their new technique.

One method, pioneered at Carnegie, uses stars called Cepheids, which pulsate at regular intervals. Because the period of their pulsations is known to be related to their intrinsic brightness, astronomers can use the period to infer how luminous a Cepheid truly is, then compare that with how bright it appears in order to measure its distance from Earth.
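The period-to-distance step can be sketched in two parts: a period-luminosity (Leavitt) relation turns the pulsation period into an absolute magnitude, and the distance modulus m - M = 5 log10(d / 10 pc) turns the gap between apparent and absolute magnitude into a distance. The coefficients below are illustrative placeholders of roughly the size used in published visible-band calibrations, not the values behind the 74.0 result, and the sketch ignores dust and other corrections.

```python
import math

# Illustrative Leavitt-law coefficients for M = A * (log10(P) - 1) + B.
# Placeholder values only; real analyses use carefully calibrated, band-specific ones.
A_SLOPE = -2.43
B_ZERO_POINT = -4.05


def cepheid_absolute_magnitude(period_days: float) -> float:
    """Intrinsic brightness (absolute magnitude) implied by the pulsation period.

    Magnitudes run backwards: a more negative value means a brighter star, so the
    negative slope makes longer-period Cepheids intrinsically brighter.
    """
    return A_SLOPE * (math.log10(period_days) - 1.0) + B_ZERO_POINT


def distance_parsecs(apparent_mag: float, absolute_mag: float) -> float:
    """Invert the distance modulus m - M = 5 log10(d / 10 pc); ignores dust extinction."""
    return 10.0 ** ((apparent_mag - absolute_mag + 5.0) / 5.0)


# A hypothetical 10-day Cepheid observed at apparent magnitude 25.95.
M = cepheid_absolute_magnitude(10.0)
d_pc = distance_parsecs(25.95, M)
print(f"M = {M:.2f}, distance = {d_pc / 1e6:.1f} Mpc")
```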

"Although two bells may well appear to be the same from afar, listening to their tones can reveal that one is actually much larger and more distant, and the other is smaller and closer," explained Carnegie's Barry Madore, one of the paper's co-authors. "Likewise, comparing how bright distant Cepheids appear to be against the brightness of nearby Cepheids enables us to determine how far away each of the stars' host galaxies is from Earth."
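Madore's comparison rests on the inverse-square law: two Cepheids with the same period share the same intrinsic brightness, so any difference in how bright they appear reflects a difference in distance. A minimal sketch, using made-up magnitudes and ignoring dust:

```python
def distance_ratio(mag_far: float, mag_near: float) -> float:
    """How many times farther the fainter star is, assuming equal intrinsic brightness.

    Flux falls off as 1/d**2, and a magnitude difference dm corresponds to a flux
    ratio of 10**(0.4 * dm), so the distance ratio is sqrt of that: 10**(dm / 5).
    """
    return 10.0 ** ((mag_far - mag_near) / 5.0)


# Hypothetical pair: two Cepheids with the same period, one 5 magnitudes fainter.
ratio = distance_ratio(mag_far=20.0, mag_near=15.0)
print(f"The fainter Cepheid is about {ratio:.0f} times farther away")
```

Five magnitudes correspond to a factor of 100 in flux, and hence a factor of 10 in distance; once the nearby star's distance is known, the distant galaxy's distance follows.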

When a celestial object's distance is known, a measurement of the speed at which it is moving away from us reveals the universe's rate of expansion. The ratio of these two figures—the velocity divided by the distance—is the Hubble constant.
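Hubble's law, v = H0 × d, turns a set of measured distances and recession velocities into a single number. The sketch below fits the slope of a line through the origin by least squares; the galaxy data are invented, and real analyses also weight each point by its uncertainty and correct for galaxies' own motions.

```python
def fit_hubble_constant(distances_mpc, velocities_km_s):
    """Least-squares slope of v = H0 * d for a line forced through the origin.

    Minimizing sum((v - H0 * d)**2) over H0 gives H0 = sum(v * d) / sum(d**2).
    """
    if len(distances_mpc) != len(velocities_km_s) or not distances_mpc:
        raise ValueError("need matching, non-empty distance and velocity lists")
    num = sum(v * d for d, v in zip(distances_mpc, velocities_km_s))
    den = sum(d * d for d in distances_mpc)
    return num / den


# Hypothetical galaxies: distances in Mpc, recession velocities in km/s.
distances = [20.0, 50.0, 100.0, 150.0]
velocities = [1450.0, 3480.0, 7010.0, 10480.0]
print(f"H0 = {fit_hubble_constant(distances, velocities):.1f} km/s/Mpc")
```

Fitting many galaxies at once, rather than dividing a single velocity by a single distance, averages down the scatter in individual measurements.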

The second method uses the afterglow left over from the Big Bang. Called the cosmic microwave background radiation, it is the oldest light we can see. Patterns of compression in the thick, soupy plasma of which the baby universe was composed can still be seen and mapped as slight temperature variations. These ripples, a record of the universe's infancy, can be run forward in time through a model of cosmic evolution and used to predict the present-day Hubble constant.

The former technique says the expansion rate of the universe is 74.0 kilometers per second per megaparsec; the latter says it's 67.4. If it's real, the discrepancy could herald new physics.

Enter the third option.

The Carnegie-Chicago Hubble Program, led by Freedman and including Carnegie astronomers Madore, Christopher Burns, Mark Phillips, Jeff Rich, and Mark Seibert—as well as Carnegie-Princeton fellow Rachael Beaton—developed a new way to calculate the Hubble constant.

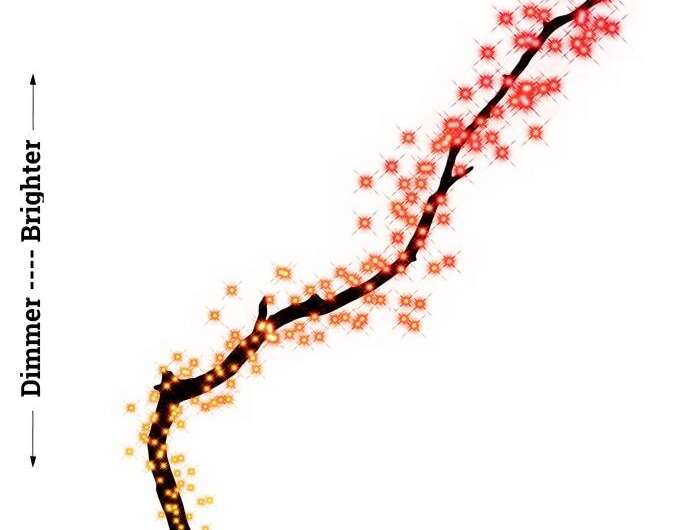

Their technique is based on a very luminous class of stars called red giants. At a certain point in their lifecycles, the helium in these stars is ignited, and their structures are rearranged by this new source of energy in their cores.

"Just as the cry of a loon is instantly recognizable among bird calls, the peak brightness of a red giant in this state is easily differentiated," Madore explained. "This makes them excellent standard candles."

The team made use of the Hubble Space Telescope's sensitive cameras to search for red giants in nearby galaxies.

"Think of it as scanning a crowd to identify the tallest person—that's like the brightest red giant experiencing a helium flash," said Burns. "If you lived in a world where you knew that the tallest person in any room would be that exact same height—as we assume that the brightest red giant's peak brightness is the same—you could use that information to tell you how far away the tallest person is from you in any given crowd."
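Burns's "tallest person in the crowd" shows up in the data as a sharp edge in a galaxy's luminosity function: going from bright to faint, star counts jump suddenly at the magnitude of the brightest red giants. Tip-of-the-red-giant-branch studies locate that edge with an edge-detection filter; the sketch below uses the crudest version, the largest jump between adjacent histogram bins, on synthetic magnitudes. The tip's absolute magnitude, M_TIP_ASSUMED = -4.0, is an assumed round value for illustration, not the team's calibration, and the code ignores dust, photometric errors, and smoothing.

```python
import numpy as np

# Assumed absolute magnitude of the red giant branch tip (illustrative round value).
M_TIP_ASSUMED = -4.0


def find_tip_magnitude(mags, bin_width=0.1):
    """Return the magnitude where star counts jump most sharply going fainter.

    Bins the apparent magnitudes into a luminosity function and picks the bin
    boundary with the largest count increase: a crude edge detector.
    """
    mags = np.asarray(mags, dtype=float)
    lo, hi = mags.min(), mags.max()
    n_bins = int(np.ceil((hi - lo) / bin_width)) + 1
    counts, edges = np.histogram(mags, bins=n_bins, range=(lo, lo + n_bins * bin_width))
    jumps = np.diff(counts)  # jumps[k] = counts[k + 1] - counts[k]
    k = int(np.argmax(jumps))
    return float(edges[k + 1])  # boundary where the jump begins


def tip_distance_mpc(tip_apparent_mag, tip_absolute_mag=M_TIP_ASSUMED):
    """Distance from the distance modulus m - M = 5 log10(d / 10 pc), in Mpc."""
    mu = tip_apparent_mag - tip_absolute_mag
    return 10.0 ** (mu / 5.0 + 1.0) / 1e6


# Synthetic galaxy: a few scattered bright stars, then a dense red giant branch from 24.0.
bright = [23.0, 23.3, 23.6, 23.9]
giants = np.linspace(24.0, 26.0, 401)
tip = find_tip_magnitude(np.concatenate([bright, giants]))
print(f"tip at m = {tip:.2f}, distance = {tip_distance_mpc(tip):.1f} Mpc")
```

The bin width trades precision against noise: narrower bins pin down the edge more finely but contain fewer stars, which is one reason published analyses smooth the luminosity function before searching for the edge.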

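Once the distances to these newly found red giants are known, they are used to calibrate another standard candle, type Ia supernovae, in the same galaxies. Because these supernovae can be seen much farther away, they extend the measurement out into the more-distant Hubble flow, reducing the uncertainty caused by the red giants' relative proximity to us (nearby galaxies' own motions can distort their apparent recession speeds). From there, the Hubble constant can be calculated.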
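The two-rung ladder described above can be sketched in a few lines: red giant distances to nearby host galaxies fix the supernovae's intrinsic peak brightness, and that brightness then turns distant supernovae into distances for Hubble's law. All magnitudes and velocities are invented for illustration, and real analyses also standardize each supernova using the shape and color of its light curve, which this sketch omits.

```python
import statistics

# Hypothetical host galaxies with both a red-giant distance modulus and a
# type Ia supernova peak apparent magnitude: (mu_trgb, m_peak). Invented numbers.
calibrators = [
    (30.0, 10.7),
    (31.0, 11.7),
    (31.5, 12.2),
]

# Hypothetical distant supernovae in the Hubble flow: (m_peak, velocity in km/s).
# Chosen to sit at about 100 Mpc and 200 Mpc.
hubble_flow = [
    (15.7, 7000.0),
    (17.20515, 14000.0),
]


def calibrate_sn_absolute_mag(pairs):
    """Rung 1: M_SN = m_peak - mu_TRGB, averaged over the calibrator galaxies."""
    return statistics.mean(m - mu for mu, m in pairs)


def distance_mpc(m, M):
    """Distance from the distance modulus m - M = 5 log10(d / 10 pc), in Mpc."""
    return 10.0 ** ((m - M) / 5.0 + 1.0) / 1e6


def hubble_constant(flow, M_sn):
    """Rung 2: H0 = v / d for each distant supernova, averaged."""
    return statistics.mean(v / distance_mpc(m, M_sn) for m, v in flow)


M_sn = calibrate_sn_absolute_mag(calibrators)
print(f"M_SN = {M_sn:.2f}, H0 = {hubble_constant(hubble_flow, M_sn):.1f} km/s/Mpc")
```

Any error in the red giant calibration shifts M_SN, and therefore every supernova distance, by the same amount, which is why an independent first rung matters for the final value.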

According to the red giant method, the universe's expansion rate is 69.8 kilometers per second per megaparsec, falling provocatively between the two previously determined numbers.
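How far "between" can be quantified directly from the three central values quoted in this article. This is plain arithmetic; whether any of the differences is significant depends on the measurements' uncertainties, which are not considered here.

```python
# The three Hubble-constant values discussed in the article, in km/s/Mpc.
H0_CMB = 67.4      # predicted from the cosmic microwave background
H0_CEPHEID = 74.0  # Cepheid-based measurement
H0_TRGB = 69.8     # red giant (tip of the red giant branch) result

gap = H0_CEPHEID - H0_CMB
fraction_of_gap = (H0_TRGB - H0_CMB) / gap

print(f"Cepheid vs CMB gap: {gap:.1f} km/s/Mpc ({100 * gap / H0_CMB:.1f}% of the CMB value)")
print(f"Red giant value sits {100 * fraction_of_gap:.0f}% of the way from the CMB value to the Cepheid value")
```

On these central values the red giant result lies somewhat closer to the microwave background prediction than to the Cepheid measurement, though still clearly between them.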

"We're like that old song, 'Stuck in the Middle with You,'" joked Madore. "Is there a crisis in cosmology? We'd hoped to be a tiebreaker, but for now the answer is: not so fast. The question of whether the standard model of the universe is complete or not remains to be answered."

More information: The Carnegie-Chicago Hubble Program. VIII. An Independent Determination of the Hubble Constant Based on the Tip of the Red Giant Branch. arxiv.org/abs/1907.05922

Journal information: Astrophysical Journal

Provided by Carnegie Institution for Science