Supercomputing more light than heat

Solar cells can't stand the heat. Photovoltaics lose some energy as heat in converting sunlight to electricity. The reverse holds true for lights made with light-emitting diodes (LEDs), which convert electricity into light. Some scientists think there might be light at the end of the tunnel in the hunt for better semiconductor materials for solar cells and LEDs, thanks to supercomputer simulations that used graphics processing units to model nanocrystals of silicon.

Scientists call the heat loss in LEDs and solar cells non-radiative recombination. And they've struggled to understand the basic physics of this heat loss, especially for materials with molecules of over 20 atoms.

"The real challenge here is system size," explained Ben Levine, associate professor in the Department of Chemistry at Michigan State University. "Going from that 10-20 atom limit up to 50-100-200 atoms has been the real computation challenge here," Levine said. That's because the calculations involved scale with the size of the system to some power, sometimes four or up to six, Levine said. "Making the system ten times bigger actually requires us to perform maybe 10,000 times more operations. It's really a big change in the size of our calculations."

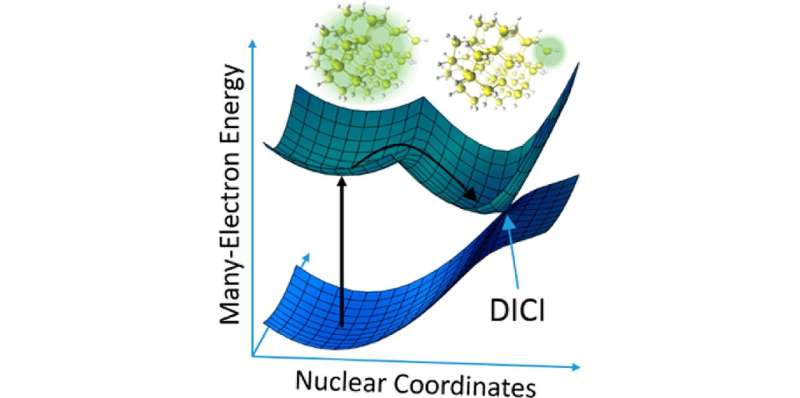

Levine's calculations involve a concept in molecular photochemistry called a conical intersection: a point of degeneracy between the potential energy surfaces of two or more electronic states in a closed system. A perspective study published in September 2017 in the Journal of Physical Chemistry Letters found that recent computational and theoretical developments have enabled the location of defect-induced conical intersections in semiconductor nanomaterials.
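
The idea of a degeneracy point can be illustrated with a toy two-state model. This is a textbook-style sketch, not the electronic structure method used in the study. The coordinates `x` and `y`, the coupling constants `a` and `b`, and the function names are all hypothetical choices made for illustration.

```python
import math


def surfaces(x: float, y: float, a: float = 1.0, b: float = 1.0) -> tuple[float, float]:
    """Energies of the lower and upper states of a toy two-state model.

    The 2x2 Hamiltonian is [[a*x, b*y], [b*y, -a*x]], whose eigenvalues are
    -r and +r with r = sqrt((a*x)**2 + (b*y)**2).
    """
    r = math.hypot(a * x, b * y)
    return -r, r


def gap(x: float, y: float) -> float:
    """Energy gap between the two surfaces at coordinates (x, y)."""
    lower, upper = surfaces(x, y)
    return upper - lower


if __name__ == "__main__":
    for point in [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (0.3, 0.4)]:
        print(point, "gap =", round(gap(*point), 3))
```

In this model the two surfaces touch only at the origin, where the gap is zero, and separate linearly in every direction away from it. That double-cone shape is what gives the conical intersection its name.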

"The key contribution of our work has been to show that we can understand these recombination processes in materials by looking at these conical intersections," Levine said. "We've been able to show is that the conical intersections can be associated with specific structural defects in the material."

The holy grail for materials science would be to predict the non-radiative recombination behavior of a material based on its structural defects. These defects come from 'doping' semiconductors with impurities to control and modulate their electrical properties.

Looking beyond the ubiquitous silicon semiconductor, scientists are turning to silicon nanocrystals as candidate materials for the next generation of solar cells and LEDs. Silicon nanocrystals are molecular systems in the ballpark of 100 atoms with extremely tunable light emission compared to bulk silicon. And scientists are limited only by their imagination in the ways they can dope and create new kinds of silicon nanocrystals.

"We've been doing this for about five years now," Levine explained about his conical intersection work. "The main focus of our work has been proof-of concept, showing that these are calculations that we can do; that what we find is in good agreement with experiment; and that it can give us insight into experiments that we couldn't get before," Levine said.

Levine addressed the computational challenges of his work using graphics processing unit (GPU) hardware, the kind typically designed for computer games and graphics design. GPUs excel at churning through linear algebra calculations, the same math involved in Levine's calculations that characterize the behavior of electrons in a material. "Using the graphics processing units, we've been able to accelerate our calculations by hundreds of times, which has allowed us to go from the molecular scale, where we were limited before, up to the nano-material size," Levine said.

Cyberinfrastructure allocations from XSEDE, the eXtreme Science and Engineering Discovery Environment, gave Levine access to over 975,000 compute hours on the Maverick supercomputing system at the Texas Advanced Computing Center (TACC). Maverick is a dedicated visualization and data analysis resource architected with 132 NVIDIA Tesla K40 "Atlas" GPUs to provide remote visualization and GPU computing to the national community.

"Large-scale resources like Maverick at TACC, which have lots of GPUs, have been just wonderful for us," Levine said. "You need three things to be able to pull this off. You need good theories. You need good computer hardware. And you need facilities that have that hardware in sufficient quantity, so that you can do the calculations that you want to do."

Levine explained that he got started using GPUs to do science ten years ago, when he was in graduate school, chaining together SONY PlayStation 2 video game consoles to perform quantum chemical calculations. "Now, the field has exploded, where you can do lots and lots of really advanced quantum mechanical calculations using these GPUs," Levine said. "NVIDIA has been very supportive of this. They've released technology that helps us do this sort of thing better than we could do it before." That's because NVIDIA developed GPUs that pass data more easily, along with the popular and well-documented CUDA interface.

"A machine like Maverick is particularly useful because it brings a lot of these GPUs into one place," Levine explained. "We can sit down and look at 100 different materials or at a hundred different structures of the same material." We're able to do that using a machine such as Maverick. Whereas with a desktop gaming machine just has one GPU, we can do one calculation at a time. The large-scale studies aren't possible," said Levine.

Now that Levine's group has demonstrated the ability to predict conical intersections associated with heat loss from semiconductors and semiconductor nanomaterials, he said the next step is to do materials design in the computer.

Said Levine: "We've been running some calculations where we use a simulated evolution, called a genetic algorithm, where you simulate the evolution process. We're actually evolving materials that have the property that we're looking for, one generation after the other. Maybe we have a pool of 20 different molecules. We predict the properties of those molecules. Then we randomly pick, say, less than ten of them that have desirable properties. And we modify them in some way. We mutate them. Or in some chemical sense 'breed' them with one another to create new molecules, and test those. This all happens automatically in the computer. A lot of this is done on Maverick also. We end up with a new molecule that nobody has ever looked at before, but that we think they should look at in the lab. This automated design process has already started."
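
The loop Levine describes can be sketched in a few lines of Python. This is only an illustration under loud assumptions, not the group's actual code: candidates are encoded as hypothetical lists of on/off features, `predicted_property` is a placeholder standing in for an expensive quantum chemistry calculation, and carrying the best candidate forward unchanged is a design choice added here, not something stated in the article.

```python
import random

POOL_SIZE = 20      # "a pool of 20 different molecules"
N_PARENTS = 8       # "less than ten" survivors per generation
GENOME_LENGTH = 12  # hypothetical: number of on/off structural features


def predicted_property(candidate: list[int]) -> int:
    """Placeholder score; a real study would run a quantum chemistry calculation."""
    return sum(candidate)


def mutate(candidate: list[int], rng: random.Random) -> list[int]:
    """Flip one randomly chosen feature."""
    child = candidate[:]
    i = rng.randrange(len(child))
    child[i] = 1 - child[i]
    return child


def breed(a: list[int], b: list[int], rng: random.Random) -> list[int]:
    """Single-point crossover: head of one parent, tail of the other."""
    cut = rng.randrange(1, len(a))
    return a[:cut] + b[cut:]


def evolve(generations: int = 30, seed: int = 0) -> list[int]:
    rng = random.Random(seed)
    pool = [[rng.randint(0, 1) for _ in range(GENOME_LENGTH)] for _ in range(POOL_SIZE)]
    for _ in range(generations):
        # Rank by predicted property, then pick parents at random from the better half.
        ranked = sorted(pool, key=predicted_property, reverse=True)
        parents = rng.sample(ranked[: POOL_SIZE // 2], N_PARENTS)
        # Assumption: keep the current best unchanged so the top score never gets worse.
        next_pool = [ranked[0]]
        while len(next_pool) < POOL_SIZE:
            mother, father = rng.sample(parents, 2)
            next_pool.append(mutate(breed(mother, father, rng), rng))
        pool = next_pool
    return max(pool, key=predicted_property)


if __name__ == "__main__":
    best = evolve()
    print("best candidate:", best, "score:", predicted_property(best))
```

Because each candidate is scored independently, the scoring step is the part that can be spread across many GPUs at once, which is where a machine with many GPUs would help.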

The study, "Understanding Nonradiative Recombination through Defect-Induced Conical Intersections," was published on September 7, 2017, in the Journal of Physical Chemistry Letters. The study authors are Yinan Shu (University of Minnesota); B. Scott Fales (Stanford University, SLAC); and Wei-Tao Peng and Benjamin G. Levine (Michigan State University). The National Science Foundation funded the study (CHE-1565634).

More information: Yinan Shu et al, Understanding Nonradiative Recombination through Defect-Induced Conical Intersections, The Journal of Physical Chemistry Letters (2017). DOI: 10.1021/acs.jpclett.7b01707

Journal information: Journal of Physical Chemistry Letters

Provided by Texas Advanced Computing Center