Making AI systems that see the world as humans do

A Northwestern University team developed a new computational model that performs at human levels on a standard intelligence test. This work is an important step toward making artificial intelligence systems that see and understand the world as humans do.

"The model performs in the 75th percentile for American adults, making it better than average," said Northwestern Engineering's Ken Forbus. "The problems that are hard for people are also hard for the model, providing additional evidence that its operation is capturing some important properties of human cognition."

The new computational model is built on CogSketch, an artificial intelligence platform previously developed in Forbus' laboratory. The platform can solve visual problems and understand sketches, which lets it give immediate, interactive feedback. CogSketch also incorporates a computational model of analogy, based on Northwestern psychology professor Dedre Gentner's structure-mapping theory. (Gentner received the 2016 David E. Rumelhart Prize for her work on this theory.)

Forbus, Walter P. Murphy Professor of Electrical Engineering and Computer Science at Northwestern's McCormick School of Engineering, developed the model with Andrew Lovett, a former Northwestern postdoctoral researcher in psychology. Their research was published online this month in the journal Psychological Review.

The ability to solve complex visual problems is one of the hallmarks of human intelligence. Developing artificial intelligence systems with this ability not only provides new evidence for the importance of symbolic representations and analogy in visual reasoning, but could also shrink the gap between computer and human cognition.

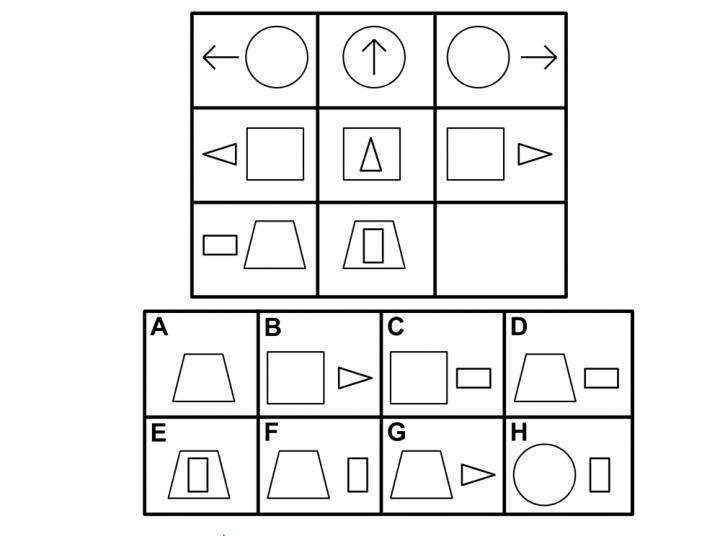

While Forbus and Lovett's system can be used to model general visual problem-solving phenomena, they specifically tested it on Raven's Progressive Matrices, a nonverbal standardized test that measures abstract reasoning. All of the test's problems consist of a matrix with one image missing. The test taker is given six to eight choices with which to best complete the matrix. Forbus and Lovett's computational model performed better than the average American.

"The Raven's test is the best existing predictor of what psychologists call 'fluid intelligence,' or the general ability to think abstractly, reason, identify patterns, solve problems, and discern relationships," said Lovett, now a researcher at the US Naval Research Laboratory. "Our results suggest that the ability to flexibly use relational representations, comparing and reinterpreting them, is important for fluid intelligence."

The ability to use and understand sophisticated relational representations is a key to higher-order cognition. Relational representations connect entities and ideas, as in "the clock is above the door" or "pressure differences cause water to flow." Representations of this kind are crucial for making and understanding analogies, which humans use to solve problems, weigh moral dilemmas, and describe the world around them.
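For readers who want to see what a relational representation might look like in practice, here is a minimal Python sketch. It is an illustration only, not the CogSketch model or its analogy component: the `Relation` type, the `align` function, and the heat-flow example are all hypothetical names and data introduced here. The sketch writes statements like the two quoted above as predicates over entities, then checks whether two statements share the same relational structure while differing in the entities involved.

```python
from typing import NamedTuple


class Relation(NamedTuple):
    """A predicate applied to arguments (entity names or nested relations)."""
    predicate: str
    args: tuple


def align(a, b, mapping=None):
    """Toy structural alignment (hypothetical helper, not a real library call).

    Returns a one-to-one entity mapping if `a` and `b` use the same
    predicates in the same positions, otherwise None.
    """
    mapping = {} if mapping is None else mapping
    if isinstance(a, Relation) and isinstance(b, Relation):
        if a.predicate != b.predicate or len(a.args) != len(b.args):
            return None
        for x, y in zip(a.args, b.args):
            if align(x, y, mapping) is None:
                return None
        return mapping
    if isinstance(a, Relation) or isinstance(b, Relation):
        return None  # a relation cannot correspond to a bare entity
    if mapping.get(a, b) != b:
        return None  # entity `a` is already paired with something else
    if b in mapping.values() and mapping.get(a) != b:
        return None  # entity `b` is already paired with something else
    mapping[a] = b
    return mapping


# "The clock is above the door."
above = Relation("above", ("clock", "door"))

# "Pressure differences cause water to flow."
water = Relation("cause", (
    Relation("difference", ("pressure_high", "pressure_low")),
    Relation("flow", ("water",)),
))

# An assumed analogous statement: temperature differences cause heat to flow.
heat = Relation("cause", (
    Relation("difference", ("temp_hot", "temp_cold")),
    Relation("flow", ("heat",)),
))

water_to_heat = align(water, heat)   # same relations, different entities
clock_to_water = align(above, water)  # different relations, so no match
```

Here `water_to_heat` pairs each entity in the water statement with its counterpart in the heat statement, while `clock_to_water` is `None` because the two statements share no relational structure. A real analogy model does far more than this, such as scoring partial matches and reinterpreting representations.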

"Most artificial intelligence research today concerning vision focuses on recognition, or labeling what is in a scene rather than reasoning about it," Forbus said. "But recognition is only useful if it supports subsequent reasoning. Our research provides an important step toward understanding visual reasoning more broadly."

More information: Andrew Lovett et al. Modeling visual problem solving as analogical reasoning, Psychological Review (2017). DOI: 10.1037/rev0000039

Journal information: Psychological Review

Provided by Northwestern University