Improving the experience of the audience with digital instruments

Researchers have developed a new augmented reality display that allows the audience to explore 3D augmentations of digital musical performances in order to improve their understanding of electronic musicians' engagement.

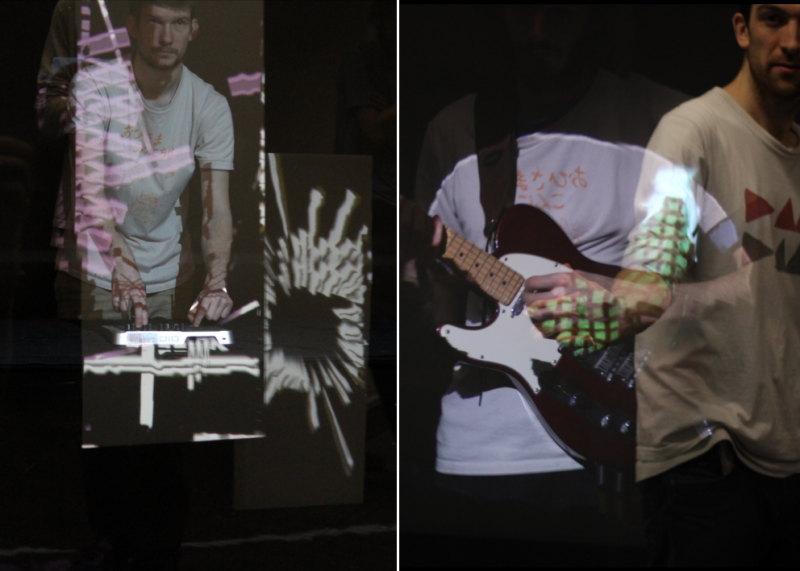

The diversity of digital musical instruments keeps increasing, especially with the emergence of software and hardware that musicians can modify. While this diversity creates novel artistic possibilities, it also makes it more difficult for the audience to appreciate what the musicians are doing during performances. Unlike acoustic instruments, digital instruments can be used to play virtually any sound, some controlled in complex ways by the musicians' gestures and others programmed and automated. The audience's perception of the musicians' engagement is therefore impaired, which can spoil some spectators' own engagement with the performance.

A research team from the University of Bristol's Bristol Interaction and Graphics (BIG) group has been investigating how to improve the audience's experience during performances with digital musical instruments. Funded by a Marie Curie grant, the IXMI project, led by Dr Florent Berthaut, aims to show the mechanisms of instruments using 3D virtual content and mixed-reality displays.

The original prototype, called Rouages, was presented in 2013, and since then the team has been investigating two main areas: mixed-reality display technologies adapted to musical performances, and visual augmentations to improve the perception of musical gestures. The research will be presented at the 15th International Conference on New Interfaces for Musical Expression (NIME) in the USA (31 May to 3 June).

Their first creation, Reflets, is a mixed-reality environment that allows virtual content to be displayed anywhere on stage, even overlapping the instruments or the performers. It does not require the audience to wear glasses or to use their smartphones to see the augmentations, which remain consistent at all positions in the audience.

Developed by Dr Berthaut, Dr Diego Martinez and Professor Sriram Subramanian, in collaboration with Dr Martin Hachet at INRIA Bordeaux, Reflets relies on combining the audience and stage spaces using reflective transparent surfaces, and on having the audience and performers reveal the virtual content by intersecting it with their bodies or physical props. Reflets was developed in collaboration with local Bristol performers, not only musicians but also dancers and actors.

Dr Berthaut, PhD student Hannah Limerick and Dr David Coyle have also been investigating the mind's influence on behaviour to improve the perception of musicians' impact on the music, using visual representations to link gestures and sounds.

The team hopes that the results from the IXMI project will benefit both audiences and musicians by showing how musicians can engage during performances. In the future, the team plans to organise workshops and demonstrate the new technology at public events.

More information: 'Liveness through the lens of agency and causality' by Dr Berthaut, Hannah Limerick and Dr David Coyle, presented at the 15th International Conference on New Interfaces for Musical Expression (NIME).

Provided by University of Bristol