December 12, 2014 report

Assessing scientific research by 'citation wake' detects Nobel laureates' papers

(Phys.org)—Ranking scientific papers in order of importance is an inherently subjective task, yet that doesn't keep researchers from trying to develop quantitative assessments. In a new paper, scientists have proposed a new measure of assessment that is based on the "citation wake" of a paper, which encompasses the direct citations and weighted indirect citations received by the paper. The new method attempts to focus on the propagation of ideas rather than credit distribution, and succeeds by at least one significant measure: a large fraction (72%) of its top-ranked papers are coauthored by Nobel Prize laureates.

Ph.D. student David F. Klosik and Dr. Stefan Bornholdt at the University of Bremen have published their paper on the citation wake measure of publications in a recent issue of PLOS ONE.

As Klosik and Bornholdt explain, scientists' practice of citing the work that influenced them in the reference list of their own publications offers a wealth of data on the structure and progress of science. The difficulty lies in interpreting the data, which is often a controversial process.

The first paper citation network was developed in the 1960s, and early analysis was based almost exclusively on counting a paper's number of direct citations. This method has formed the basis of several newer quantitative methods of assessment, such as the h-index, which attempts to measure the impact of individual researchers, and the Thomson Scientific Journal Impact Factor, which ranks the relative importance of journals.

However, it is well known that measures based on citation count have several shortcomings. For one thing, a paper's ranking strongly depends on the citation habits and size of the paper's field. Further, newer papers have fewer citations simply because they have not been around long enough to receive as many citations as older papers. On the other hand, the citation count may underestimate the impact of very old yet groundbreaking publications, since once seminal results become textbook knowledge, the original papers are often no longer cited.

More recently, newer methods (such as CiteRank, SARA, and Eigenfactor) have addressed some of these drawbacks by accounting for factors other than direct citations. While they have made improvements, these methods generally view the citation network primarily as one of credit diffusion.

Klosik and Bornholdt's new measure differs in that it views the citation network as a picture of idea propagation, in which the ideas within a paper influence future research far beyond the citations the paper receives directly.

"Our wake citation score is less sensitive to the size of the research community of a paper than other existing measures, as we do not focus on the direct citation count of a paper," Bornholdt told Phys.org. "What makes our wake citation score unique is our focus on whether a paper 'started something,' by estimating its 'word of mouth dynamics' from the subsequent citation network."

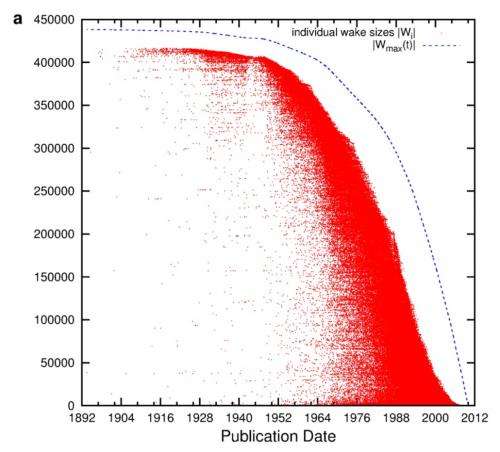

In their study, the researchers analyzed all papers in the Physical Review database, dating back more than a century. In their method, each paper receives a wake citation score. A paper's wake consists of all papers that have cited it, either directly or indirectly. Since a paper can receive citations only from papers published at a later date, these papers form a "wake" of that paper as viewed on a graph.

All papers in a paper's wake are then assigned to neighborhood layers according to the length of the shortest path to the paper (similar to the concept of degrees of separation). In terms of idea propagation, the shortest path can also be viewed as the minimal number of processing steps of an idea.
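The layer assignment described above can be sketched as a breadth-first search over the citation network. This is only an illustration, not the authors' code: the function name `wake_layers`, the `cited_by` dictionary format, and the toy network are all assumptions made for the example.

```python
from collections import deque


def wake_layers(cited_by, source):
    """Group every paper in the wake of `source` by shortest-path distance.

    `cited_by` maps a paper id to the list of papers that cite it directly
    (a hypothetical input format). The result maps a distance to the set of
    papers at that distance, where distance 1 means a direct citation.
    """
    distance = {source: 0}
    queue = deque([source])
    layers = {}
    while queue:
        paper = queue.popleft()
        for citing in cited_by.get(paper, []):
            if citing not in distance:  # first visit gives the shortest path
                distance[citing] = distance[paper] + 1
                layers.setdefault(distance[citing], set()).add(citing)
                queue.append(citing)
    return layers


# Hypothetical toy network: B, C and E cite A; D cites B and C; E also cites D.
cited_by = {
    "A": ["B", "C", "E"],
    "B": ["D"],
    "C": ["D"],
    "D": ["E"],
}
layers = wake_layers(cited_by, "A")
```

In this toy network, E cites A both directly and through D, but it is placed in the first layer because only the shortest path counts.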

Finally, the paper's wake citation score is computed as a weighted sum of the total number of papers in each layer. A detrending factor accounts for the fact that, the earlier a paper is published, the more papers there are in the future that could potentially cite it. A dilution factor can also be applied to restrict the number of layers considered, from only direct citations to the full wake of citations.
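As a rough sketch of how such a weighted sum might look, the example below makes two assumptions that the description above does not spell out: the weight of layer k is taken to be `alpha ** (k - 1)`, and detrending is done by dividing by the number of papers published later. The names `wake_score`, `alpha`, and `later_papers` are hypothetical, and the exact formula in the paper may differ.

```python
def wake_score(layer_sizes, alpha, later_papers):
    """Weighted sum of layer sizes, divided by a detrending count.

    layer_sizes[0] is the number of direct citations, layer_sizes[1] the
    number of papers two steps away, and so on.
    ASSUMPTION: geometric weights alpha ** (k - 1) stand in for the dilution
    factor, and `later_papers` (papers published after the scored paper)
    stands in for the detrending factor.
    """
    weighted = sum(
        alpha ** (k - 1) * size
        for k, size in enumerate(layer_sizes, start=1)
    )
    return weighted / later_papers


# Hypothetical wake with 3 direct citations and 1 paper in the second layer,
# scored against 4 papers published later.
direct_only = wake_score([3, 1], alpha=0, later_papers=4)
diluted = wake_score([3, 1], alpha=0.5, later_papers=4)
full_wake = wake_score([3, 1], alpha=1, later_papers=4)
```

Under these assumptions, `alpha=0` keeps only the direct citations and `alpha=1` counts every paper in the wake equally, which mirrors the range the dilution factor is said to cover.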

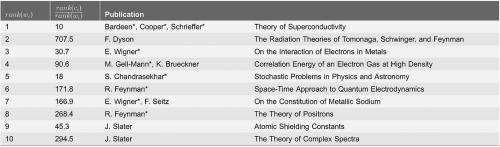

The resulting wake citation scores yield a ranking of papers that is very different from a list of papers ranked by number of citations. As the results show, 9 out of the top 10 papers ranked by wake citation score are only moderately cited (the exception is the #1 ranked paper, "Theory of Superconductivity" by Bardeen, Cooper, and Schrieffer). The other papers show a very high ratio between the direct-citation-rank and the wake-citation-rank. For example, the paper ranked #2 according to wake citation score ("The Radiation Theories of Tomonaga, Schwinger, and Feynman" by F. Dyson) has a ratio of 707.5, indicating a direct-citation-rank of merely 1,415. Among the top 100 papers ranked by wake citation score, 86 show a ratio higher than 10.
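The rank ratio quoted for Dyson's paper can be checked with a line of arithmetic. The helper name `rank_ratio` is made up for this example; the two ranks are the ones given above.

```python
def rank_ratio(direct_rank, wake_rank):
    """Ratio of direct-citation rank to wake-citation rank.

    A large value means the paper ranks far higher by wake citation score
    than by direct citation count.
    """
    return direct_rank / wake_rank


# Dyson's paper: rank 1,415 by direct citations, rank 2 by wake citation score.
dyson_ratio = rank_ratio(1415, 2)
```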

As for which ranking method is "better," there is of course no objective measure of importance; otherwise, that would be the only measure needed. But given the widely accepted scientific quality of Nobel Prize research, Klosik and Bornholdt checked how many of their top-ranked papers were coauthored by Nobel Prize laureates. They found that 18 of the top 25 and more than half of the top 100 papers have contributions from a Nobel Prize laureate. In contrast, the ranking by direct citation count yields Nobel author contributions in just 4 of the top 25 and 25 of the top 100 papers. (Overall, the ranking by direct citation in the Physical Review database is dominated by papers on density-functional theory.)
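To put those counts side by side, they can be converted to percentages. The snippet uses only the figures quoted above; the helper name `laureate_share` is an assumption for the example, and the top-100 wake figure is left out because only "more than half" is reported.

```python
def laureate_share(hits, top_n):
    """Percentage of the top_n papers that have a Nobel laureate coauthor."""
    return 100 * hits / top_n


wake_top25 = laureate_share(18, 25)      # wake citation ranking, top 25
direct_top25 = laureate_share(4, 25)     # direct citation ranking, top 25
direct_top100 = laureate_share(25, 100)  # direct citation ranking, top 100
```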

Besides comparing to the direct citation ranking, the researchers also compared the wake citation ranking to one of the more elaborate ranking measures, Google's PageRank algorithm. They found that the top of the PageRank ranking contains more papers with Nobel laureate coauthors than the top of the direct citation ranking, but fewer than the top of the wake citation ranking. One of the biggest differences between PageRank and wake citation is that PageRank counts weighted paths (the connections between papers) while wake citation counts weighted nodes (the papers themselves).
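The difference between counting paths and counting nodes can be seen on a small example. The sketch below is neither PageRank nor the wake citation score; it only contrasts the two ways of counting, and the function name, the `cited_by` format, and the toy network are assumptions.

```python
def count_paths_and_nodes(cited_by, source, length):
    """Return (number of citation paths of `length` steps, distinct end papers).

    `cited_by` maps a paper id to the list of papers citing it directly
    (a hypothetical input format).
    """
    frontier = [source]  # one entry per path, so repeated papers are kept
    for _ in range(length):
        frontier = [
            citing
            for paper in frontier
            for citing in cited_by.get(paper, [])
        ]
    return len(frontier), len(set(frontier))


# Hypothetical diamond: B and C cite A, and D cites both B and C.
cited_by = {"A": ["B", "C"], "B": ["D"], "C": ["D"]}
paths, nodes = count_paths_and_nodes(cited_by, "A", 2)
```

Here D is reached from A along two separate paths, so a path-based count sees two connections while a node-based count sees a single paper.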

While the wake citation method currently applies only to papers, Klosik and Bornholdt plan to extend the measure to scientists in the future.

"We are currently exploring the wake citation score as an impact measure for scientists," Bornholdt said. "This could provide a more balanced ranking of scientists from different fields."

More information: David F. Klosik and Stefan Bornholdt. "The Citation Wake of Publications Detects Nobel Laureates' Papers." PLOS ONE. DOI: 10.1371/journal.pone.0113184

Journal information: PLOS ONE

© 2014 Phys.org