Asimov's Three Laws of Robotics supplemented for 21st century care robots

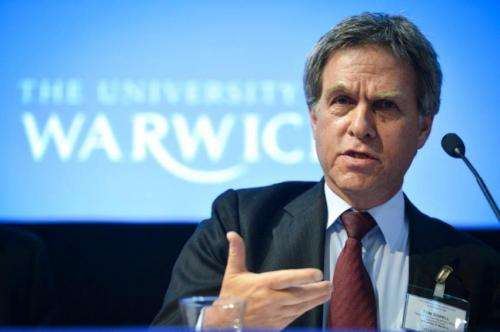

Isaac Asimov famously devised three laws of robotics that underpinned a number of his science fiction books and short stories. Professor Tom Sorell of the University of Warwick has now helped develop a new set of rules that its authors believe will be needed for 21st century care robots.

Following recent developments in robotics research, philosopher Professor Tom Sorell of the University of Warwick has helped produce six values to be used in properly designed care-robots.

First fully stated in 1942 in the story Runaround, Asimov's Three Laws of Robotics sought to provide a framework for the relationship between humankind and robots, then mainly creatures of science fiction. Now that robots are widely used in caring for older people, as well as in military and industrial applications, rules for making and interacting with them are a practical and ethical necessity.

The six values are designed to address the circumstances of older people in need of support and are to be embodied in the programming and hardware of the care-robot.

Professor Sorell argues that the six values can be promoted by a care-robot "depending on whether the purpose of the robot is to prolong normal adult autonomy and independence as far as possible into old age, or whether the purpose is to take the load off the support network for an older person".

The Six Values proposed are:

· Autonomy – being able to set goals in life and choose means;

· Independence – being able to implement one's goals without the permission, assistance or material resources of others;

· Enablement – having, or having access to, the means of realizing goals and choices;

· Safety – being able readily to avoid pain or harm;

· Privacy – being able to pursue and realize one's goals and implement one's choices unobserved;

· Social Connectedness – having regular contact with friends and loved ones and safe access to strangers one can choose to meet.

Discussing the values, which he developed in collaboration with Professor Heather Draper of the University of Birmingham for a European Commission-funded project called ACCOMPANY, Professor Sorell said there were "moral reasons why autonomy should be promoted before the alleviation of burdens on carers".

"Older people deserve to have the same choices as other adults, on pain otherwise of having an arbitrarily worse moral status. And where the six values conflict, there is reason for autonomy to be treated as overriding", argues Professor Sorell.

On the question of whether the care-robot is answerable to the older person or carers who might worry about the older person and seek to restrict their activities, Professor Sorell says that the ability of the older person "to lead their life in their own way should prevail" with this being reflected in how the six values are applied.

Professor Sorell recognises that there may be exceptions to the primacy of Autonomy:

"Exceptions might be where older people lack 'capacity' in the legal sense (in which case they would not be autonomous), where they are highly dependent, or where leading life in one's own way is highly likely to lead to the need for rescue".

Care-robots that are designed to promote the six values and assist older people to pursue their own interests are, Professor Sorell argues, "better than robots designed merely to monitor the vital signs and warn of risks and dangers".

"Robots designed to let the user control information about their own routines and activities (including mishaps) are also to be preferred to those engaged in data-sharing with worried relations or health care workers".

The researchers will continue to work on and refine the six values as engineers develop such devices; even Asimov himself continued to refine his own laws in later stories.

Provided by University of Warwick