Seeing depth through a single lens

Researchers at the Harvard School of Engineering and Applied Sciences (SEAS) have developed a way for photographers and microscopists to create a 3D image through a single lens, without moving the camera.

Published in the journal Optics Letters, this improbable-sounding technology relies only on computation and mathematics—no unusual hardware or fancy lenses. The effect is the equivalent of seeing a stereo image with one eye closed.

That's easier said than done, as principal investigator Kenneth B. Crozier, John L. Loeb Associate Professor of the Natural Sciences, explains.

"If you close one eye, depth perception becomes difficult. Your eye can focus on one thing or another, but unless you also move your head from side to side, it's difficult to gain much sense of objects' relative distances," Crozier says. "If your viewpoint is fixed in one position, as a microscope would be, it's a challenging problem."

Offering a workaround, Crozier and graduate student Antony Orth essentially compute how the image would look if it were taken from a different angle. To do this, they rely on the clues encoded within the rays of light entering the camera.

"Arriving at each pixel, the light's coming at a certain angle, and that contains important information," explains Crozier. "Cameras have been developed with all kinds of new hardware—microlens arrays and absorbing masks—that can record the direction of the light, and that allows you to do some very interesting things, such as take a picture and focus it later, or change the perspective view. That's great, but the question we asked was, can we get some of that functionality with a regular camera, without adding any extra hardware?"

The key, they found, is to infer the angle of the light at each pixel, rather than directly measuring it (which standard image sensors and film would not be able to do). The team's solution is to take two images from the same camera position but focused at different depths. The slight differences between these two images provide enough information for a computer to mathematically create a brand-new image as if the camera had been moved to one side.
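The article gives no equations, so the sketch below is only one plausible reading of the idea, not the authors' implementation. It treats the change in brightness between the two focus settings as a flow of light across the image plane, solves a Poisson equation for that flow, and then assumes a Gaussian spread of ray angles around the mean direction at each pixel. The function names, the `dz` and `sigma` parameters, the periodic-boundary FFT solver, and the Gaussian assumption are all illustrative choices.

```python
import numpy as np


def angular_moments(img_a, img_b, dz=1.0, eps=1e-6):
    """Estimate the mean ray direction (mx, my) at each pixel.

    img_a, img_b: 2D grayscale arrays taken from the same position
    but focused at two different depths (hypothetical inputs).
    dz: assumed focus separation, in arbitrary units.
    """
    a = np.asarray(img_a, dtype=float)
    b = np.asarray(img_b, dtype=float)
    mean_i = 0.5 * (a + b)      # intensity midway between the two focus settings
    d_i_dz = (b - a) / dz       # finite-difference change along the focus axis

    # Assumed model: dI/dz = -div(flux), with flux = grad(U),
    # which gives the Poisson equation laplacian(U) = -dI/dz.
    h, w = mean_i.shape
    ky = 2j * np.pi * np.fft.fftfreq(h)[:, None]
    kx = 2j * np.pi * np.fft.fftfreq(w)[None, :]
    lap = kx ** 2 + ky ** 2     # Fourier symbol of the Laplacian
    lap[0, 0] = 1.0             # avoid dividing by zero at the DC term

    u_hat = np.fft.fft2(-d_i_dz) / lap
    u_hat[0, 0] = 0.0           # the constant offset of U is arbitrary

    # Gradient of U gives the transverse light flux.
    flux_x = np.real(np.fft.ifft2(kx * u_hat))
    flux_y = np.real(np.fft.ifft2(ky * u_hat))

    # Mean ray direction = flux divided by intensity.
    mx = flux_x / (mean_i + eps)
    my = flux_y / (mean_i + eps)
    return mean_i, mx, my


def perspective_view(mean_i, mx, my, u, v, sigma=1.0):
    """Synthesize the view from direction (u, v).

    Assumes the ray angles at each pixel are Gaussian-distributed
    around (mx, my) with width sigma.
    """
    return mean_i * np.exp(-((u - mx) ** 2 + (v - my) ** 2) / sigma ** 2)
```

Calling `perspective_view` with a small negative and a small positive `u` would give a left-shifted and a right-shifted view; sweeping `u` across a range yields frames that can be played back as an animation. The FFT solver assumes the image wraps around at its edges, which a real photograph does not, so padding or a different boundary treatment may be needed in practice.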

By stitching these two images together into an animation, Crozier and Orth provide a way for amateur photographers and microscopists alike to create the impression of a stereo image without the need for expensive hardware. They are calling their computational method "light-field moment imaging"—not to be confused with "light field cameras" (like the Lytro), which achieve similar effects using high-end hardware rather than computational processing.

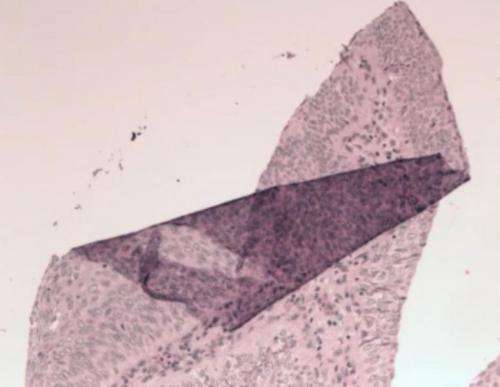

Importantly, the technique offers a new and very accessible way to create 3D images of translucent materials, such as biological tissues.

Biologists can use a variety of tools to create 3D optical images, including light-field microscopes, which are limited in terms of spatial resolution and are not yet commercially available; confocal microscopes, which are expensive; and a computational method called "shape from focus," which uses a stack of images focused at different depths to identify at which layer each object is most in focus. Shape from focus is less sophisticated than Crozier and Orth's new technique because it makes no allowance for overlapping materials, such as a nucleus that might be visible through a cell membrane, or a sheet of tissue that's folded over on itself. Stereo microscopes may be the most flexible and affordable option right now, but they are still not as common in laboratories as traditional, monocular microscopes.

"This method devised by Orth and Crozier is an elegant solution to extract depth information with only a minimum of information from a sample," says Conor L. Evans, an assistant professor at Harvard Medical School and an expert in biomedical imaging, who was not involved in the research. "Depth measurements in microscopy are usually made by taking many sequential images over a range of depths; the ability to glean depth information from only two images has the potential to accelerate the acquisition of digital microscopy data."

"As the method can be applied to any image pair, microscopists can readily add this approach to our toolkit," Evans adds. "Moreover, as the computational method is relatively straightforward on modern computer hardware, the potential exists for real-time rendering of depth-resolved information, which will be a boon to microscopists who currently have to comb through large data sets to generate similar 3D renders. I look forward to using their method in the future."

The new technology also suggests an alternative way to create 3D movies for the big screen.

"When you go to a 3D movie, you can't help but move your head to try to see around the 3D image, but of course it's not going to do anything because the stereo image depends on the glasses," explains Orth, a Ph.D. student in applied physics. "Using light-field moment imaging, though, we're creating the perspective-shifted images that you'd fundamentally need to make that work—and just from a regular camera. So maybe one day this will be a way to just use all of the existing cinematography hardware, and get rid of the glasses. With the right screen, you could play that back to the audience, and they could move their heads and feel like they're actually there."

For the 3D effect to be noticeable, the camera aperture must be wide enough to let in light from a wide range of angles, so that the differences between the two images focused at different depths are distinct. A cellphone camera's aperture proves too small (Orth tried it on his iPhone), but a standard 50 mm lens on a single-lens reflex camera is more than adequate.

Journal information: Optics Letters

Provided by Harvard University