June 21, 2013 weblog

Robots learn how to arrange objects by 'hallucinating' humans into their environment (w/ video)

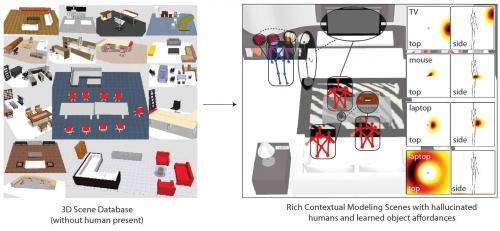

(Phys.org) A team of robotics engineers in the Personal Robotics Lab at Cornell University, led by Ashutosh Saxena, has developed a context-sensitive method that lets robots organize a room. Rather than giving the robot a map of where things belong, the researchers have it "imagine" how a person would use the objects in the room and then place them accordingly.

Traditional robot programming has relied on explicit instructions: pick something up from one place and put it down in another, for example. Tasks that call for some degree of intuition are harder, because the robot needs a way to work out what a sensible result looks like. Suppose a robot is asked to enter a room, note the objects on a table, and arrange them on a desk for a person to use. Arranging objects in a way that makes sense to a human requires some understanding of how people behave. To supply that understanding, the Cornell team gave a test robot a way to imagine what a person in the room would look like while using a given set of objects.

In one demonstration, the researchers programmed a robot to pick up a coffee mug and a computer mouse from a table and place them on a desk in the positions that would seem most logical given human behavior. To do this, they gave the robot what they call "an ability to hallucinate" humans into the room: the robot's software overlays stick-figure humans onto its images of the room. The robot considers various poses as it "imagines" how a person would make the best use of the mouse and mug. Based on this process, it placed the mouse just to the right of the keyboard (because most people are right-handed) and the mug a little farther back, within reach but not so close that it might be knocked over by accident. The result mimics what a person would likely do given the same instructions, which is exactly the point.
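The core idea, scoring candidate spots by how well they would serve a set of imagined people, can be illustrated with a toy sketch. This is not the Cornell team's model (their papers describe a considerably richer probabilistic approach); it is a minimal illustration under stated assumptions: each hallucinated pose is reduced to a single hypothetical right-hand position on the desk, and each object has a hypothetical preferred distance from that hand (near zero for the mouse, a bit farther for the mug). The names `placement_score`, `best_placement`, `preferred_dist`, and `spread` are invented for this example.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]  # (x, y) on the desk surface, in metres (assumed units)


def placement_score(candidate: Point, hands: Sequence[Point],
                    preferred_dist: float, spread: float = 0.1) -> float:
    """Average affinity of a candidate spot over all hallucinated poses.

    Hypothetical simplification: each imagined pose is reduced to one
    right-hand position, and the affinity is a Gaussian bump that peaks when
    the candidate lies exactly preferred_dist metres from that hand.
    """
    total = 0.0
    for hx, hy in hands:
        d = math.hypot(candidate[0] - hx, candidate[1] - hy)
        total += math.exp(-((d - preferred_dist) ** 2) / (2 * spread ** 2))
    return total / len(hands)


def best_placement(candidates: Sequence[Point], hands: Sequence[Point],
                   preferred_dist: float) -> Point:
    """Return the candidate spot with the highest averaged affinity."""
    return max(candidates, key=lambda c: placement_score(c, hands, preferred_dist))


if __name__ == "__main__":
    # Hypothetical right-hand positions from three sampled seated poses.
    hands = [(0.3, 0.2), (0.32, 0.22), (0.28, 0.18)]
    # A coarse grid of candidate spots on a 1 m x 0.6 m desk.
    grid = [(x / 10, y / 10) for x in range(11) for y in range(7)]
    print("mouse:", best_placement(grid, hands, preferred_dist=0.0))
    print("mug:  ", best_placement(grid, hands, preferred_dist=0.3))
```

Averaging over several imagined poses, rather than trusting a single one, is what makes the choice robust: a spot that works for most plausible ways of sitting at the desk beats one that is ideal for only one pose.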

The team will present its findings at the Robotics: Science and Systems 2013 conference in Berlin next week.

More information: Project page: pr.cs.cornell.edu/hallucinatinghumans/

Research papers:

Infinite Latent Conditional Random Fields for Modeling Environments through Humans

Hallucinated Humans as the Hidden Context for Labeling 3D Scenes

via IEEE Spectrum

© 2013 Phys.org