August 15, 2012 report

MPEG hammers out codec that halves bit rate

(Phys.org) -- A draft international standard for a new video compression format was announced today. The draft was issued by the influential Moving Picture Experts Group (MPEG), which met in Stockholm in July. MPEG, formed by the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC), drew 450 participants from 26 countries to the meeting, representing the telecoms, computer, TV and consumer electronics industries. MPEG's discussions and standards shape these industries. In other words, the standard is a big deal.

“High-Efficiency Video Coding (HEVC) was the main focus and was issued as a Draft International Standard (DIS) with substantial compression efficiency over AVC, in particular for higher resolutions where the average savings are around 50 percent,” said Ericsson’s meeting notes.

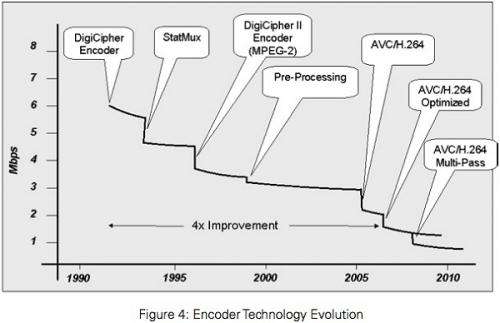

Almost all digital terrestrial, satellite and cable TV services rely on video codecs that MPEG has standardized. The new standard issued this week is all about bandwidth, and the reduction thereof. It is a draft standard for High Efficiency Video Coding, designed to deliver roughly twice the compression of the current H.264/AVC standard, meaning about half the bit rate for the same visual quality. The format may appear in commercial products next year.

The news is especially good for mobile networks, where spectrum is costly. Service providers will be able to launch more video services with the spectrum that is currently available.

“You can halve the bit rate and still achieve the same visual quality, or double the number of television channels with the same bandwidth, which will have an enormous impact on the industry,” said Per Fröjdh, Manager for Visual Technology at Ericsson Research, Group Function Technology.

He was a key figure at the event as chairman of the Swedish MPEG delegation. Ericsson, by the nature of its business, is actively involved with MPEG. (Fröjdh's Visual Technology team is working with MPEG on a new kind of 3-D video compression format, which would do away with 3-D glasses. Fröjdh said the technology could be standardized by 2014.)

Anything to do with video compression over mobile broadband is a key concern for Ericsson, said another Ericsson executive.

Video accounts for a large share of all data sent over networks. According to a study published this year, video is the leading driver of total data traffic on mobile networks across all geographies, averaging 50 percent of that traffic; in some networks, video's share of the data volume is approaching 70 percent.

© 2012 Phys.org