Computing experts unveil superefficient 'inexact' chip

Researchers have unveiled an "inexact" computer chip that challenges the industry's dogmatic 50-year pursuit of accuracy. The design improves power and resource efficiency by allowing for occasional errors. Prototypes presented this week at the ACM International Conference on Computing Frontiers in Cagliari, Italy, are up to 15 times more efficient than today's technology.

The research, which earned best-paper honors at the conference, was conducted by experts from Rice University in Houston, Singapore's Nanyang Technological University (NTU), Switzerland's Center for Electronics and Microtechnology (CSEM) and the University of California, Berkeley.

"It is exciting to see this technology in a working chip that we can measure and validate for the first time," said project leader Krishna Palem, who also serves as director of the Rice-NTU Institute for Sustainable and Applied Infodynamics (ISAID). "Our work since 2003 showed that significant gains were possible, and I am delighted that these working chips have met and even exceeded our expectations."

ISAID is working in partnership with CSEM to create new technology that will allow next-generation inexact microchips to use a fraction of the electricity of today's microprocessors.

"The paper received the highest peer-review evaluation of all the Computing Frontiers submissions this year," said Paolo Faraboschi, the program co-chair of the ACM Computing Frontiers conference and a distinguished technologist at Hewlett Packard Laboratories. "Research on approximate computation matches the forward-looking charter of Computing Frontiers well, and this work opens the door to interesting energy-efficiency opportunities of using inexact hardware together with traditional processing elements."

The concept is deceptively simple: Slash power use by allowing processing components, such as the hardware for adding and multiplying numbers, to make a few mistakes. By cleverly managing the probability of errors and limiting which calculations produce them, the designers found they could simultaneously cut energy demands and dramatically boost performance.
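To make "limiting which calculations produce errors" concrete, here is a minimal sketch of a simplified approximate adder. It is a hypothetical illustration, not the Rice/NTU/CSEM circuit: the upper bits are added exactly, while the `k` low-order bits are only ORed together and never generate a carry. Since `a + b == (a | b) + (a & b)`, the result is always too small by exactly the low `k` bits of `a & b`, so the error is confined to the least significant positions and bounded by `2**k`. The names `approx_add` and `mean_relative_error`, and the 16-bit random test inputs, are assumptions made for this example.

```python
import random


def approx_add(a: int, b: int, k: int) -> int:
    """Hypothetical approximate unsigned adder.

    Upper bits are summed exactly; the k low-order bits are ORed and never
    carry into the upper part. The result is low by exactly (a & b) masked
    to k bits, so the absolute error is always below 2**k.
    """
    mask = (1 << k) - 1
    upper = ((a >> k) + (b >> k)) << k  # exact sum of the upper parts
    lower = (a | b) & mask              # cheap, carry-free lower part
    return upper | lower


def mean_relative_error(k: int, bits: int = 16, trials: int = 20_000, seed: int = 0) -> float:
    """Average of (exact - approx) / exact over random unsigned inputs."""
    rng = random.Random(seed)
    total = 0.0
    counted = 0
    for _ in range(trials):
        a = rng.getrandbits(bits)
        b = rng.getrandbits(bits)
        exact = a + b
        if exact == 0:
            continue  # relative error is undefined for a zero result
        total += (exact - approx_add(a, b, k)) / exact
        counted += 1
    return total / counted if counted else 0.0


if __name__ == "__main__":
    for k in (0, 2, 4, 8):
        print(f"k={k}: mean relative error = {100 * mean_relative_error(k):.4f}%")
```

Raising `k` removes more carry logic but lets larger (still bounded) errors through, which is the basic trade-off the researchers manage.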

One example of the inexact design approach is "pruning," or trimming away some of the rarely used portions of digital circuits on a microchip. Another innovation, "confined voltage scaling," gives back some of the speed gained through pruning in exchange for lower power: because the pruned circuits run faster, their supply voltage can be lowered to cut power demands further.
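The pruning idea can be sketched as a greedy selection problem. This is a hypothetical model, not the team's published algorithm: each circuit node is given an assumed `significance` (how much the output bit it feeds is worth) and an assumed `activity` (how likely it is to affect a result), and nodes with the smallest `significance * activity` product are removed until an error budget is used up. The `Node` class, `prune` function, and carry-node data are all invented for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    """A hypothetical circuit element considered for pruning."""
    name: str
    significance: float  # weight of the output bit it influences, e.g. 2**bit
    activity: float      # assumed probability that it affects a result


def prune(nodes: list[Node], error_budget: float) -> tuple[list[Node], list[Node]]:
    """Greedily remove the cheapest nodes (lowest significance * activity)
    while their cumulative cost stays within error_budget.

    Returns (kept, pruned), with kept in the original order.
    """
    ranked = sorted(nodes, key=lambda n: n.significance * n.activity)
    pruned: list[Node] = []
    spent = 0.0
    for node in ranked:
        cost = node.significance * node.activity
        if spent + cost > error_budget:
            break  # every remaining node costs at least as much
        pruned.append(node)
        spent += cost
    removed = {n.name for n in pruned}
    kept = [n for n in nodes if n.name not in removed]
    return kept, pruned


if __name__ == "__main__":
    # Hypothetical carry-logic nodes of an 8-bit adder, all equally active.
    nodes = [Node(f"carry{i}", significance=2.0 ** i, activity=0.25) for i in range(8)]
    kept, pruned = prune(nodes, error_budget=4.0)
    print("pruned:", [n.name for n in pruned])  # low-order carries go first
    print("kept:  ", [n.name for n in kept])
```

Confined voltage scaling would then act on the smaller, faster circuit: dynamic switching energy scales roughly with the square of the supply voltage, so even a modest voltage reduction compounds the savings from pruning.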

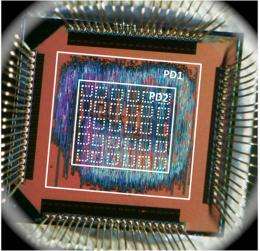

In their initial simulated tests in 2011, the researchers showed that pruning some sections of traditionally designed microchips could improve efficiency in three ways: The pruned chips were twice as fast, used half as much energy and were half the size. In the new study, the team delved deeper and implemented their ideas in the processing elements on a prototype silicon chip.

"In the latest tests, we showed that pruning could cut energy demands 3.5 times with chips that deviated from the correct value by an average of 0.25 percent," said study co-author Avinash Lingamneni, a Rice graduate student. "When we factored in size and speed gains, these chips were 7.5 times more efficient than regular chips. Chips that got wrong answers with a larger deviation of about 8 percent were up to 15 times more efficient."
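The article does not spell out how the "times more efficient" figures combine energy, size and speed. A minimal sketch, assuming the overall figure of merit is simply the product of the individual improvement factors (an energy-delay-area style metric), shows how the 2011 simulation results and the new prototype numbers relate. The function name `combined_gain` is hypothetical.

```python
def combined_gain(energy_gain: float, speed_gain: float, area_gain: float) -> float:
    """Overall efficiency gain, ASSUMING the figure of merit is the product
    of the individual energy, speed (delay) and area improvement factors."""
    return energy_gain * speed_gain * area_gain


# 2011 simulations: twice as fast, half the energy, half the size.
sim_2011 = combined_gain(energy_gain=2.0, speed_gain=2.0, area_gain=2.0)

# Prototype at 0.25% average deviation: 3.5x less energy, 7.5x overall.
# Under the same multiplicative assumption, speed and area together
# would account for the remaining factor:
implied_speed_area = 7.5 / 3.5

print(f"2011 simulated gain: {sim_2011:.1f}x")                    # 8.0x
print(f"implied speed x area factor: {implied_speed_area:.2f}x")  # 2.14x
```

If the researchers' actual metric weights these factors differently, the implied split would change; the sketch only shows the arithmetic under the stated assumption.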

Project co-investigator Christian Enz, who leads the CSEM arm of the collaboration, said, "Particular types of applications can tolerate quite a bit of error. For example, the human eye has a built-in mechanism for error correction. We used inexact adders to process images and found that relative errors up to 0.54 percent were almost indiscernible, and relative errors as high as 7.5 percent still produced discernible images."
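The image-processing experiment can be approximated in miniature. This sketch is hypothetical throughout: it averages two synthetic 64x64 grayscale images using a simplified approximate adder (exact upper bits, low `k` bits ORed without carry), then measures an aggregate relative error, defined here as the sum of absolute pixel differences divided by the sum of exact pixel values. The article does not say which error definition or adder the CSEM team used, so both are assumptions, as are the test images.

```python
from __future__ import annotations


def approx_add(a: int, b: int, k: int) -> int:
    """Hypothetical approximate adder: exact upper bits, ORed low k bits."""
    mask = (1 << k) - 1
    return (((a >> k) + (b >> k)) << k) | ((a | b) & mask)


def blend(img1: list[list[int]], img2: list[list[int]], k: int) -> list[list[int]]:
    """Average two same-sized grayscale images pixel by pixel, using the
    approximate adder for the sum and an exact right shift to halve it."""
    return [[approx_add(p, q, k) >> 1 for p, q in zip(row1, row2)]
            for row1, row2 in zip(img1, img2)]


def relative_error(reference: list[list[int]], approx: list[list[int]]) -> float:
    """Sum of absolute pixel differences divided by the sum of reference pixels."""
    diff = sum(abs(r - a) for ref_row, apx_row in zip(reference, approx)
               for r, a in zip(ref_row, apx_row))
    total = sum(sum(row) for row in reference)
    return diff / total if total else 0.0


# Hypothetical 8-bit test images built from simple patterns.
SIZE = 64
img_a = [[(3 * x + y) % 256 for x in range(SIZE)] for y in range(SIZE)]
img_b = [[(5 * y + 2 * x) % 256 for x in range(SIZE)] for y in range(SIZE)]

exact = blend(img_a, img_b, k=0)  # k=0 makes the adder exact
for k in (1, 2, 3, 4, 5):
    err = relative_error(exact, blend(img_a, img_b, k))
    print(f"k={k}: relative error {100 * err:.2f}%")
```

Sweeping `k` and viewing the blended images side by side is one way to find where errors stop being "almost indiscernible" for a given application.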

Palem, the Ken and Audrey Kennedy Professor of Computing at Rice, who holds a joint appointment at NTU, said likely initial applications for the pruning technology will be in application-specific processors, such as special-purpose "embedded" microchips like those used in hearing aids, cameras and other electronic devices.

The inexact hardware is also a key component of ISAID's I-slate educational tablet. The low-cost I-slate is designed for Indian classrooms with no electricity and too few teachers. Officials in India's Mahabubnagar District announced plans in March to deploy 50,000 I-slates in middle and high school classrooms over the next three years.

The hardware and graphic content for the I-slate are being developed in tandem. Pruned chips are expected to cut power requirements in half and allow the I-slate to run on solar power from small panels similar to those used on handheld calculators. Palem said the first I-slates and prototype hearing aids to contain pruned chips are expected by 2013.

Provided by Rice University