Advanced deep learning and UAV imagery boost precision agriculture for future food security

A research team has investigated the efficacy of AlexNet, an advanced Convolutional Neural Network (CNN) variant, for automatic crop classification using high-resolution aerial imagery from UAVs. Their findings demonstrated that AlexNet consistently outperformed conventional CNNs.

This study highlights the potential of integrating deep learning with UAV data to enhance precision agriculture, emphasizing the importance of early stopping techniques to prevent overfitting and suggesting further optimization for broader crop classification applications.

By 2030, the global population is projected to reach 9 billion, significantly increasing the demand for food. Natural disasters and climate change are already major threats to food security, making timely and accurate crop classification necessary for sustaining adequate food production. Despite advances in remote sensing and machine learning for crop classification, challenges remain, such as reliance on expert knowledge and information loss.

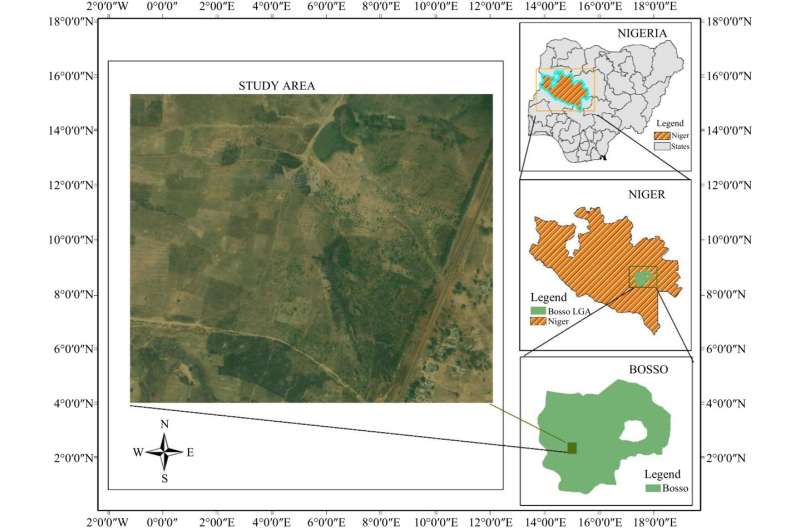

A research article published in Technology in Agronomy on 28 May 2024 assesses the performance of AlexNet, a CNN-based model, for crop type classification on mixed small-scale farms.

In this study, AlexNet and a conventional CNN model were used to evaluate crop classification efficiency on high-resolution UAV imagery. Both models were trained for 30–60 epochs with a learning rate of 0.0001 and a batch size of 32. AlexNet, with its 8-layer depth, achieved a training accuracy of 99.25% and a validation accuracy of 71.81% at 50 epochs, showing its superior performance.

By contrast, the 5-layer CNN model reached its highest training accuracy of 62.83% and validation accuracy of 46.98% at 60 epochs. AlexNet's performance dropped slightly at 60 epochs because of overfitting, which underscores the need for early stopping mechanisms.
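One simple way to read these figures is through the gap between training and validation accuracy, a common rough indicator of overfitting. The short Python sketch below computes that gap from the accuracies reported above; the function name and the dictionary layout are illustrative assumptions, not part of the study.

```python
# Accuracies (in percent) as reported in the article.
reported = {
    "AlexNet (50 epochs)": {"train": 99.25, "val": 71.81},
    "5-layer CNN (60 epochs)": {"train": 62.83, "val": 46.98},
}


def generalization_gap(train_acc, val_acc):
    """Training accuracy minus validation accuracy, in percentage points."""
    return round(train_acc - val_acc, 2)


# Hypothetical helper usage: one gap per model.
gaps = {
    name: generalization_gap(acc["train"], acc["val"])
    for name, acc in reported.items()
}

for name, gap in gaps.items():
    print(f"{name}: gap of {gap} percentage points")
```

On these numbers AlexNet is ahead on both training and validation accuracy, but it also shows the wider gap between the two, which is consistent with the overfitting concern raised in the study.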

The results indicate that while both models generally improve with more epochs, AlexNet consistently outperforms the conventional CNN, particularly in handling complex datasets and maintaining high accuracy levels.

This suggests that AlexNet is better suited for accurate and efficient crop classification in precision agriculture, although care must be taken to mitigate overfitting in prolonged training.

According to the study's lead researcher, Oluibukun Gbenga Ajayi, "In light of the observed overfitting, we strongly recommend implementing early stopping techniques, as demonstrated in this study at 50 epochs, or modifying classification hyperparameters to optimize AlexNet's performance whenever overfitting is detected."
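The general idea of early stopping can be sketched as a patience-based rule: track the best validation accuracy seen so far and stop once it has failed to improve for a set number of epochs. The Python sketch below is a generic illustration and not the procedure used in the study; the function name, the `patience` and `min_delta` parameters, and the validation curve are all made up for the example.

```python
def best_epoch_with_early_stopping(val_accuracies, patience=2, min_delta=0.0):
    """Return (best_epoch, stop_epoch) for a sequence of validation accuracies.

    Epochs are numbered from 1. Training is considered stopped once the
    validation accuracy has not improved by more than `min_delta` for
    `patience` consecutive epochs.
    """
    best_acc = float("-inf")
    best_epoch = None
    stale_epochs = 0

    for epoch, acc in enumerate(val_accuracies, start=1):
        if acc > best_acc + min_delta:
            # New best: remember it and reset the patience counter.
            best_acc, best_epoch, stale_epochs = acc, epoch, 0
        else:
            stale_epochs += 1
            if stale_epochs >= patience:
                return best_epoch, epoch

    # Ran out of epochs without triggering the stopping rule.
    return best_epoch, len(val_accuracies)


# Illustrative validation curve (invented values, NOT data from the study).
illustrative_curve = [0.40, 0.55, 0.63, 0.70, 0.72, 0.71, 0.69, 0.68]
best, stopped = best_epoch_with_early_stopping(illustrative_curve, patience=2)
print(f"best epoch: {best}, stopped at epoch: {stopped}")
```

In practice the model weights from the best epoch would be saved and restored, so that the later, overfitted epochs do not affect the final classifier.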

Future research will focus on expanding AlexNet's capabilities, optimizing pre-processing, and refining hyperparameters to further enhance crop classification accuracy and support global food security efforts.

More information: Oluibukun Gbenga Ajayi et al, Optimizing crop classification in precision agriculture using AlexNet and high resolution UAV imagery, Technology in Agronomy (2024). DOI: 10.48130/tia-0024-0009

Provided by Chinese Academy of Sciences