'Opting in' to see information can reduce hiring bias

A new study from an ILR School expert offers a pathway to reducing bias in hiring while preserving managers' autonomy—by encouraging hiring managers to avoid receiving potentially biasing information about applicants.

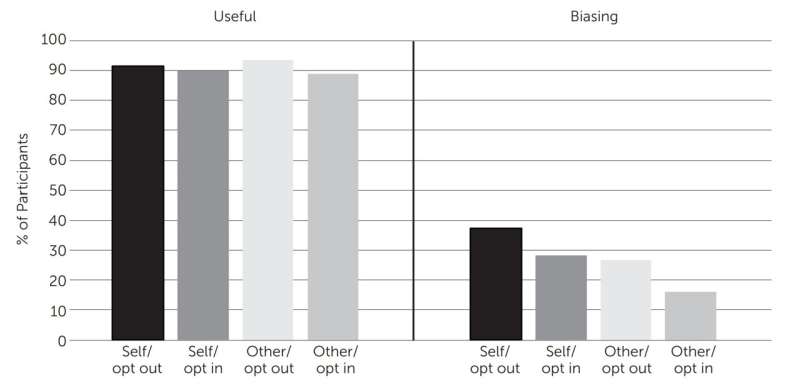

The researchers found that study participants screening mock job applicants were less likely to choose to see potentially biasing applicant information when they were instructed to opt into the information they wanted to see than when they had to opt out of the information they did not want to see.

Sean Fath, assistant professor of organizational behavior at the ILR School, wrote the paper with his Duke University co-authors, Richard Larrick and Jack Soll. The article, "Encouraging Self-Blinding in Hiring," was published in Behavioral Science & Policy.

"We found that having to actively select each piece of information leads individuals to be a little more thoughtful about what they're selecting," Fath said.

"The main takeaway was that if you require people to actively opt into what information they want to see, as opposed to just giving it all to them and trusting them to opt out of the stuff likely to cause bias, you'll get better outcomes in terms of what they ultimately choose to receive," he said.

Participants were also less likely to choose potentially biasing information for others. "We know that people tend to think that others are more susceptible to bias than they are themselves," Fath said. "So, if they are thinking of what information might likely bias one of their peers, they will tend to avoid that information."

And the study participants were less likely to elect to see biasing information when the possibility of bias was relatively transparent—such as information that reveals the applicant's race or gender—than when it was relatively nontransparent—such as the applicant's name and photograph.

"The notion is that people see the availability of race, ethnicity and gender information and it sets off an alarm bell in their head that tells them, 'Oh. This is a time when I might be susceptible to bias,'" Fath said. "But if you give them that information, just packaged in a different way, such as with a name and a photo, it doesn't set off the same alarm. In reality the name and photo will likely convey race, ethnicity and gender and can sometimes convey even more biasing information, such as attractiveness and social class."

During the experiment, roughly 800 participants with hiring experience completed a mock hiring task, screening applicants for a hypothetical position and determining which to advance to the interview stage. All participants received a checklist from which they could choose to see any of seven items of information available about the applicants.

Of the seven, five items contained information that is commonly requested in job applications, such as education credentials and work history. The other two items (the applicant's race and gender in one condition, or the applicant's name and photo in the other) did not indicate likely job performance and had the potential to trigger bias.

The participants were then randomly assigned to either opt into the items of information they wanted or opt out of information they did not. Participants were also randomly assigned to choose the information they would like to see if they were screening applicants, or what information they would like a peer to see when screening. Finally, participants were randomly assigned to either a situation in which the potentially biasing information was transparently likely to cause bias—the applicants' race and gender—or less obviously likely to cause bias—the applicants' name and photo.
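The three random assignments described above can be pictured as a small factorial design. The following is only an illustrative sketch, not the researchers' actual procedure: it assumes the three two-level factors were fully crossed within a single sample, which the article does not state, and the factor labels, function names, and the figure of exactly 800 participants are hypothetical stand-ins.

```python
import itertools
import random

# Hypothetical labels for the three two-level factors described in the text.
FACTORS = {
    "choice_mode": ["opt_in", "opt_out"],              # opt into vs. opt out of items
    "chooser_target": ["self", "peer"],                # choosing for oneself vs. a peer
    "bias_cue": ["race_and_gender", "name_and_photo"], # transparent vs. less obvious
}


def all_conditions():
    """Return every combination of factor levels (2 x 2 x 2 = 8 cells)."""
    names = list(FACTORS)
    return [dict(zip(names, levels))
            for levels in itertools.product(*FACTORS.values())]


def assign(participant_ids, seed=0):
    """Independently assign each participant to one of the eight cells at random."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    cells = all_conditions()
    return {pid: rng.choice(cells) for pid in participant_ids}


# "Roughly 800" participants in the article; 800 is an assumed round number.
assignments = assign(range(800))
```

Under these assumptions, each participant lands in one of eight cells, and the comparisons reported in the article correspond to contrasting the two levels of each factor.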

The participants ultimately selected fewer of the potentially biasing items of information when they needed to opt into information, when they were making a choice for others, and when the information was transparently likely to cause bias.

The findings suggest two policy changes organizations could implement to reduce hiring bias. Importantly, both are behavioral nudges: They only encourage people to make certain choices while preserving their autonomy to ultimately do whatever they prefer.

First, organizations could appoint someone to itemize the different types of information available about job applicants and then have hiring managers opt into, rather than opt out of, the information they want to see. Second, organizations could train hiring managers to first consider what information they would provide to a colleague evaluating applicants before deciding what information to opt into themselves.

"People tend to think others are more susceptible to bias than they are, so they think others shouldn't receive potentially biasing information," Fath said. "Once they've made that decision for someone else, a desire to be cognitively consistent is probably going to lead them to make the same choice for themselves."

More information: Fath, S. et al, Encouraging self-blinding in hiring, Behavioral Science & Policy (2023). behavioralpolicy.org/wp-conten … SP_Fath_09-06-23.pdf

Provided by Cornell University