
Snapshot multispectral imaging using a diffractive optical network

Multispectral imaging has fueled major advances in fields including environmental monitoring, astronomy, agricultural science, biomedicine, medical diagnostics and food quality control. The most common and simplest form of spectral imaging device is the color camera, which collects information from red (R), green (G) and blue (B) color channels.

The traditional design of an RGB color camera places spectral filters over a periodically repeating array of 2×2 pixels. Each subpixel is covered by an absorptive spectral filter that transmits one of the red, green, or blue channels while blocking the others.
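To make the conventional layout concrete, here is a minimal Python sketch of how a repeating 2×2 filter tile samples a scene. The RGGB arrangement in `TILE` and the `mosaic` helper are illustrative assumptions, not details from the article. The sketch only shows that each sensor pixel records a single channel while the light in the other channels is absorbed.

```python
# Hypothetical 2x2 filter tile; an RGGB (Bayer-style) layout is assumed here.
TILE = [["R", "G"],
        ["G", "B"]]


def mosaic(scene):
    """Sample a scene with a periodically repeating 2x2 filter tile.

    `scene[y][x]` is a dict of channel intensities, e.g. {"R": r, "G": g, "B": b}.
    Each sensor pixel keeps only the channel its filter transmits; the other
    channels are absorbed and never reach the sensor.
    """
    return [
        [scene[y][x][TILE[y % 2][x % 2]] for x in range(len(scene[0]))]
        for y in range(len(scene))
    ]


# Usage: a uniform 4x4 scene yields a raw frame where each pixel holds one channel.
scene = [[{"R": 1.0, "G": 0.5, "B": 0.25} for _ in range(4)] for _ in range(4)]
raw = mosaic(scene)
print(raw[0][:2], raw[1][:2])  # [1.0, 0.5] [0.5, 0.25]
```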

Despite the widespread use of this design, scaling absorptive filter arrays up to many distinct color bands to collect richer spectral information is difficult because of their low power efficiency, high spectral cross-talk and poor color representation quality.
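The power-efficiency problem follows from simple arithmetic. Assuming flat-spectrum illumination divided into N equal-width bands, and ideal filters that pass their own band and absorb everything else, each filtered pixel can pass at most 1/N of the incident power. The hypothetical `absorptive_efficiency_ceiling` function below simply encodes that idealized bound; it is an illustration, not a model taken from the paper.

```python
def absorptive_efficiency_ceiling(num_bands, in_band_transmission=1.0):
    """Idealized upper bound on the fraction of incident power one filtered
    pixel passes.

    Assumes flat-spectrum light split into `num_bands` equal-width bands and a
    filter that transmits `in_band_transmission` of its own band while
    absorbing every other band completely.
    """
    if num_bands < 1:
        raise ValueError("num_bands must be at least 1")
    return in_band_transmission / num_bands


for n in (3, 16):
    print(n, f"{absorptive_efficiency_ceiling(n):.2%}")
# 3 33.33%
# 16 6.25%
```

Under these assumptions an RGB mosaic passes at most about a third of the light at each pixel, and a 16-band absorptive array at most 6.25%, before accounting for the losses of real, non-ideal filters.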

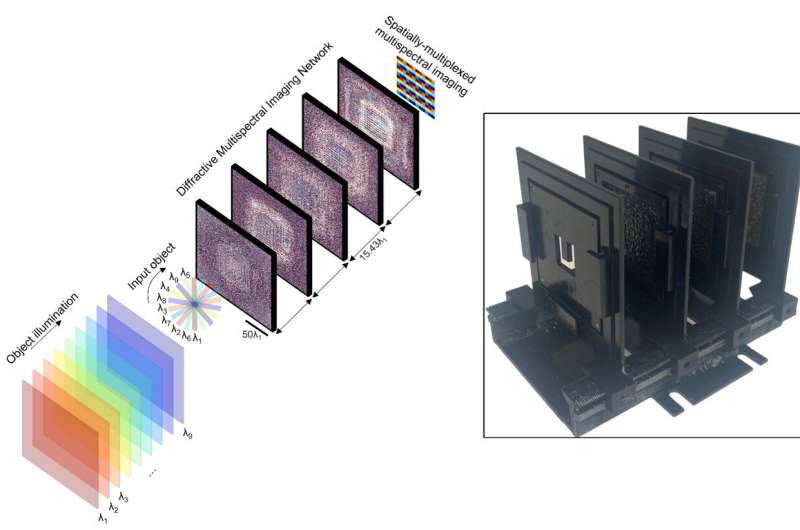

UCLA researchers have recently introduced a snapshot multispectral imager that uses a diffractive optical network, instead of absorptive filters, to direct 16 unique spectral bands onto periodically repeating pixel positions across the output image field of view, forming a virtual multispectral pixel array. Optimized using deep learning, the diffractive network spatially separates the input spectral channels onto distinct pixels at the output image plane. It thus serves as a virtual spectral filter array that preserves the spatial information of the input scene or objects and instantly yields an image cube without any image reconstruction algorithm.
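Because each spectral band lands on its own repeating set of pixels, recovering the image cube amounts to regrouping pixels rather than running a reconstruction algorithm. The sketch below assumes, as a hypothetical layout, that the 16 bands tile the sensor as a 4×4 virtual pixel, with band index 4·(row mod 4) + (column mod 4); the actual band-to-pixel assignment is set by the trained diffractive network, and `to_image_cube` is an illustrative helper, not the authors' code.

```python
PERIOD = 4  # assumed 4x4 virtual pixel, i.e. 4 * 4 = 16 spectral bands


def to_image_cube(frame, period=PERIOD):
    """Regroup a monochrome frame into an image cube cube[band][row][col].

    Assumes (hypothetically) that pixel (y, x) carries band
    period * (y % period) + (x % period). The cube is built purely by strided
    sampling; no reconstruction algorithm is involved.
    """
    h, w = len(frame), len(frame[0])
    if h % period or w % period:
        raise ValueError("frame dimensions must be multiples of the period")
    return [
        [[frame[y][x] for x in range(dx, w, period)] for y in range(dy, h, period)]
        for dy in range(period)
        for dx in range(period)
    ]


# Usage: an 8x8 sensor frame becomes 16 band images of 2x2 pixels each.
frame = [[y * 8 + x for x in range(8)] for y in range(8)]
cube = to_image_cube(frame)
print(len(cube), cube[0], cube[5])  # 16 [[0, 4], [32, 36]] [[9, 13], [41, 45]]
```

Under this assumed layout, each band image keeps 1/16 of the sensor's pixels, which is the spatial-sampling trade-off of any periodic filter-array-style arrangement.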

Therefore, this diffractive multispectral imaging network can virtually convert a monochrome image sensor into a snapshot multispectral imaging device without conventional spectral filters or digital algorithms.

The diffractive network-based multispectral imager, published in Light: Science & Applications, is reported to offer both high spatial imaging quality and high spectral signal contrast. The authors showed that an average transmission efficiency of ~79% across the distinct bands could be achieved without major compromise to the system's spatial imaging performance or spectral signal contrast.

More information: Deniz Mengu et al., Snapshot multispectral imaging using a diffractive optical network, Light: Science & Applications (2023). DOI: 10.1038/s41377-023-01135-0

Journal information: Light: Science & Applications