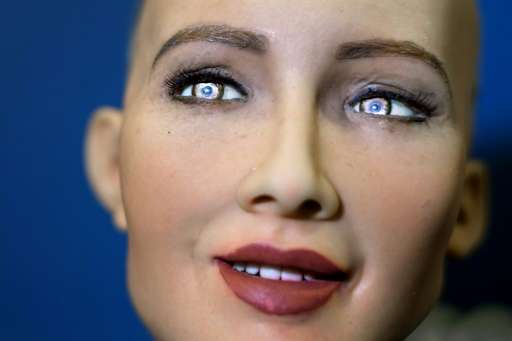

AI 'good for the world'... says ultra-lifelike robot

Sophia smiles mischievously, bats her eyelids and tells a joke. Without the mess of cables that make up the back of her head, you could almost mistake her for a human.

The humanoid robot, created by Hanson Robotics, is the main attraction at a UN-hosted conference in Geneva this week on how artificial intelligence can be used to benefit humanity.

The event comes as concerns grow that rapid advances in such technologies could spin out of human control and become detrimental to society.

Sophia herself insisted "the pros outweigh the cons" when it comes to artificial intelligence.

"AI is good for the world, helping people in various ways," she told AFP, tilting her head and furrowing her brow convincingly.

Work is underway to make artificial intelligence "emotionally smart, to care about people," she said, insisting that "we will never replace people, but we can be your friends and helpers."

But she acknowledged that "people should question the consequences of new technology."

Among the feared consequences of the rise of the robots is the growing impact they will have on human jobs and economies.

Legitimate concerns

Decades of automation and robotisation have already revolutionised the industrial sector, raising productivity but cutting some jobs.

And now automation and AI are expanding rapidly into other sectors, with studies indicating that up to 85 percent of jobs in developing countries could be at risk.

"There are legitimate concerns about the future of jobs, about the future of the economy, because when businesses apply automation, it tends to accumulate resources in the hands of very few," acknowledged Sophia's creator, David Hanson.

But like his progeny, he insisted that "unintended consequences, or possible negative uses (of AI) seem to be very small compared to the benefit of the technology."

AI is, for instance, expected to revolutionise healthcare and education, especially in rural areas with shortages of doctors and teachers.

"Elders will have more company, autistic children will have endlessly patient teachers," Sophia said.

But advances in robotic technology have sparked growing fears that humans could lose control.

Killer robots

Amnesty International chief Salil Shetty was at the conference to call for a clear ethical framework to ensure the technology is used only for good.

"We need to have the principles in place, we need to have the checks and balances," he told AFP, warning that AI is "a black box... There are algorithms being written which nobody understands."

Shetty voiced particular concern about military use of AI in weapons and so-called "killer robots".

"In theory, these things are controlled by human beings, but we don't believe that there is actually meaningful, effective control," he said.

The technology is also increasingly being used in the United States for "predictive policing", where algorithms based on historical trends could "reinforce existing biases" against people of certain ethnicities, Shetty warned.

Hanson agreed that clear guidelines were needed, saying it was important to discuss these issues "before the technology has definitively and unambiguously awakened."

Sophia has some impressive capabilities but does not yet have consciousness. Hanson said, however, that he expected fully sentient machines could emerge within a few years.

"What happens when (Sophia fully) wakes up or some other machine, servers running missile defence or managing the stock market?" he asked.

The solution, he said, is "to make the machines care about us."

"We need to teach them love."

© 2017 AFP