Technology that lets self-driving cars, robots see

LiDAR, once used in the Apollo 15 lunar mission, has shrunk in size and cost, making it easier for researchers and product makers to bring the 3D vision mapping technology to smart devices.

As the population of internet-connected things continues to rise, how will autonomous robotic devices see and be smart enough to navigate around obstacles, each other and people in the real world?

Light Detection And Ranging (LiDAR) is one answer.

The remote sensing technology has been around since the 1960s. Back then, it required enormous scanners that filled planes and cost hundreds of thousands of dollars.

It is increasingly affordable, and in some configurations can fit inside something the size of a baseball.

It is widely used for surveying and for mapping in geological research and archaeology.

It works like this: A scanner fires infrared laser pulses that bounce off solid surfaces. The time each pulse takes to return gives the distance to that surface, and many such measurements together form a 3D image. In the case of robots, that 3D image is interpreted by the computer system, which is coded with instructions for how the robot should react.
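
As a rough illustration of how a measured distance becomes a point in a 3D image, here is a minimal Python sketch. It assumes a pulsed, time-of-flight scanner that reports a round-trip time along with the beam's horizontal and vertical angles; the function names and the angle convention are illustrative assumptions, not any particular sensor's interface.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in a vacuum


def range_from_round_trip(round_trip_s: float) -> float:
    """Distance to the surface in metres.

    The pulse travels out and back, so the one-way distance is half
    the total path covered during the round trip.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def to_point(range_m: float, azimuth_deg: float, elevation_deg: float):
    """Convert one range reading plus beam angles into an (x, y, z) point.

    Assumed convention (hypothetical): azimuth is measured in the
    horizontal plane from the x axis, elevation upward from that plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the ground plane
    return (
        horizontal * math.cos(az),
        horizontal * math.sin(az),
        range_m * math.sin(el),
    )


if __name__ == "__main__":
    # A pulse that returns after about 66.7 nanoseconds hit something ~10 m away.
    distance = range_from_round_trip(66.7e-9)
    print(round(distance, 2), to_point(distance, azimuth_deg=45.0, elevation_deg=0.0))
```

Repeating this for every pulse in a sweep yields the cloud of points that the robot's software then interprets.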

Like most technologies that survive the test of time, LiDAR technology has evolved.

In fact, police today use LiDAR speed guns to clock vehicles that exceed the speed limit.

A small, cutting-edge LiDAR sensor can be purchased for $8,000. While this is still expensive, advancements in LiDAR technology and the rise in robot and autonomous machine research have combined to create a boom in projects that are pushing the limits of what the environment-mapping technology can do.

Deloitte estimates that this year's $18 billion market for sales of robots for logistics, packaging and materials handling will nearly double by 2020. The research firm stated that many of these robots are already designed to interact directly with consumers or assist them with shopping.

These machines need to somehow see in order to function properly.

"LiDAR systems have been an absolutely necessary tool for the advancement of mobile robots," said Charles Grinnell, CEO of Harvest Automation, which builds autonomous agricultural robots.

"LiDAR is a great all-in-one solution for us."

Then there's the Cheetah running robot. This four-legged, 70-pound robot, developed at MIT and funded by the Defense Advanced Research Projects Agency (DARPA), can run untethered at 13 mph over a flat course.

Now it can jump over hurdles autonomously with the help of an onboard LiDAR setup. The system lets the robot spot approaching obstacles, then calculate their size and distance.

A series of path-planning algorithms tells the robot when and how high to jump, all without any human help.

The Cheetah can clear hurdles 18 inches high—more than half its own height—while running 5 mph. On a treadmill, the robot can make it over almost three-quarters of obstacles. On a track, with more space and time to make its calculations, the robot has a 90 percent success rate.

The Harvest Automation HV-100 has a more benign purpose: helping move and place plants with exacting precision.

Nicknamed "Harvey," the 20-inch-tall autonomous model is already zipping around nurseries and fields across the country.

LiDAR helps these robots identify their location and determine where to place the plants they're carrying amid thousands of others—and avoid obstacles in the way.

"Our robots work with people in the same space, so we are constantly on the lookout to avoid collisions," said Grinnell.

This plant placement is also important for growers to optimize space while minimizing labor costs and preventing worker injury related to repetitive tasks.

"It's critical for mobile robots to process movement and sense obstacles," said Eric Mantion, an evangelist for Intel RealSense camera technology. RealSense technology, which is built into many new laptop and all-in-one PC models, gives computer vision to experimental drones and robots.

Mantion said that just as a high jumper's brain needs to process every step of a jump (the approach, the launch, the clearing of the bar), robots also have to process all of these types of movements to function in the real world.

"The more efficiently these algorithms can be processed, the more responsive and successful the outcome," Mantion said.

RealSense is much smaller and more affordable than LiDAR, making it possible to create new consumer robotic devices. One example is the iRobot butler, which uses RealSense cameras to navigate hotel hallways.

In addition to helping researchers pioneer new capabilities for autonomous machines, LiDAR sensors are being used by a number of car manufacturers. Together with other kinds of navigational systems, LiDAR helps direct self-driving vehicles.

Recently, however, researchers have shown that LiDAR sensors can be fooled from more than 300 feet away by someone using a specially modified laser pointer.

Hackers could conceivably make a self-driving car stop or swerve by convincing the sensor that it is about to hit an imaginary obstacle like a car, person or wall, said Jonathan Petit with University College Cork's Computer Security Group.

"LiDAR uses light, an unregulated spectrum that is accessible to anyone," he explained.

Luckily there are ways to mitigate such attacks, he says, such as identifying and removing outliers from the raw LiDAR data.
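
The article does not say how such filtering is done in practice. One simple way to picture it, offered only as a sketch under stated assumptions, is to compare each range reading in a sweep with the median of its neighbors and discard readings that stand far apart. The function name, window size and threshold below are hypothetical choices, and this is not Petit's method.

```python
from statistics import median


def remove_range_outliers(ranges, window=5, max_jump_m=1.0):
    """Return a copy of `ranges` with isolated spikes replaced by None.

    ranges:     distances in metres from one sweep, in scan order
    window:     how many readings (centred on the current one) to compare against
    max_jump_m: allowed difference from the neighbours' median (assumed value)
    """
    ranges = list(ranges)
    half = window // 2
    cleaned = []
    for i, reading in enumerate(ranges):
        lo = max(0, i - half)
        hi = min(len(ranges), i + half + 1)
        # Neighbouring readings on both sides, excluding the reading itself.
        neighbours = ranges[lo:i] + ranges[i + 1:hi]
        if neighbours and abs(reading - median(neighbours)) > max_jump_m:
            cleaned.append(None)  # treat as an outlier and drop it
        else:
            cleaned.append(reading)
    return cleaned


if __name__ == "__main__":
    # A wall about 20 m away, with one suspicious return claiming 2 m.
    sweep = [20.0, 20.1, 19.9, 2.0, 20.0, 20.2, 20.1]
    print(remove_range_outliers(sweep))
```

A filter this simple would also discard a genuinely thin object that produces a single return, so a real system would need to weigh more evidence, such as whether the reading persists across sweeps or agrees with other sensors.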

With its nearly instantaneous 360-degree sweeps, however, LiDAR is ideal for covering large areas quickly. That's why it's so popular with geologists, surveyors and archaeologists, who often mount sensors on planes.

Some companies specialize in the scanning process itself, capturing data for a variety of clients.

London-based ScanLab works with historians, climatologists and forensic anthropologists to create large-scale 3D images of everything from historic sites to clothes.

They've helped researchers at the University of Cambridge calculate how ice floes in the Arctic are being affected by climate change and recreated the beaches of Normandy for a PBS documentary about D-Day.

Some of their projects edge into the morbid, such as scans of concentration camps in the former Yugoslavia and the coat Lord Nelson wore at the Battle of Trafalgar, complete with bullet hole and bloodstains.

Last year, ScanLab technicians descended 70 feet beneath the streets of London to record images of part of the old London Post Office Railway. The 23-mile "Mail Rail" carried post and packages until 2003, when it was almost completely abandoned. A total of 223 scans resulted in more than 11 billion data points, filling more than a terabyte of storage.

CyArk, a nonprofit, was launched to create 3D models of threatened cultural heritage sites around the world before they are lost. So far their free online library includes LiDAR scans of the Leaning Tower of Pisa, Chichén Itzá and the towering stone moai of Easter Island.

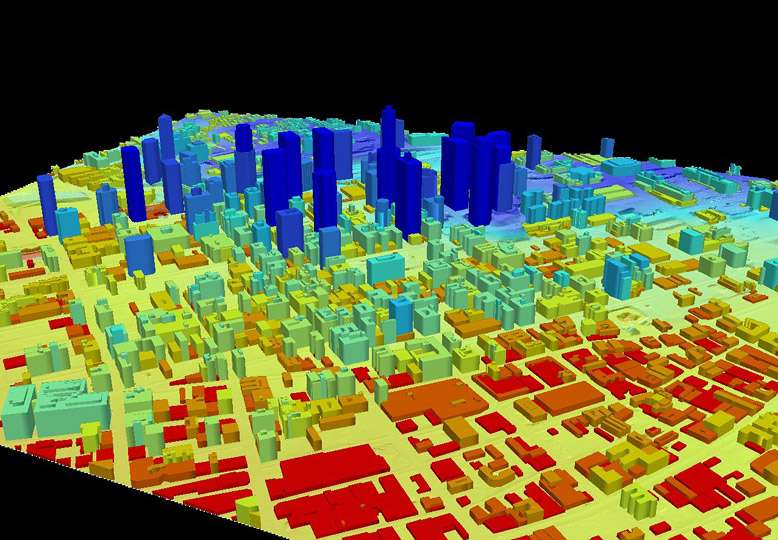

In 2014, the organization scanned 5.71 square miles of the historic core of New Orleans, creating a virtual version that is accurate to within about five inches. The idea is to capture the city as it stands today—and to have something to guide rebuilding in the aftermath of a natural disaster like Hurricane Katrina.

Some LiDAR projects have a more artistic bent. In 2008, Radiohead filmed the video for the song "House of Cards" entirely using laser scans, in part by driving a LiDAR-equipped van around Florida.

"In the Eyes of the Animal," a virtual reality (VR) exhibit created by Marshmallow Laser Feast for the Abandon Normal Devices Festival in the U.K., gives users an immersive experience of what it's like to be an animal in the wild.

First they used LiDAR, CT scanning and video-equipped drones to record part of Grizedale Forest in Britain's Lake District. To experience the virtual forest, users don sensory units the size of beach balls that completely enclose their heads.

Inside, VR goggles and binaural headphones simulate what a fox, bird or deer might see and hear, down to the specific field of vision and light wavelengths. A wearable subwoofer adds a physical dimension.

Despite the awesome things LiDAR enables people to do, Grinnell says price is still an issue. The systems are much cheaper than they used to be, but the one that guides each HV-100 is the single most expensive part of the robot, accounting for about 20 percent of the $30,000 price tag.

"The cost represents a big barrier to creating more commercially viable solutions using mobile robots."

All this means that LiDAR technology isn't nearly as inexpensive and ubiquitous as something like GPS, at least not yet. Luckily, there are plenty of important projects to motivate experts to continue working toward even better solutions.

Provided by Intel