June 25, 2015 report

How the brain combines information across sensory modalities

(Phys.org)—Visual information is dense, and researchers have long theorized that when visual stimuli are confusing or ambiguous, the brain must apply additional contextual information in order to interpret them. A group of Korean researchers used one source of visual confusion, called binocular rivalry, to study how the brain supplies that additional context. They have published the results of their study in the Proceedings of the National Academy of Sciences.

Binocular rivalry occurs when the two eyes view dissimilar ocular stimuli, and interocular competition replaces stable binocular single vision. When the left and right eye are in disagreement, the brain resolves the conflict via compromise, alternating visual awareness between the two viewpoints over time—the input from one eye will be suppressed from consciousness while the other becomes dominant. This rivalry phenomenon offers researchers the opportunity to study the inferential nature of perception and the brain's apparent tendency to apply additional sensory context when presented with confusing visual input.

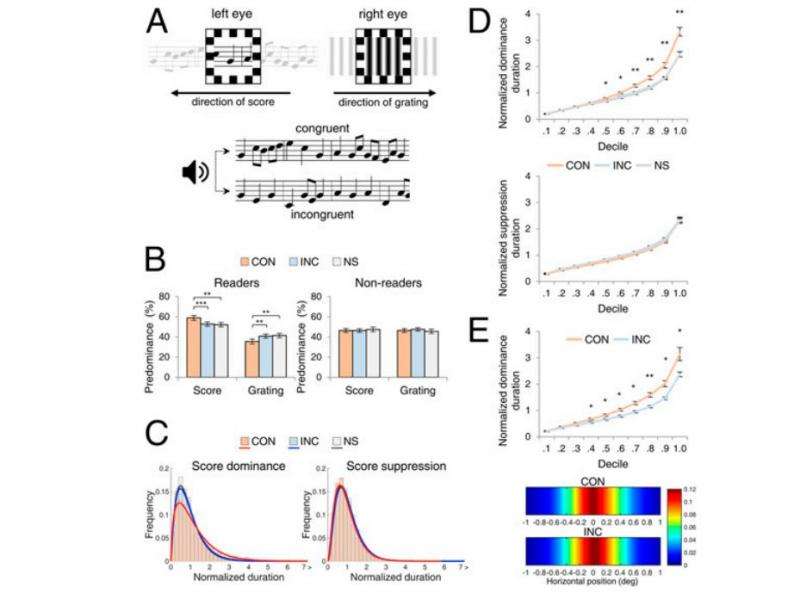

Musicians are ideal subjects for studying the congruence between abstract visual representations and sound because they are familiar with symbolic musical notation, and can therefore experience melodic structure through both sound and vision. The researchers designed an experiment in which a group of musicians and nonmusicians performed a conventional binocular rivalry task, pressing buttons to track alternating periods of dominance and suppression between dissimilar monocular displays: one eye saw a musical score scrolling through the display; the other eye saw a vertical, drifting grating.

Participants tracked their alternations in perception in one of three audiovisual conditions: while listening to a melody that was congruent with the score; while listening to a melody that was incongruent with the score; or while not listening to any sound at all.

Results

In the experiment, musical scores in rivalry enjoyed significantly greater predominance than the image of the drifting grating among the participants who were able to read music. Predominance was not significantly different between the grating and the musical score for participants who could not read music.
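For readers unfamiliar with the measure, predominance in rivalry studies is commonly expressed as the share of total tracked viewing time during which one stimulus is reported as dominant. The following is a minimal sketch of that calculation, not the authors' analysis code: the function name `predominance`, the record format, and the durations are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def predominance(periods):
    """Return each percept's share of the total tracked time.

    `periods` is a list of (percept_label, duration_seconds) pairs,
    one per dominance period marked by the participant's button presses.
    This record format is an assumption made for illustration.
    """
    totals = defaultdict(float)
    for label, duration in periods:
        totals[label] += duration

    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}  # nothing was tracked, so no shares can be computed

    return {label: total / grand_total for label, total in totals.items()}


# Hypothetical tracking record for a single trial (made-up numbers,
# not data from the study).
trial = [("score", 3.0), ("grating", 2.0), ("score", 4.0), ("grating", 1.0)]
shares = predominance(trial)
print(shares)
```

In this made-up trial the score is dominant for 7 of the 10 tracked seconds, so its predominance is 0.7. Comparing such shares across the congruent, incongruent, and no-sound conditions is the kind of contrast the article describes.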

The researchers conclude that visual awareness during states of interocular competition is influenced by nonvisual information. They theorize that musical training might play a role in the development of unusually strong connections between the brain's perception and action systems. They further attribute the multisensory interactions observed in the study to information combinations across different sensory modalities, rather than to any sensory-neural convergence of auditory and visual signals.

As the authors write, these results add to the growing evidence that the process of sensory combination is a form of probabilistic inference "with dynamic weightings of different sources of information being governed by their reliability and likelihood." Additionally, the bisensory congruence effect observed in the participants with musical training occurred only when the musical score was perceptually dominant, not when it was being suppressed from awareness by the image of the grating in the opposite eye. "Taken together, these results demonstrate robust audiovisual interaction based on high-level, symbolic representations and its predictive influence on perceptual dynamics during binocular rivalry," the authors write.
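The idea of weighting sources by their reliability can be illustrated with the textbook inverse-variance rule for combining two noisy estimates of the same quantity. This is a generic sketch of that rule, not the model used in the study; the function name `combine_cues` and the numbers are assumptions for illustration only.

```python
def combine_cues(estimates, variances):
    """Combine noisy estimates using inverse-variance weights.

    Each estimate is weighted by its reliability (1 / variance), so a
    more reliable source pulls the combined estimate toward itself.
    Returns the combined estimate and its variance.
    """
    weights = [1.0 / v for v in variances]
    total_weight = sum(weights)
    combined = sum(w * x for w, x in zip(weights, estimates)) / total_weight
    combined_variance = 1.0 / total_weight
    return combined, combined_variance


# Hypothetical example: a reliable "visual" estimate (variance 1.0)
# and a less reliable "auditory" estimate (variance 4.0).
estimate, variance = combine_cues([10.0, 14.0], [1.0, 4.0])
print(estimate, variance)
```

With these made-up values the combined estimate lies closer to the more reliable source, and its variance is lower than that of either source alone.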

More information: "Melodic sound enhances visual awareness of congruent musical notes, but only if you can read music." PNAS 2015; published ahead of print June 15, 2015, DOI: 10.1073/pnas.1509529112

Abstract

Predictive influences of auditory information on resolution of visual competition were investigated using music, whose visual symbolic notation is familiar only to those with musical training. Results from two experiments using different experimental paradigms revealed that melodic congruence between what is seen and what is heard impacts perceptual dynamics during binocular rivalry. This bisensory interaction was observed only when the musical score was perceptually dominant, not when it was suppressed from awareness, and it was observed only in people who could read music. Results from two ancillary experiments showed that this effect of congruence cannot be explained by differential patterns of eye movements or by differential response sluggishness associated with congruent score/melody combinations. Taken together, these results demonstrate robust audiovisual interaction based on high-level, symbolic representations and its predictive influence on perceptual dynamics during binocular rivalry.

Journal information: Proceedings of the National Academy of Sciences

© 2015 Phys.org
