NIST seeks calibration methodologies for determining the accuracy of micro-flows

Many medical treatments, both new and old, involve extremely small doses of powerful drugs in liquid form, from scorpion venom for cancer research to opioid analgesics for pain control. Often, these substances are administered on the scale of a few tens of nanoliters (nL, billionths of a liter) at a time.

But at present there are no widely accepted standards or authoritative calibration methodologies for determining the accuracy of such micro-flows. That is a situation of growing concern as implantable drug-delivery devices become more common and as potent and costly new agents designed to be dispensed in minute quantities – milligrams per day or less – become more prevalent.

"We want to be ahead of the game," says James Schmidt of NIST's Fluid Metrology Group. "Nobody is complaining yet, but manufacturers may not know as precisely as they would like to how much fluid is being dispensed by technologies such as implantable devices. We'd like to help them achieve that accuracy. It would also benefit makers of other devices that function at the same dimensions, such as DNA identifiers and lab-on-a-chip chemical analyzers."

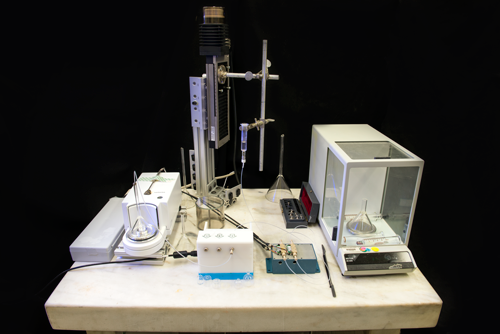

So Schmidt, with colleagues John Wright and Jodie Pope, has begun the process of establishing a Micro-Flow Calibration Facility at NIST with the objective of developing chip-scale flowmeters that can serve as transfer standards, or be distributed as standard reference devices. (Development of miniature, disseminated, NIST-traceable standards, which can eliminate much of the need for customers to bring instruments to NIST for calibration, is part of an agency-wide initiative known as "NIST-on-a-chip.")

"The smaller the flow, the harder it is to get the same relative accuracy," Schmidt says. "Typical uncertainties in this range are around 5 percent. We're shooting for about 1 percent for flows in the range of 1 milliliter per minute to 100 nL per minute." (A milliliter is equivalent in volume to about 20 drops of water; 100 nanoliters is 10,000 times smaller.)

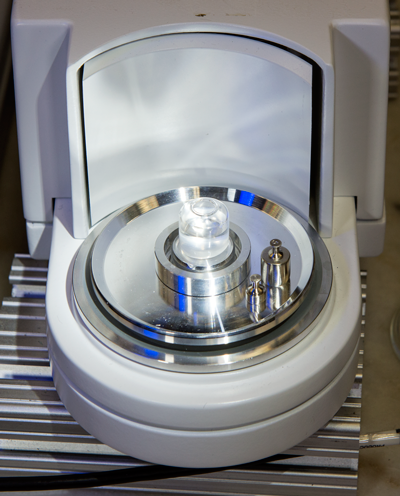

That's a formidable goal, given the difficulty of controlling sources of error. Like most micro-flow measurement systems, NIST's prototype relies on continuously measuring the accumulating mass of liquid (typically highly purified water for experimental purposes). The liquid travels from a pressurized source through small-diameter channels, into and through micro-flow meters, and then into a container placed on a very accurate weigh scale that takes readings at designated time intervals.

The flowmeter readings are then compared to the mass data – which are traceable to the International System of Units via carefully calibrated NIST standard masses – to determine the device's accuracy.
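The comparison described above can be sketched in a few lines of Python. This is a minimal illustration, not NIST's procedure: the function names, the least-squares fit, and the water density value are assumptions chosen for the example. It fits a straight line to the accumulated mass readings, converts the slope to a volume flow, and expresses a meter's reading as a percent deviation from that reference.

```python
# Hypothetical sketch: names and numbers are illustrative, not NIST's method.

# Approximate density of pure water near 20 degrees C (assumed value).
WATER_DENSITY_G_PER_ML = 0.998


def mass_flow_g_per_s(times_s, masses_g):
    """Least-squares slope of accumulated mass versus time."""
    n = len(times_s)
    if n < 2 or n != len(masses_g):
        raise ValueError("need at least two paired readings")
    t_mean = sum(times_s) / n
    m_mean = sum(masses_g) / n
    num = sum((t - t_mean) * (m - m_mean) for t, m in zip(times_s, masses_g))
    den = sum((t - t_mean) ** 2 for t in times_s)
    return num / den


def volume_flow_nl_per_min(times_s, masses_g,
                           density_g_per_ml=WATER_DENSITY_G_PER_ML):
    """Convert the fitted mass flow to nanoliters per minute."""
    ml_per_s = mass_flow_g_per_s(times_s, masses_g) / density_g_per_ml
    return ml_per_s * 1e6 * 60.0  # mL -> nL, per second -> per minute


def meter_error_percent(meter_nl_per_min, reference_nl_per_min):
    """Deviation of the meter reading from the gravimetric reference."""
    return 100.0 * (meter_nl_per_min - reference_nl_per_min) / reference_nl_per_min
```

Fitting a slope over many readings, rather than differencing two of them, is one common way to reduce the influence of noise in individual balance readings; the source does not say which approach the NIST system uses.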

In NIST's prototype, the fluid pressure is provided by gravity: The source tube is raised above the measurement level. (See photo.) The lab setup may seem straightforward enough. But at the quantities of interest, the water's surface tension and evaporation rate become major factors affecting the results.

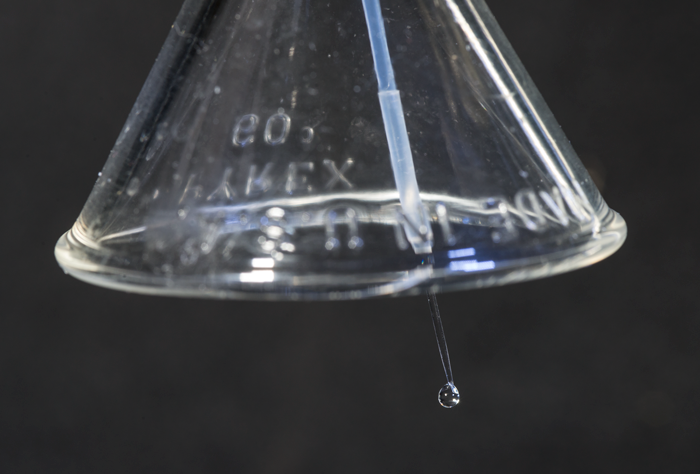

Surface tension produces a complex push-pull competition of forces as water molecules are attracted to each other and to the glassware in the experiment. The balance of forces changes depending on the angular configuration of pipettes and other glassware, as well as any impurities on the inside surfaces of the glass. In addition, given the very small volumes of fluid, evaporation is a significant factor, and has to be minimized and corrected for.
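One simple way to picture an evaporation correction is sketched below in Python. It is an assumption-laden illustration rather than the NIST method: it supposes the evaporation rate is estimated from the mass lost on the balance while the inflow is stopped, and that the same rate applies while liquid is being collected. The function names are hypothetical.

```python
# Hypothetical sketch of an evaporation correction; not NIST's procedure.


def evaporation_rate_g_per_s(mass_start_g, mass_end_g, elapsed_s):
    """Mass loss rate seen on the balance with the inflow stopped.

    A positive result means the container is losing mass.
    """
    if elapsed_s <= 0:
        raise ValueError("elapsed time must be positive")
    return (mass_start_g - mass_end_g) / elapsed_s


def corrected_mass_flow_g_per_s(apparent_flow_g_per_s, evap_rate_g_per_s):
    """Add back the mass that evaporated during collection."""
    return apparent_flow_g_per_s + evap_rate_g_per_s
```

For scale, a flow of 100 nL of water per minute corresponds to roughly 1.7 micrograms per second, so even a loss of a tenth of a microgram per second would bias an uncorrected result by several percent.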

Other kinds of problems arise because there is necessarily a lag time of a few tenths of a second in reading the mass of the accumulated water, which is constantly changing. By the time a mass measurement is recorded, the actual mass on the weigh scale has increased.
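The size of this effect follows from simple arithmetic: the mass added during the lag is the mass flow multiplied by the lag time. The Python sketch below illustrates that relationship; the function names and the 0.3-second lag in the example are assumptions picked from within the "few tenths of a second" mentioned above, not measured values.

```python
# Hypothetical sketch of the lag effect; values are illustrative only.


def mass_gained_during_lag_g(mass_flow_g_per_s, lag_s):
    """Mass that arrives on the balance between reading and recording."""
    return mass_flow_g_per_s * lag_s


def shift_timestamps(record_times_s, lag_s):
    """Assign each reading to the moment the mass was actually sensed."""
    return [t - lag_s for t in record_times_s]


# Example: 1 mL/min of water is about 0.998 g/min, or about 0.0166 g/s.
# With an assumed 0.3 s lag, about 5 mg arrives before the value is recorded.
example_mg = mass_gained_during_lag_g(0.998 / 60.0, 0.3) * 1000.0
```

If the lag were perfectly constant, it would shift every timestamp by the same amount and leave a fitted mass-versus-time slope unchanged; it is variation in the lag, or in the flow itself, that would need to be quantified.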

Schmidt and colleagues are working to quantify, control, and compensate for those and other variables in order to produce a comprehensive uncertainty budget for the system. Already, however, it has performed very well in brief comparisons with micro-flow measurement apparatus at the national metrology institutes (NMIs) of Switzerland and Denmark.

Each of the NMIs uses a different technique to limit evaporation and capture the liquid, and both use a different kind of pressure source than NIST does. Even so, the first-generation NIST system agreed to within 2 to 3 percent with the values measured at both NMIs.

"That's encouraging," Schmidt says, "but we still have a long way to go. We need to be able to reproducibly calibrate this thing to something like a tenth of a percent if we can get it. Then we want to calibrate some of our NIST colleagues' meters, and then hopefully we'll open the doors."

"Now that Jim has a functional system, we are turning our attention to applying it to new micro-flow meter designs and eventually nanoscale fluid mechanics problems," says Wright. "Our experience in characterizing the behavior and uncertainty of macro-scale flow meters is being applied to the smallest of flow meters. These meters are needed for faster and cheaper medical diagnostic tests and treatments."

Provided by National Institute of Standards and Technology