Researchers enabling smartphones to identify objects

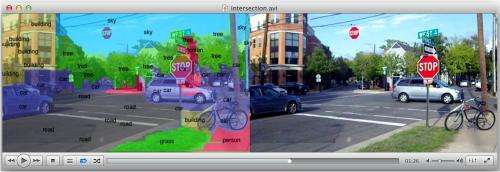

(Phys.org) —Researchers are working to enable smartphones and other mobile devices to understand and immediately identify objects in a camera's field of view, overlaying lines of text that describe items in the environment.

"It analyzes the scene and puts tags on everything," said Eugenio Culurciello, an associate professor in Purdue University's Weldon School of Biomedical Engineering and the Department of Psychological Sciences.

The innovation could find applications in "augmented reality" technologies such as Google Glass, as well as in facial recognition systems and self-driving robotic cars.

"When you give vision to machines, the sky's the limit," Culurciello said.

The concept is called deep learning because it relies on many stacked layers of artificial neural networks, loosely modeled on how the human brain processes information. Internet companies already use deep-learning software to let users search the Web for pictures and video that have been tagged with keywords. Such tagging, however, has not been feasible on portable devices and home computers.

"The deep-learning algorithms that can tag video and images require a lot of computation, so it hasn't been possible to do this in mobile devices," said Culurciello, who is working with Berin Martini, a research associate at Purdue, and doctoral students.

The research group has developed software and hardware and shown how they could be used to enable a conventional smartphone processor to run deep-learning software.

Research findings were presented in a poster paper during the Neural Information Processing Systems conference in Nevada in December. The poster paper was prepared by Martini, Culurciello and graduate students Jonghoon Jin, Vinayak Gokhale, Aysegul Dundar, Bharadwaj Krishnamurthy and Alfredo Canziani.

The new deep-learning capability represents a potential artificial-intelligence upgrade for smartphones. Research findings have shown the approach is about 15 times more efficient than conventional graphics processors, and an additional 10-fold improvement is possible.

"Now we have an approach for potentially embedding this capability onto mobile devices, which could enable these devices to analyze videos or pictures the way you do now over the Internet," Culurciello said. "You might have 10,000 images in your computer, but you can't really find an image by searching a keyword. Say you wanted to find pictures of yourself at the beach throwing a football. You cannot search for these things right now."

The deep-learning software works by processing information in successive layers.

"They are combined hierarchically," Culurciello said. "For facial recognition, one layer might recognize the eyes, another layer the nose, and so on until a person's face is recognized."
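To make the idea of hierarchically combined layers concrete, here is a minimal toy sketch in plain Python. It is not the Purdue group's software; it simply assumes a standard convolutional design in which each layer applies a small filter (kernel), discards negative responses and then downsamples, so every layer works on the previous layer's output. The function names (`conv2d`, `relu`, `max_pool`, `conv_layer`, `forward`) and the hand-picked kernels are hypothetical. Real networks learn their kernels from data and use many filters per layer; trained networks are generally observed to respond to edge-like patterns in early layers and to larger parts, such as eyes or noses, in later ones.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide the kernel over the image (no padding) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(x: Matrix) -> Matrix:
    """Keep positive responses, zero out negative ones."""
    return [[max(0.0, v) for v in row] for row in x]


def max_pool(x: Matrix, size: int = 2) -> Matrix:
    """Downsample by taking the maximum of each non-overlapping size x size window."""
    return [
        [
            max(x[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, len(x[0]) - size + 1, size)
        ]
        for i in range(0, len(x) - size + 1, size)
    ]


def conv_layer(x: Matrix, kernel: Matrix) -> Matrix:
    """One layer: filter, rectify, downsample."""
    return max_pool(relu(conv2d(x, kernel)))


def forward(image: Matrix, kernels: List[Matrix]) -> Matrix:
    """Feed the image through the layers in order; each builds on the last."""
    x = image
    for kernel in kernels:
        x = conv_layer(x, kernel)
    return x


if __name__ == "__main__":
    # Toy 10x10 image: dark left half, bright right half (one vertical edge).
    image = [[0] * 5 + [1] * 5 for _ in range(10)]
    kernels = [
        [[-1, 1], [-1, 1]],  # layer 1: responds to dark-to-bright vertical edges
        [[1, 1], [1, 1]],    # layer 2: combines edge responses over a wider area
    ]
    print(forward(image, kernels))
```

With the edge image, the first layer produces a strong response only along the edge, and the second layer aggregates those responses into a single summary value; an all-dark image produces no response at either layer.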

Deep learning could also help viewers interpret technical details in images.

"Say you are viewing medical images and looking for signs of cancer," he said. "A program could overlay the pictures with descriptions."

Provided by Purdue University