June 3, 2012 report

ShakeID tracks touch action in multi-user display

(Phys.org) -- How do you determine who is doing the touching on a multi-user touch display? Microsoft Research has published a paper that presents a technique for doing so. The researchers fuse Kinect body tracking, mobile-device inertial sensing and multi-touch interactive displays to associate multi-touch interactions with individual users and their accelerometer-equipped mobile devices. The technique, called ShakeID, links a specific user's touch contacts on an interactive display to the mobile device that user is holding. The phone's on-board sensors and the display's touch sensing together drive the association.

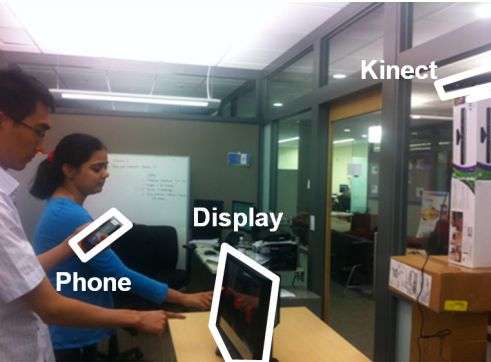

The researchers say the technique stands apart from other approaches, which require bringing the phone into physical contact with the display: ShakeID only requires the user to hold the phone while touching the display. The setup involves a Kinect camera, a multi-touch display and two accelerometer-equipped phones. Specifically, the experimenters used the Microsoft Kinect for Windows SDK to track the hands of multiple users, the Microsoft Surface 2.0 SDK for the multi-touch display, and two Windows Phone smartphones.

If two users touch a display simultaneously in different locations to grab content, ShakeID can associate each touch with a specific user and transfer the correct content to each user's personal device.
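The article does not spell out how a touch point is tied to a person. One plausible piece, sketched here under our own assumptions, is to give each touch to the user whose Kinect-tracked hand is nearest the contact point, then look up the phone already paired with that user. The names `assign_touches`, `hands` and `phone_of_user`, the projection of hand positions into display coordinates, and the precomputed user-to-phone pairing are all hypothetical; this is not the paper's implementation.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def assign_touches(
    touches: List[Point],
    hands: Dict[str, Point],
    phone_of_user: Dict[str, str],
) -> List[str]:
    """Return, for each touch, the id of the phone that should receive content.

    hands: hypothetical user id -> tracked hand position, assumed to be already
    projected into display coordinates.
    phone_of_user: hypothetical user id -> phone id, e.g. from motion matching.
    """
    targets = []
    for touch in touches:
        # The user whose tracked hand is closest to the contact owns the touch.
        user = min(hands, key=lambda u: math.dist(hands[u], touch))
        targets.append(phone_of_user[user])
    return targets


if __name__ == "__main__":
    hands = {"alice": (0.2, 0.5), "bob": (1.4, 0.6)}
    pairing = {"alice": "phone-A", "bob": "phone-B"}
    # Two simultaneous touches, one near each user's hand.
    print(assign_touches([(1.35, 0.55), (0.25, 0.45)], hands, pairing))
    # prints ['phone-B', 'phone-A']
```

Nearest-hand assignment is the simplest rule; it would need refinement when two users reach into the same region of the display.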

Capabilities like this could make shared interactive displays for walk-up use, in conference rooms and office hallways, more useful. The study notes that other applications of interactive displays also bring in smaller devices such as mobile phones.

The researchers showed that ShakeID identifies users by cross-correlating acceleration data from the smartphones people carry with hand acceleration captured by Kinect. In other words, ShakeID matches the motion sensed by each device to the motion observed by a Microsoft Kinect camera pointed at the users standing in front of the touch display. To validate the approach, the researchers conducted a 14-person user study and reported accuracy rates of 92% and higher.
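A minimal sketch of the cross-correlation idea, assuming both streams have already been resampled to a common rate and roughly time-aligned, that gravity has been removed from the phone's accelerometer readings, and that Kinect hand positions have been differentiated twice into acceleration. It compares acceleration magnitudes, searches a small lag window, and picks the hand with the highest peak Pearson correlation. The function names, the `max_lag` window and the magnitude-based comparison are illustrative choices, not details taken from the paper.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def magnitude(samples: Sequence[Vec3]) -> List[float]:
    # Compare magnitudes so the phone's axis orientation, which differs
    # from the Kinect's coordinate frame, does not matter.
    return [math.sqrt(x * x + y * y + z * z) for x, y, z in samples]


def normalized_xcorr(a: Sequence[float], b: Sequence[float], max_lag: int) -> float:
    """Peak Pearson correlation of equal-length a and b over lags -max_lag..max_lag.

    Returns -1.0 when no lag gives a usable score (e.g. one signal never moves).
    """
    n = len(a)
    best = -1.0
    for lag in range(-max_lag, max_lag + 1):
        # Shift one series against the other to absorb small sensor latency.
        if lag >= 0:
            xs, ys = a[lag:], b[: n - lag]
        else:
            xs, ys = a[: n + lag], b[-lag:]
        m = len(xs)
        if m < 2:
            continue
        mx, my = sum(xs) / m, sum(ys) / m
        cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
        sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
        sy = math.sqrt(sum((y - my) ** 2 for y in ys))
        if sx == 0.0 or sy == 0.0:
            continue  # a flat signal carries no matching information
        best = max(best, cov / (sx * sy))
    return best


def best_hand(phone: Sequence[Vec3], hands: Dict[str, Sequence[Vec3]], max_lag: int = 5) -> str:
    """Return the id of the tracked hand whose motion best matches the phone's."""
    p = magnitude(phone)
    scores = {hid: normalized_xcorr(p, magnitude(acc), max_lag) for hid, acc in hands.items()}
    return max(scores, key=scores.get)


if __name__ == "__main__":
    phone = [(math.sin(0.7 * t), 0.0, 0.0) for t in range(40)]
    hands = {
        "A": [(0.0, 2.0 * math.sin(0.7 * t), 0.0) for t in range(40)],  # same motion, scaled
        "B": [(math.cos(0.3 * t) + 1.5, 0.0, 0.0) for t in range(40)],  # unrelated motion
    }
    print(best_hand(phone, hands))  # prints A
```

Comparing magnitudes trades some discriminating power for independence from how the phone is held; a fuller system might also smooth the signals or align the two coordinate frames.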

The authors note, though, an important limitation: the case where the hand holding the phone is stationary, since a motionless phone produces no acceleration signal to match. The researchers acknowledge this limit, but point out that in real use people holding a phone are unlikely to stay still, especially in front of larger displays.
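In a correlation-based matcher, this limitation shows up as a nearly flat signal: normalized correlation divides by the signal's spread, so a still phone leaves nothing to compare. The sketch below shows one way a matcher might detect that case and decline to decide rather than guess. The `min_std` threshold, its units and both function names are hypothetical, not values or APIs from the study.

```python
import statistics
from typing import Dict, Optional, Sequence


def is_stationary(accel_magnitudes: Sequence[float], min_std: float = 0.05) -> bool:
    """True if the signal varies too little to be matched reliably.

    min_std (assumed m/s^2) is a hypothetical threshold, not from the paper.
    """
    return statistics.pstdev(accel_magnitudes) < min_std


def choose_user(
    phone_magnitudes: Sequence[float],
    scores: Dict[str, float],
    min_std: float = 0.05,
) -> Optional[str]:
    """Return the best-scoring user id, or None when the phone is too still."""
    if is_stationary(phone_magnitudes, min_std):
        return None  # defer: wait for more motion instead of guessing
    return max(scores, key=scores.get)
```

Returning `None` lets the caller keep buffering samples until the user moves, which matches the researchers' observation that people rarely hold a phone perfectly still.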

The paper is titled, “Your Phone or Mine? Fusing Body, Touch and Device Sensing for Multi-User Device-Display Interaction.”

Microsoft's Kinect made other news recently in London, where St. Thomas Hospital is testing Kinect's gesture controls and voice commands with surgeons. The surgical team interacts with a computer that shows a 3-D image of the part of the body being operated on. Because surgeons need not touch computer peripherals during an operation, the risk of contamination is lower. Standing straight with arms raised, the surgeon issues commands to a Kinect sensor beneath a monitor displaying a 3-D image of the patient's damaged body part, and can pan across, zoom in and out, rotate the image, lock it in place and add markers.

More information: Research paper: research.microsoft.com/pubs/16 … 080/ShakeIDchi12.pdf

© 2012 Phys.Org