Research seeks to make robotic 'human patient simulators'

A young doctor leans over a patient who has been in a serious car accident and must surely be in pain. The doctor's trauma team examines the patient's pelvis and rolls her onto her side to check her spine. They scan the patient's abdomen with a rapid ultrasound machine and find fluid. They insert a tube in her nose. Throughout the procedure, the patient's face remains rigid, showing no signs of pain.

The patient's expressionless face isn't a sign of stoicism: the patient is a robot, not a person. The trauma team is training on a "human patient simulator" (HPS), a training tool that enables clinicians to practice their skills before treating real patients. Over the past several decades, HPS systems have evolved from mannequins into machines that can breathe, bleed and expel fluids. Some models have pupils that contract when hit by light. Others have entire physiologies that can change. They come in life-sized forms that resemble both children and adults.

But they could be better, said Laurel D. Riek, a computer science and engineering professor at the University of Notre Dame. As remarkable as modern patient simulators are, they have two major limitations.

"Their faces don't actually move, and they are unable to sense or respond to the environment," she said.

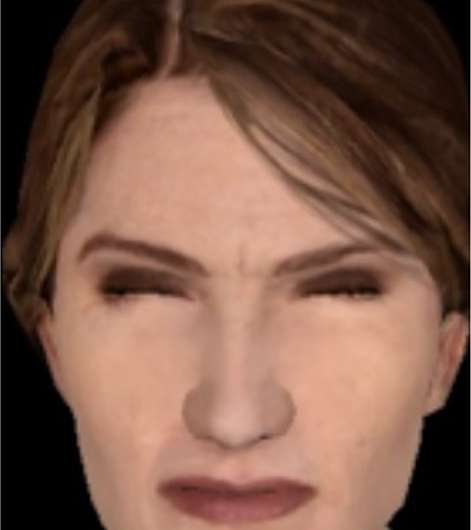

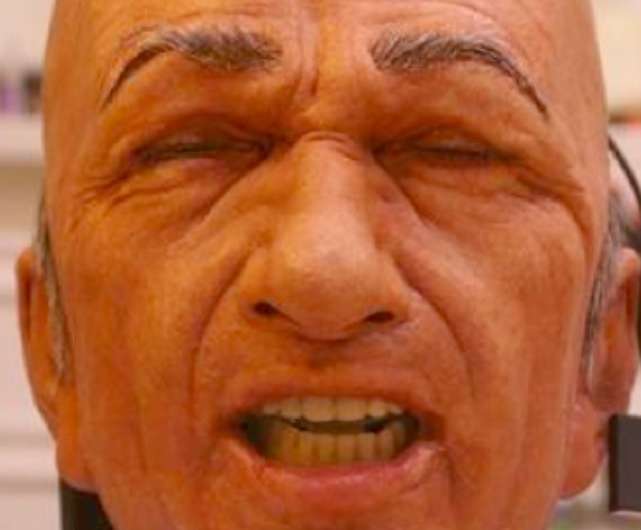

Riek, a roboticist, is designing the next generation of HPS systems. Her NSF-supported research explores new ways for the robots to exhibit realistic, clinically relevant facial expressions and to respond automatically to clinicians in real time.

"This work will enable hundreds of thousands of doctors, nurses, EMTs, firefighters and combat medics to practice their treatment and diagnostic skills extensively and safely on robots before treating real patients," she said.

One novel aspect of Riek's research is the development of new algorithms that use data from real patients to generate simulated facial characteristics. For example, Riek and her students recently completed a pain simulation project and are the first research group to synthesize pain expressions using patient data. The work won both the best overall paper and best student paper awards at the International Meeting on Simulation in Healthcare, the top medical simulation conference.

Riek's team is now working on an interactive stroke simulator that can automatically sense and respond to learners as they work through a case. Stroke is the fifth-leading cause of death in the United States, yet many of these deaths could be prevented through faster diagnosis and treatment.

"With current technology, clinicians are sometimes not learning the right skills. They are not able to read diagnostic clues from the face," she said.

Yet learning to read those clues could yield lifesaving results. Preventable medical errors in hospitals are the third-leading cause of death in the United States.

"What's really striking about this is that these deaths are completely preventable," Riek said.

One factor contributing to those errors is clinicians missing clues and then going down incorrect diagnostic paths, using incorrect treatments or wasting time. Reading facial expressions, Riek said, can help doctors make better diagnoses, so it is important that their training reflect this.

In addition to modeling and synthesizing patient facial expressions, Riek and her team are building a new, fully expressive robot head. By using 3-D printing, they aim to produce a low-cost robot that will one day be available to researchers and hobbyists as well as clinicians.

The team has engineered the robot to have interchangeable skins, so that the robot's age, race and gender can be easily changed. This will enable researchers to explore social factors or "cultural competency" in new ways.

"Clinicians can create different patient histories and backgrounds and can look at subtle differences in how healthcare workers treat different kinds of patients," Riek said.

Riek's work has the potential to help address the patient safety problem by enabling clinicians to take part in simulations that are impossible with existing technology.

Provided by National Science Foundation